Revolutionizing Code Representation: The Power of Code Embeddings

Code embeddings transform code snippets into dense vectors that AI-driven programming tools can work with. Much like word embeddings in NLP, they capture semantic relationships, which helps machines understand and manipulate code more effectively.

Unlocking the Potential of Code Embeddings

Code embeddings convert complex code structures into numerical vectors that capture what the code does. Unlike purely lexical methods such as keyword or token matching, embeddings focus on the semantic relationships between code components, which supports tasks like code search, code completion, and bug detection.
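
To make code search concrete, here is a minimal sketch of how embedding vectors are typically compared: stored snippets are ranked by cosine similarity to a query vector. The snippet names and the three-dimensional vectors are made up for illustration; a real system would obtain much higher-dimensional vectors from a trained encoder.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query_vec, index, top_k=2):
    """Return the top_k (name, score) pairs, most similar first."""
    scored = [(name, cosine_similarity(query_vec, vec)) for name, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical, hand-picked vectors standing in for encoder output.
index = {
    "sum_list_loop": [0.9, 0.1, 0.0],
    "sum_list_builtin": [0.85, 0.15, 0.05],
    "read_csv_file": [0.05, 0.2, 0.95],
}
query = [0.8, 0.2, 0.0]  # imagined embedding of "add up the numbers in a list"

for name, score in search(query, index):
    print(f"{name}: {score:.3f}")
```

Cosine similarity ignores vector length and compares only direction, which is why it is a common default for embedding search.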

Imagine two Python functions that look different but perform the same operation. A robust code embedding would map them to nearby vectors, reflecting their functional similarity despite their textual differences.
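
Here is a concrete instance of that scenario: two hypothetical functions, `total_loop` and `total_builtin`, that return the same result but share little text. The sketch checks that they agree on a few inputs and measures their lexical overlap with a simple Jaccard score over Python tokens. The Jaccard score is only a lexical baseline, not an embedding; the point is that a good embedding should place these two functions close together even though this score is low.

```python
import io
import tokenize

# Two hypothetical snippets with the same behaviour but different wording.
SRC_LOOP = '''
def total_loop(values):
    result = 0
    for v in values:
        result += v
    return result
'''
SRC_BUILTIN = '''
def total_builtin(numbers):
    return sum(numbers)
'''

# Execute the snippets so their behaviour can be compared directly.
namespace = {}
exec(SRC_LOOP, namespace)
exec(SRC_BUILTIN, namespace)
total_loop = namespace["total_loop"]
total_builtin = namespace["total_builtin"]


def lexical_tokens(source):
    """Set of name, operator and number tokens appearing in the source."""
    kinds = {tokenize.NAME, tokenize.OP, tokenize.NUMBER}
    tokens = tokenize.generate_tokens(io.StringIO(source).readline)
    return {tok.string for tok in tokens if tok.type in kinds}


def jaccard(a, b):
    """Fraction of distinct tokens the two sets share (0 = none, 1 = all)."""
    return len(a & b) / len(a | b)


# Same result on every sample input...
for data in ([], [1, 2, 3], [-4, 10, 0.5]):
    assert total_loop(data) == total_builtin(data)

# ...yet only a small share of their tokens overlap.
overlap = jaccard(lexical_tokens(SRC_LOOP), lexical_tokens(SRC_BUILTIN))
print(f"lexical overlap: {overlap:.2f}")
```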

Crafting Code Embeddings: A Deep Dive

Creating code embeddings typically means training a neural network on code snippets, their syntax, and their comments so that it learns how they relate to one another. The usual pipeline treats code as a sequence of tokens, trains a neural network on those sequences, and uses the resulting vectors to measure how similar two snippets are.
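
As a rough sketch of the "code as sequences" step, assuming tokens like those produced by an AST-based tokenizer: build a vocabulary, map tokens to integer ids, look each id up in an embedding table, and average the rows into one vector. The helper names (`build_vocab`, `encode`, `make_embedding_table`, `embed`) are hypothetical, and the table here is random and untrained; in a real model it, and usually a deeper encoder on top of it, would be learned during training.

```python
import random


def build_vocab(token_sequences, unk="<UNK>"):
    """Map each distinct token to an integer id; id 0 is reserved for unknown tokens."""
    vocab = {unk: 0}
    for seq in token_sequences:
        for tok in seq:
            if tok not in vocab:
                vocab[tok] = len(vocab)
    return vocab


def encode(tokens, vocab, unk="<UNK>"):
    """Turn a token sequence into integer ids, using the unknown id for unseen tokens."""
    return [vocab.get(tok, vocab[unk]) for tok in tokens]


def make_embedding_table(vocab_size, dim, seed=0):
    """Randomly initialised lookup table (one row per token); training would tune it."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(vocab_size)]


def embed(ids, table):
    """Mean-pool the token vectors into one fixed-size vector (assumes ids is non-empty)."""
    dim = len(table[0])
    pooled = [0.0] * dim
    for i in ids:
        for d in range(dim):
            pooled[d] += table[i][d]
    return [x / len(ids) for x in pooled]


corpus = [
    ["def", "greet", "name", "print", "STRING", "name", "STRING"],
    ["def", "add", "a", "b", "return", "a", "b"],
]
vocab = build_vocab(corpus)
table = make_embedding_table(len(vocab), dim=4)
ids = encode(["def", "greet", "unseen_name"], vocab)
vector = embed(ids, table)
print(ids)          # [1, 2, 0]
print(len(vector))  # 4
```

Mean pooling is the simplest way to turn a variable-length sequence into a fixed-size vector; trained encoders usually replace it with something more expressive.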

Get a glimpse of how code snippets can be preprocessed for embedding in Python:

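```python
import ast


def tokenize_code(code_string):
    """Flatten Python source into identifier and literal tokens.

    Nodes are visited depth-first, which follows source order for
    simple snippets like the one below.
    """
    tree = ast.parse(code_string)
    tokens = []

    def visit(node):
        if isinstance(node, ast.FunctionDef):
            tokens.extend(['def', node.name])
        elif isinstance(node, ast.arg):
            tokens.append(node.arg)  # function parameter
        elif isinstance(node, ast.Name):
            tokens.append(node.id)   # variable or function reference
        elif isinstance(node, ast.Constant):
            # ast.Str and ast.Num are deprecated since Python 3.8;
            # all literals are ast.Constant nodes.
            if isinstance(node.value, str):
                tokens.append('STRING')
            elif isinstance(node.value, (int, float, complex)) and not isinstance(node.value, bool):
                tokens.append('NUMBER')
        # Add more node types as needed
        for child in ast.iter_child_nodes(node):
            visit(child)

    visit(tree)
    return tokens


# Example usage
code = """
def greet(name):
    print("Hello, " + name + "!")
"""

tokens = tokenize_code(code)
print(tokens)
# Output: ['def', 'greet', 'name', 'print', 'STRING', 'name', 'STRING']
```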

Exploring Diverse Approaches to Code Embedding

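Code embedding methods fall into three main categories: token-based, tree-based, and graph-based. Token-based methods treat code as a flat sequence of tokens, tree-based methods operate on its abstract syntax tree (AST), and graph-based methods add further relations, such as data or control flow, between program elements. Each approach captures code syntax and semantics differently.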
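
To make the three categories concrete, the sketch below derives a token-based, a tree-based, and a simplified graph-based view of the same small function using only Python's standard `tokenize` and `ast` modules. The "next_use" edges linking successive occurrences of a variable are a deliberately simplified stand-in for the data-flow edges that graph-based methods typically add; real approaches use richer edge types and feed each view to a matching encoder (a sequence model, a tree model, or a graph neural network).

```python
import ast
import io
import tokenize

SOURCE = "def scale(x, k):\n    y = x * k\n    return y\n"


def token_view(source):
    """Token-based: the flat sequence of lexical tokens."""
    layout = {tokenize.NEWLINE, tokenize.NL, tokenize.INDENT,
              tokenize.DEDENT, tokenize.ENDMARKER}
    tokens = tokenize.generate_tokens(io.StringIO(source).readline)
    return [tok.string for tok in tokens if tok.type not in layout]


def children(node):
    """AST children, skipping the Load/Store context markers."""
    return [c for c in ast.iter_child_nodes(node)
            if not isinstance(c, ast.expr_context)]


def tree_view(source):
    """Tree-based: AST node types in pre-order (parent before children)."""
    order = []

    def visit(node):
        order.append(type(node).__name__)
        for child in children(node):
            visit(child)

    visit(ast.parse(source))
    return order


def graph_view(source):
    """Graph-based (simplified): AST parent->child edges plus 'next_use'
    edges between successive occurrences of the same variable."""
    tree = ast.parse(source)
    ids = {}

    def node_id(node):
        return ids.setdefault(node, len(ids))

    edges = []
    for parent in ast.walk(tree):
        for child in children(parent):
            edges.append((node_id(parent), node_id(child), "child"))

    # Parameters (ast.arg) and variable references (ast.Name), in source order.
    uses = [n for n in ast.walk(tree) if isinstance(n, (ast.arg, ast.Name))]
    uses.sort(key=lambda n: (n.lineno, n.col_offset))
    last_seen = {}
    for n in uses:
        name = n.arg if isinstance(n, ast.arg) else n.id
        if name in last_seen:
            edges.append((node_id(last_seen[name]), node_id(n), "next_use"))
        last_seen[name] = n
    return edges


print(token_view(SOURCE))
print(tree_view(SOURCE))
print([e for e in graph_view(SOURCE) if e[2] == "next_use"])
```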

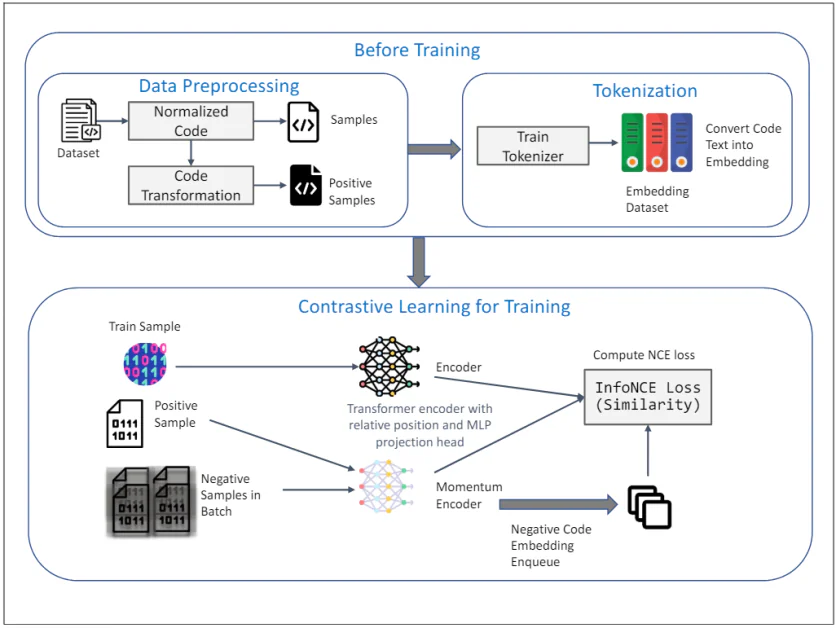

TransformCode: Redefining Code Embedding

TransformCode is a framework for learning code embeddings through contrastive learning. It is encoder-agnostic (it can be paired with different encoder architectures) and language-agnostic (it can be applied to different programming languages), which makes it flexible and scalable.
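
The core of contrastive learning can be written as a loss over embeddings. Below is a minimal sketch of the widely used InfoNCE objective for a single anchor in plain Python; it illustrates the general idea and is not necessarily the exact loss or training setup TransformCode uses. The two-dimensional vectors are toy values, and the temperature of 0.1 is an assumed setting.

```python
import math


def cosine(a, b):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE loss for a single anchor.

    anchor and positive embed two views of the same snippet; negatives embed
    other snippets. The loss is -log(softmax probability of the positive).
    """
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, neg) / temperature for neg in negatives]
    # Numerically stable log-sum-exp: subtract the max before exponentiating.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[0]


# Toy 2-D embeddings (made-up values for illustration).
anchor = [1.0, 0.0]
negatives = [[0.0, 1.0], [-1.0, 0.0]]
loss_close = info_nce(anchor, [0.9, 0.1], negatives)  # positive points the same way
loss_far = info_nce(anchor, [0.0, 1.0], negatives)    # positive is orthogonal
print(loss_close, loss_far)
```

Minimizing this loss pushes an encoder to make the two views of a snippet agree (as with `loss_close`) while keeping them apart from other snippets; the temperature controls how sharply the softmax separates similar from dissimilar pairs.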

TransformCode learns code embeddings in an unsupervised way, without labeled data. Its pipeline first preprocesses the source code and then applies contrastive learning, which trains the encoder to map different views of the same snippet to nearby vectors and unrelated snippets to distant ones.
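
Contrastive training needs pairs of snippets that differ in form but not in behaviour. One simple way to produce such a pair during preprocessing is to rename variables. The sketch below does this with Python's `ast` module (`ast.unparse` needs Python 3.9 or later). `RenameVariables` and `make_positive_view` are hypothetical names, and this is one illustrative transformation, not TransformCode's actual preprocessing pipeline.

```python
import ast
import builtins


class RenameVariables(ast.NodeTransformer):
    """Rename parameters and local variables to var_0, var_1, ...

    Simplification: assumes the snippet only uses its own parameters,
    its own locals, and built-ins (which are left untouched).
    """

    def __init__(self):
        self.mapping = {}

    def _new_name(self, old):
        if old not in self.mapping:
            self.mapping[old] = f"var_{len(self.mapping)}"
        return self.mapping[old]

    def visit_arg(self, node):
        node.arg = self._new_name(node.arg)
        return node

    def visit_Name(self, node):
        if not hasattr(builtins, node.id):
            node.id = self._new_name(node.id)
        return node


def make_positive_view(source):
    """Return a renamed but behaviourally identical version of the source."""
    tree = RenameVariables().visit(ast.parse(source))
    return ast.unparse(tree)


original = '''
def shout(text):
    loud = text.upper()
    return loud + "!"
'''
view = make_positive_view(original)
print(view)
```

The original snippet and `view` form a positive pair; other snippets in the same batch would serve as negatives.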

Applications of Code Embeddings

Code embeddings power a range of software engineering tasks, from code search and code completion to automated code correction and cross-lingual processing, and they are changing how developers find, write, and improve code.

1. What is code embedding?

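In the sense used throughout this article, code embedding is the process of converting code snippets into dense numerical vectors that capture their meaning. The term is also used in web publishing for converting code snippets or blocks into a format that can be easily shared, displayed, and executed within a document or webpage; the questions below use it in that second sense.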

2. How do I embed code in my website or blog?

To embed code in your website or blog, you can use various online services or plugins that offer code embedding functionality. Simply copy and paste your code snippet into the designated area and follow the instructions provided to embed it on your site.

3. Can I customize the appearance of embedded code?

Yes, many code embedding tools allow you to customize the appearance of embedded code, such as changing the font style, size, and color, adding line numbers, and adjusting the background color.

4. Are there any security concerns with code embedding?

While code embedding itself is not inherently unsafe, it is important to be cautious when embedding code from unknown or untrusted sources. Malicious code could potentially be embedded and executed on your website, leading to security vulnerabilities.
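
A common precaution when showing code on a page is to HTML-escape it, so the browser displays the snippet as text instead of interpreting any markup it contains. This is a minimal sketch with Python's standard `html.escape`; `render_code_block` is a hypothetical helper, and escaping only protects code that is meant to be displayed, not code you intentionally allow to run.

```python
import html


def render_code_block(code):
    """Wrap a snippet in <pre><code> so the browser shows it as plain text.

    html.escape converts <, >, & and quotes into HTML entities, so markup
    inside the snippet (for example a <script> tag) is displayed, not run.
    """
    return "<pre><code>" + html.escape(code) + "</code></pre>"


snippet = '<script>alert("hi")</script>'
print(render_code_block(snippet))
```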

5. How can I troubleshoot issues with embedded code?

If you encounter issues with embedded code, such as syntax errors or functionality problems, you can try troubleshooting by double-checking the code for errors, updating the embed code if necessary, and reaching out to the code embedding service provider for support.
