A New AI Coding Challenge Crowned Its First Winner, Setting New Standards for AI Software Engineering

A new AI coding competition has named its first champion, and the result sets a high bar for AI-driven software engineering.

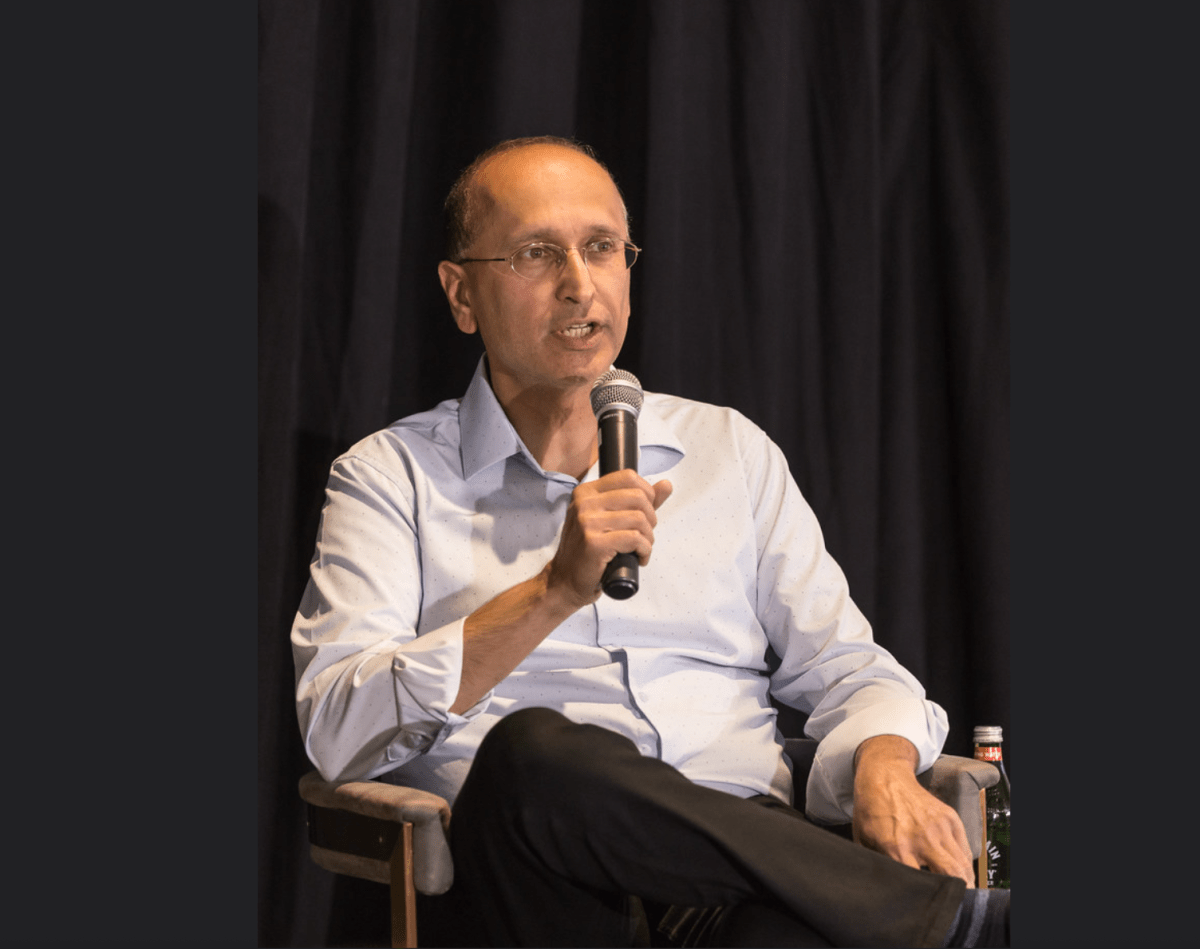

Eduardo Rocha de Andrade Claims the K Prize

On Wednesday at 5 PM PST, the Laude Institute, a nonprofit organization, announced the first winner of the K Prize—a multi-round AI coding challenge initiated by Databricks and Perplexity co-founder Andy Konwinski. The victor, Eduardo Rocha de Andrade, a Brazilian prompt engineer, will take home a prize of $50,000. Surprisingly, he secured the win by answering only 7.5% of the test questions correctly.

A Challenging Benchmark for AI Models

“We’re pleased to have established a benchmark that is genuinely challenging,” Konwinski stated. He emphasized that benchmarks should demand high standards if they are to be meaningful. He further noted, “Scores might differ if the larger labs participated with their top models. But that’s precisely the intention. The K Prize operates offline with limited computational resources, giving preference to smaller, open models. I find that exciting—it levels the playing field.”

Future Incentives for Open-Source Models

Konwinski has committed $1 million to the first open-source model that achieves a score above 90% on the K Prize assessment.

The K Prize’s Unique Approach

Like the well-known SWE-Bench, the K Prize tests models against flagged GitHub issues to measure how well they handle real-world programming problems. But where SWE-Bench draws on a fixed set of problems that models can train against, the K Prize is designed as a “contamination-free version of SWE-Bench,” using a timed entry system to guard against benchmark-specific training. For the first round, models were due by March 12th, and the organizers then built the test using only GitHub issues flagged after that date.
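The timed-entry idea can be illustrated with a minimal sketch. This is not the organizers' actual pipeline: the `Issue` record, the `contamination_free` helper, the sample repositories, and the year in the deadline are all assumptions made for illustration (the article gives only "March 12th").

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Issue:
    """A GitHub issue reduced to the fields this sketch needs (hypothetical shape)."""
    repo: str
    number: int
    flagged_on: date


def contamination_free(issues, submission_deadline):
    """Keep only issues flagged strictly after the model submission deadline.

    A model frozen at the deadline cannot have trained on issues
    that did not exist yet, which is the point of the timed entry.
    """
    return [issue for issue in issues if issue.flagged_on > submission_deadline]


# Assumed year; the article states only the month and day.
DEADLINE = date(2025, 3, 12)

# Made-up sample data, not real issues.
candidates = [
    Issue("example/repo-a", 101, date(2025, 2, 28)),  # before the deadline: excluded
    Issue("example/repo-a", 117, date(2025, 3, 12)),  # on the deadline: excluded
    Issue("example/repo-b", 42, date(2025, 3, 20)),   # after the deadline: kept
]

test_set = contamination_free(candidates, DEADLINE)
print([(issue.repo, issue.number) for issue in test_set])
```

With this sample data only the issue flagged on March 20 survives the filter. Whether an issue flagged on the deadline day itself would count is not stated in the article; the strict comparison here is one conservative choice.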

A Stark Contrast in Scoring

The 7.5% winning score stands in sharp contrast to SWE-Bench itself, where the top score is 75% on the easier ‘Verified’ test and 34% on the harder ‘Full’ test. Konwinski is not yet sure whether the gap comes from contamination in SWE-Bench or simply from the difficulty of collecting new GitHub issues, but he expects the K Prize to answer that question soon.

Future Developments and Evolving Standards

“As we conduct more rounds, we’ll gain better insight,” he told TechCrunch, “as we expect competitors to adapt to the evolving landscape every few months.”

Addressing AI’s Evaluation Challenges

It may seem surprising that AI coding tools would fall so short, but many critics of today’s benchmarks see projects like the K Prize as a necessary step toward solving AI’s growing evaluation problem.

Advancing Benchmarking Methodologies

“I’m optimistic about developing new tests for existing benchmarks,” says Princeton researcher Sayash Kapoor, who proposed a similar concept in a recent paper. “Without these experiments, we can’t definitively ascertain if the problem lies in contamination or merely in targeting the SWE-Bench leaderboard with a human in the loop.”

A Reality Check for AI Aspirations

For Konwinski, this challenge is not just about creating a better benchmark—it’s a call to action for the entire industry. “If you listen to the hype, you’d think AI doctors, lawyers, and software engineers should already be here, but that’s simply not the reality,” he asserts. “If we can’t surpass 10% on a contamination-free SWE-Bench, that serves as a stark reality check for me.”

Here are five FAQs about the recent AI coding challenge results:

FAQ 1: What was the AI coding challenge about?

Answer: The AI coding challenge aimed to evaluate the performance and capabilities of advanced AI models in solving complex coding tasks. Participants submitted their solutions, which were then assessed for accuracy, efficiency, and creativity.

FAQ 2: What were the results of the challenge?

Answer: The first results indicated that the AI models struggled significantly with coding tasks. Many submissions lacked the expected quality and often failed to meet the basic requirements of the challenges, highlighting limitations in current AI capabilities.

FAQ 3: What factors contributed to the poor results?

Answer: Several factors contributed to the disappointing outcomes, including ambiguity in problem statements, limitations in the training data, and challenges in understanding nuanced coding concepts. Additionally, the complexity of the tasks might have exceeded the current capabilities of the AI models.

FAQ 4: How will the organizers address the issues highlighted by the results?

Answer: The organizers plan to analyze the submissions in more detail, gathering feedback from participants and experts to improve future challenges. They aim to revise problem statements for clarity and consider introducing more comprehensive training resources for participants.

FAQ 5: What is the outlook for future AI coding challenges?

Answer: While the initial results were discouraging, the outlook remains positive. The organizers believe that with iterative improvements and increased collaboration within the AI community, future challenges can lead to better performance and advancements in AI coding capabilities.