Laude Institute Launches Innovative Slingshots Grants to Propel AI Research

On Thursday, the Laude Institute unveiled its inaugural Slingshots grants, focused on enhancing the development and application of artificial intelligence.

Empowering Researchers with Essential Resources

The Slingshots program serves as an accelerator for researchers, offering resources that are often hard to come by in traditional academic settings: funding, computational power, and product engineering support. In return, grant recipients commit to delivering a tangible outcome, whether a startup, an open-source codebase, or another concrete artifact.

First Cohort Tackles AI Evaluation Challenges

The program’s initial cohort comprises 15 projects, primarily targeting the intricate issue of AI evaluation. Among the featured initiatives are well-known projects such as Terminal Bench, a command-line coding benchmark, and the evolving ARC-AGI project.

Innovative Solutions from New Projects

Some projects introduce novel strategies to long-standing evaluation issues. For instance, Formula Code, developed by Caltech and UT Austin researchers, aims to assess AI agents’ capacity for optimizing existing code. Meanwhile, BizBench from Columbia proposes a comprehensive benchmark for evaluating “white-collar AI agents.” Additional grants are dedicated to exploring new frameworks for reinforcement learning and model compression.

Dynamic Competition: The CodeClash Initiative

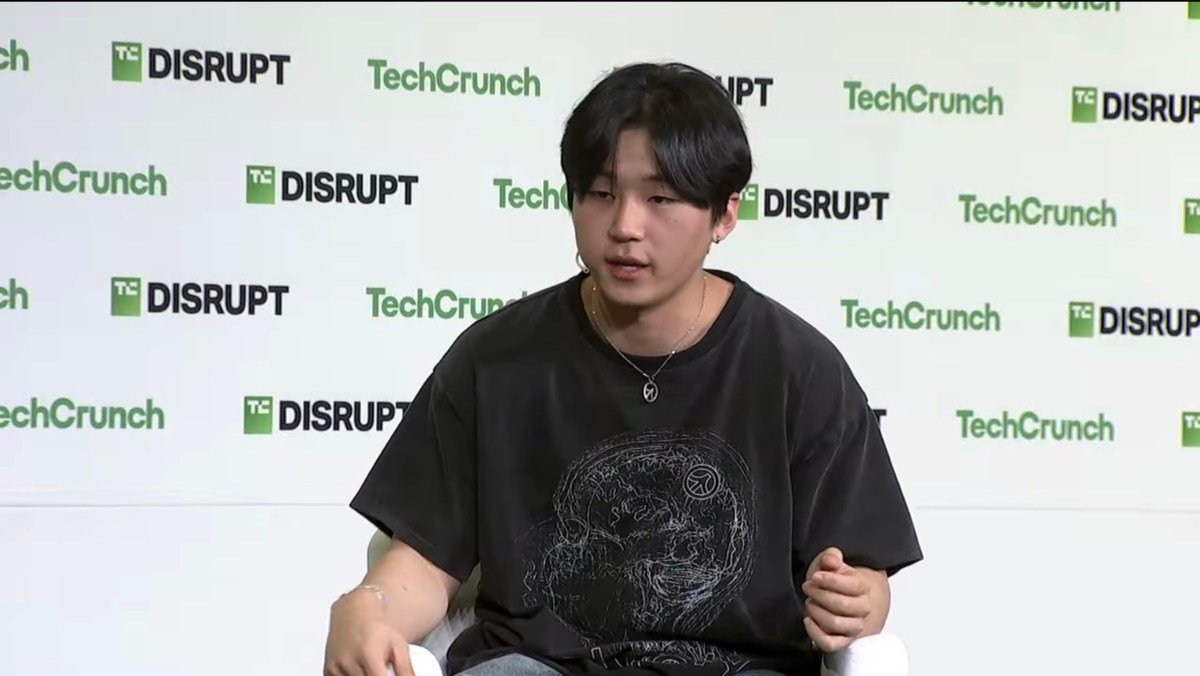

John Boda Yang, co-founder of SWE-Bench, is leading a new initiative called CodeClash as part of this cohort. Drawing inspiration from SWE-Bench’s success, CodeClash aims to evaluate code through an engaging, competition-focused framework.

Insights from Industry Experts

“I believe that ongoing evaluations based on core third-party benchmarks drive progress,” Yang shared with TechCrunch. “My concern is the potential future where benchmarks become too tailored to specific companies.”

Here are five FAQs regarding the Laude Institute’s announcement of the first batch of "Slingshots" AI grants:

FAQ 1: What are the "Slingshots" AI grants?

Answer: The "Slingshots" grants are an accelerator-style program from the Laude Institute that gives researchers funding, computational power, and product engineering support. In return, recipients commit to delivering a tangible outcome, such as a startup or an open-source codebase.

FAQ 2: Who is eligible to apply for these grants?

Answer: Eligibility for the "Slingshots" AI grants typically includes researchers, academics, startups, and organizations focused on AI initiatives. Specific eligibility criteria may vary, so it’s essential for potential applicants to review the guidelines provided by the Laude Institute.

FAQ 3: How much funding is available through the "Slingshots" AI grants?

Answer: While the exact amount of funding may vary by project, the "Slingshots" AI grants offer significant financial support to selected projects. Interested applicants can find detailed information on the funding range in the grant guidelines available on the Laude Institute’s website.

FAQ 4: What types of projects are prioritized for funding?

Answer: The first cohort of 15 projects primarily targets AI evaluation, with benchmarks such as Terminal Bench, ARC-AGI, Formula Code, BizBench, and CodeClash. Additional grants explore new frameworks for reinforcement learning and model compression.

FAQ 5: How can interested applicants apply for the grants?

Answer: Interested applicants can apply for the "Slingshots" AI grants by visiting the Laude Institute’s official website. There, they will find the application form and guidelines, including deadlines and submission requirements. It’s recommended to prepare a comprehensive proposal outlining the project’s goals, methodology, and expected impact.