The Rise of Deepfakes: A Dangerous Game of Deception

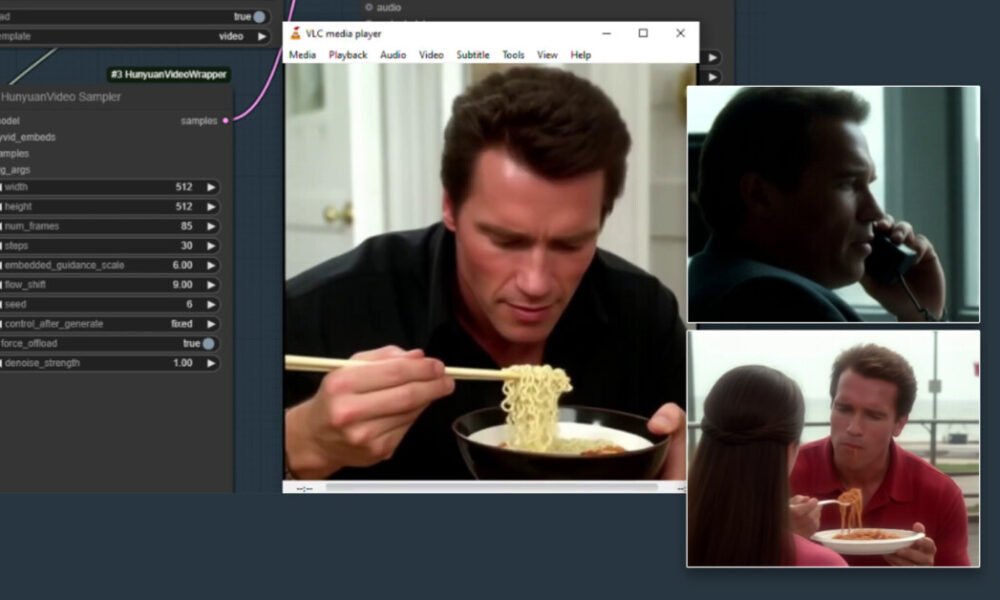

In a world where technology advances rapidly, deepfakes have emerged as a controversial and potentially dangerous innovation. These hyperrealistic digital forgeries, created with sophisticated Artificial Intelligence (AI) techniques such as Generative Adversarial Networks (GANs), can mimic real people's appearances and movements with eerie accuracy.

Initially a niche application, deepfakes have quickly gained traction, blurring the line between reality and fiction. While the entertainment industry utilizes deepfakes for visual effects and creative storytelling, the darker implications are concerning. Hyperrealistic deepfakes have the potential to undermine the integrity of information, erode public trust, and disrupt social and political systems. They are becoming tools for spreading misinformation, manipulating political outcomes, and damaging personal reputations.

The Origins and Evolution of Deepfakes

Deepfakes harness advanced AI techniques to create incredibly realistic digital forgeries. By training neural networks on vast datasets of images and videos, these techniques enable the generation of synthetic media that closely mirrors real-life appearances and movements. The introduction of GANs in 2014 was a significant milestone, allowing for the creation of more sophisticated and hyperrealistic deepfakes.

GANs consist of two neural networks, the generator and the discriminator, working in tandem. The generator produces synthetic images, typically starting from random noise, while the discriminator tries to distinguish real images from generated ones. Each network is trained against the other: the generator learns to fool the discriminator, and the discriminator learns not to be fooled. Through this adversarial process, both networks improve, resulting in highly realistic synthetic media.
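To make the adversarial setup concrete, here is a minimal sketch of the loss functions from the original GAN formulation, written in plain Python. It assumes the discriminator outputs a probability that its input is real; the function names and sample scores are hypothetical and not taken from any particular deepfake tool. The discriminator is trained to maximize log D(x) + log(1 - D(G(z))), while the generator is trained to push D(G(z)) toward 1.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    d_real: discriminator probabilities on real images (target label 1)
    d_fake: discriminator probabilities on generated images (target label 0)
    Minimizing this is equivalent to maximizing log D(x) + log(1 - D(G(z))).
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake):
    """Non-saturating generator loss: minimize -log D(G(z)),
    i.e. push the discriminator to label generated images as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Early in training: the discriminator easily spots the fakes.
print(discriminator_loss([0.9, 0.95], [0.1, 0.05]))  # low: D is winning
print(generator_loss([0.1, 0.05]))                   # high: G is losing

# At the theoretical equilibrium, D outputs 0.5 for every input.
print(discriminator_loss([0.5], [0.5]))  # 2 * ln(2), about 1.386
print(generator_loss([0.5]))             # ln(2), about 0.693
```

At equilibrium the generated samples are statistically indistinguishable from real data, so the best the discriminator can do is output 0.5, which is why the last two lines print 2 ln 2 and ln 2.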

Other deep learning architectures have further enhanced the realism of deepfakes. Convolutional Neural Networks (CNNs) excel at capturing spatial detail within each frame, while Recurrent Neural Networks (RNNs), which carry information from one step of a sequence to the next, help improve temporal coherence, making synthesized videos smoother and more consistent over time.
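The benefit of carrying information across frames can be illustrated with a toy example. This sketch is a deliberate simplification: a fixed linear recurrence stands in for a learned RNN, the per-frame values are made-up landmark coordinates, and `flicker` is a hypothetical metric (mean absolute change between consecutive frames) used here only to quantify jitter.

```python
def flicker(frames):
    """Hypothetical jitter metric: mean absolute change between consecutive frames.
    Lower values mean a smoother, more temporally coherent sequence."""
    diffs = [abs(b - a) for a, b in zip(frames, frames[1:])]
    return sum(diffs) / len(diffs)


def recurrent_smooth(frames, alpha=0.8):
    """Carry a hidden state from frame to frame: h_t = alpha * h_(t-1) + (1 - alpha) * x_t.
    A fixed linear recurrence standing in for a learned RNN."""
    h = frames[0]
    out = [h]
    for x in frames[1:]:
        h = alpha * h + (1 - alpha) * x
        out.append(h)
    return out


# Made-up per-frame estimates of one facial landmark's x-coordinate,
# each produced independently, so they jitter around a true value of 100.
per_frame = [100, 104, 97, 103, 96, 105, 98, 102, 99, 101]
smoothed = recurrent_smooth(per_frame)

print(f"flicker without state: {flicker(per_frame):.2f}")  # 5.44
print(f"flicker with state:    {flicker(smoothed):.2f}")   # 0.61
```

A learned RNN can decide when to follow genuine motion and when to suppress noise; a fixed recurrence like this one also lags behind fast movements, which is the trade-off learned temporal models try to avoid.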

The improvement in deepfake quality can be attributed mainly to three factors: better AI algorithms, larger training datasets, and greater computational power. Deepfakes can now replicate not only facial features and expressions but also intricate details like skin texture, eye movements, and subtle gestures. The availability of extensive high-resolution data, along with powerful GPUs and cloud computing, has accelerated the development of hyperrealistic deepfakes.

The Dual-Edged Sword of Technology

While the technology behind deepfakes has legitimate applications in entertainment, education, and medicine, its potential for misuse is concerning. Hyperrealistic deepfakes can be weaponized in various ways, including political manipulation, misinformation, cybersecurity threats, and reputation damage.

For example, deepfakes can fabricate false statements or actions by public figures, potentially influencing elections and undermining democratic processes. They can also propagate misinformation, blurring the line between genuine and fake content. Synthetic faces and voices can be used to fool security systems that rely on biometric data such as face or voice recognition, posing a significant threat to personal and organizational security. Moreover, individuals and organizations can suffer significant harm from deepfakes depicting them in compromising or defamatory situations.

Real-World Impact

Several prominent cases have demonstrated the potential harm of hyperrealistic deepfakes. The 2018 deepfake video created by filmmaker Jordan Peele, in which former President Barack Obama appears to make derogatory remarks about Donald Trump (with Peele supplying the voice), raised awareness about the dangers of deepfakes and how they can spread disinformation.

Likewise, a 2019 deepfake video in which Mark Zuckerberg appeared to boast about controlling users' data was created as a critique of tech giants and their power. The 2019 Nancy Pelosi video, though not a deepfake (it was simply slowed down to make her speech sound slurred), illustrated how easily misleading content can spread and what repercussions it can have. In 2021, a series of deepfake videos of actor Tom Cruise went viral on TikTok, demonstrating how readily hyperrealistic deepfakes can capture public attention. These instances underscore the psychological and societal implications of deepfakes, including distrust in digital media and heightened polarization and conflict.

Psychological and Societal Implications

Beyond immediate threats to individuals and institutions, hyperrealistic deepfakes have broader psychological and societal implications. Distrust in digital media can lead to the “liar’s dividend,” in which the mere possibility that content is fake allows people to dismiss genuine evidence as fabricated.

As deepfakes become more prevalent, public trust in media sources may decline. People may grow skeptical of all digital content, undermining the credibility of legitimate news organizations. This distrust can exacerbate societal divisions and polarize communities, making constructive dialogue and problem-solving more challenging.

Misinformation and fake news amplified by deepfakes can also deepen existing divides, making it harder for communities to come together to address shared challenges.

Legal and Ethical Challenges

The rise of hyperrealistic deepfakes presents new challenges for legal systems worldwide. Legislators and law enforcement agencies must find ways to define and regulate digital forgeries while balancing security needs with the protection of free speech and privacy rights.

Developing effective legislation to combat deepfakes is difficult. Laws must be precise enough to target malicious actors without hindering innovation or infringing on free speech, which requires careful deliberation and collaboration among legal experts, technologists, and policymakers. In the United States, for instance, the proposed DEEPFAKES Accountability Act would require creators to disclose the artificial nature of deepfakes and would impose penalties for failing to do so in certain harmful contexts; the bill has been introduced in Congress but has not been enacted. Elsewhere, China has adopted rules governing “deep synthesis” technologies, and the European Union's AI Act includes transparency obligations requiring that deepfakes be disclosed as artificially generated or manipulated.

Combatting the Deepfake Threat

Addressing the threat of hyperrealistic deepfakes requires a comprehensive approach involving technological, legal, and societal measures.

Technological solutions include detection algorithms that identify deepfakes by analyzing inconsistencies in lighting, shadows, and facial movements; digital watermarking to verify media authenticity; and blockchain technology to provide a decentralized and immutable record of media provenance.
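The provenance idea can be sketched with a simple hash chain, the core data structure behind blockchain ledgers. This is a deliberately simplified, hypothetical example: it has no distributed consensus, which a real blockchain provides, and no digital signatures, which provenance standards such as C2PA rely on. Each record commits to the SHA-256 hash of a media file and to the previous record, so altering either the media or the recorded history is detectable. All function names here are illustrative.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def _record_hash(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same body always hashes the same way.
    return sha256_hex(json.dumps(body, sort_keys=True).encode())


def append_record(chain, media_bytes, note):
    """Append a record committing to the media's hash and the previous record."""
    prev_hash = chain[-1]["record_hash"] if chain else GENESIS
    body = {"media_hash": sha256_hex(media_bytes), "note": note, "prev_hash": prev_hash}
    chain.append({**body, "record_hash": _record_hash(body)})


def verify_chain(chain):
    """Recompute every record hash and check each link to its predecessor."""
    prev_hash = GENESIS
    for rec in chain:
        body = {k: rec[k] for k in ("media_hash", "note", "prev_hash")}
        if rec["prev_hash"] != prev_hash or _record_hash(body) != rec["record_hash"]:
            return False
        prev_hash = rec["record_hash"]
    return True


def is_registered(chain, media_bytes):
    """Check whether these exact bytes were recorded in the chain."""
    h = sha256_hex(media_bytes)
    return any(rec["media_hash"] == h for rec in chain)


chain = []
original = b"placeholder bytes standing in for a video file"
append_record(chain, original, "captured by newsroom camera")
append_record(chain, original, "published unedited")

print(verify_chain(chain))                    # True
print(is_registered(chain, original))         # True
print(is_registered(chain, original + b"x"))  # False: any edit changes the hash

chain[0]["note"] = "captured elsewhere"       # tamper with the history
print(verify_chain(chain))                    # False
```

One limitation worth noting: exact hashes change under any re-encoding, so a legitimately recompressed copy would also fail the `is_registered` check.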

Legal and regulatory measures involve passing laws to address deepfake creation and distribution, and establishing regulatory bodies to monitor and respond to deepfake-related incidents.

Societal and educational initiatives include media literacy programs to help individuals critically evaluate content and public awareness campaigns to educate citizens about deepfakes. Furthermore, collaboration among governments, tech firms, academia, and civil society is vital to effectively combat the deepfake threat.

The Bottom Line

Hyperrealistic deepfakes pose a significant threat to our perception of truth and reality. While they offer exciting possibilities in entertainment and education, their potential for misuse is alarming. A multifaceted approach involving advanced detection technologies, robust legal frameworks, and comprehensive public awareness is essential to combat this threat.

By fostering collaboration among technologists, policymakers, and society, we can mitigate these risks and uphold information integrity in the digital age. It is a collective endeavor to ensure that innovation does not compromise trust and truth.

1. What are hyperrealistic deepfakes?

Hyperrealistic deepfakes are highly convincing synthetic or manipulated audio and video, typically created with artificial intelligence techniques that alter a person's facial expressions, movements, or voice to produce realistic but fake content.

2. How are hyperrealistic deepfakes created?

Hyperrealistic deepfakes are created using deep learning models, such as GANs and autoencoders, that are trained on images, video, or audio of a target person. The trained model can then generate a realistic-looking depiction of a person who may not exist, or make a real person appear to say or do things they never did.

3. What are the potential dangers of hyperrealistic deepfakes?

One major danger of hyperrealistic deepfakes is the potential for spreading misinformation or fake news, as these videos can be used to convincingly manipulate what viewers perceive as reality. This can have serious consequences in politics, journalism, and social media.

4. How can you spot a hyperrealistic deepfake?

Spotting a hyperrealistic deepfake can be difficult, as they are designed to be highly convincing. However, some signs to look out for include inconsistencies in facial movements, unnatural lighting or shadows, and unusual behavior or speech patterns that may not match the person being portrayed.
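One frequently discussed example of a facial-movement check is blink analysis: some early deepfakes were observed to blink unusually rarely. The sketch below computes the eye aspect ratio (EAR), a measure from the blink-detection literature, from six eye landmarks, then counts blinks in a sequence of per-frame EAR values. It assumes the landmarks come from a separate face-landmark detector (not shown); the 0.2 threshold, the two-frame minimum, and the synthetic data are illustrative assumptions. Modern deepfakes often blink normally, so a result like this is at best one weak signal.

```python
import math


def eye_aspect_ratio(eye):
    """eye: six (x, y) landmarks p1..p6 around the eye, with p1 and p4 at the corners.
    EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|); it drops toward 0 when the eye closes."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames with EAR below threshold."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress at the end of the clip
        blinks += 1
    return blinks


def blinks_per_minute(ear_series, fps):
    return count_blinks(ear_series) * fps * 60.0 / len(ear_series)


# Toy landmark geometry for an open and a nearly closed eye.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]
print(round(eye_aspect_ratio(open_eye), 3))    # 0.667
print(round(eye_aspect_ratio(closed_eye), 3))  # 0.067

# Synthetic 10-second clip at 30 fps with three 3-frame blinks.
fps = 30
ears = [0.3] * (10 * fps)
for start in (50, 150, 250):
    for i in range(start, start + 3):
        ears[i] = 0.1
print(count_blinks(ears))            # 3
print(blinks_per_minute(ears, fps))  # 18.0
```

In practice a detector would compare the measured rate against what is typical for the person and setting, and combine it with other cues rather than rely on it alone.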