Meta’s $14.3 Billion Investment in Scale AI Faces Early Challenges

In June, Meta made headlines with its significant $14.3 billion investment in data-labeling company Scale AI, bringing aboard CEO Alexandr Wang and key executives to helm Meta Superintelligence Labs (MSL). However, signs of strain in their partnership are surfacing.

Executive Departures Point to Tension

One notable departure is Ruben Mayer, a former Senior Vice President at Scale AI, who left Meta after just two months. During his brief tenure, Mayer managed AI data operations teams but was reportedly not part of TBD Labs, MSL’s core unit, where top researchers from OpenAI have congregated.

Mayer disputes this account, contending that he played a vital role in establishing the lab from day one and that he was content with his experience at Meta. He also stressed that he did not report directly to Wang, despite earlier claims suggesting otherwise.

Meta Expands Data Labeling Partnerships

Sources indicate that TBD Labs is working with several third-party data vendors, including Mercor and Surge. The shift raises questions about Meta’s partnership with Scale AI and diminishes Scale AI’s role despite Meta’s substantial investment.

The Growing Need for Quality Data

While traditional crowdsourcing methods powered Scale AI’s early business model, the complexity of modern AI requires data to be labeled by highly specialized professionals. This has positioned competitors like Surge and Mercor to thrive in the evolving landscape.

Meta’s Diverse Vendor Strategy

Although a Meta spokesperson denied any quality concerns regarding Scale AI’s product, the fact that Meta is diversifying its data partners suggests a lack of trust in Scale AI’s offerings. Meanwhile, following Meta’s investment, other major customers such as OpenAI and Google ended their relationships with Scale AI.

Labor Cuts at Scale AI

In response to losing clients, Scale AI cut 200 employees in July, attributing the layoffs to “shifts in market demand.” The company’s new CEO, Jason Droege, indicated a pivot toward government sales; the company recently secured a $99 million contract with the U.S. Army.

Uncertain Future for Scale AI within Meta

Speculation persists over whether Meta’s investment was aimed primarily at attracting Wang’s talent rather than at bolstering Scale AI as a vital partner. Observations from MSL staff suggest that the executives who moved over from Scale AI are not heavily involved in the key operations of TBD Labs.

Challenges in Navigating Corporate Structures

Reports describe a chaotic environment within Meta’s AI unit, with new hires from Scale AI and OpenAI struggling to navigate the company’s corporate bureaucracy. Many established members of the GenAI team have seen their roles significantly diminished.

The Rocky Road of Meta’s AI Ambitions

Despite expectations that the investment would enhance Meta’s AI capabilities, the emerging tensions suggest otherwise. CEO Mark Zuckerberg has shown frustration with the team’s performance, particularly after the lackluster launch of the Llama 4 AI model.

A Drive to Recruit Top Talent

To remedy this, Zuckerberg is aggressively recruiting AI talent from leading firms such as OpenAI, Google DeepMind, and Anthropic. Meta has also recently acquired voice startups, including Play AI and WaveForms AI.

Meta’s Massive Infrastructure Plans

With ambitions high, Meta announced plans for extensive data center developments, including a colossal $50 billion facility named Hyperion in Louisiana, further emphasizing its commitment to AI enhancements.

Continued Departures from the AI Unit

As the situation unfolds, departures from MSL continue, with some newly hired AI researchers leaving for various reasons. Meta faces an urgent need to stabilize its operations and retain the talent crucial to its future success.

Upcoming Projects at Meta

MSL is reportedly already at work on its next-generation AI model, aiming for a launch by the end of this year.

Update: This article has been revised to include comments from Mayer, who contacted TechCrunch following publication.

Here are five FAQs regarding the emerging issues in Meta’s partnership with Scale AI:

FAQ 1: What is the nature of the partnership between Meta and Scale AI?

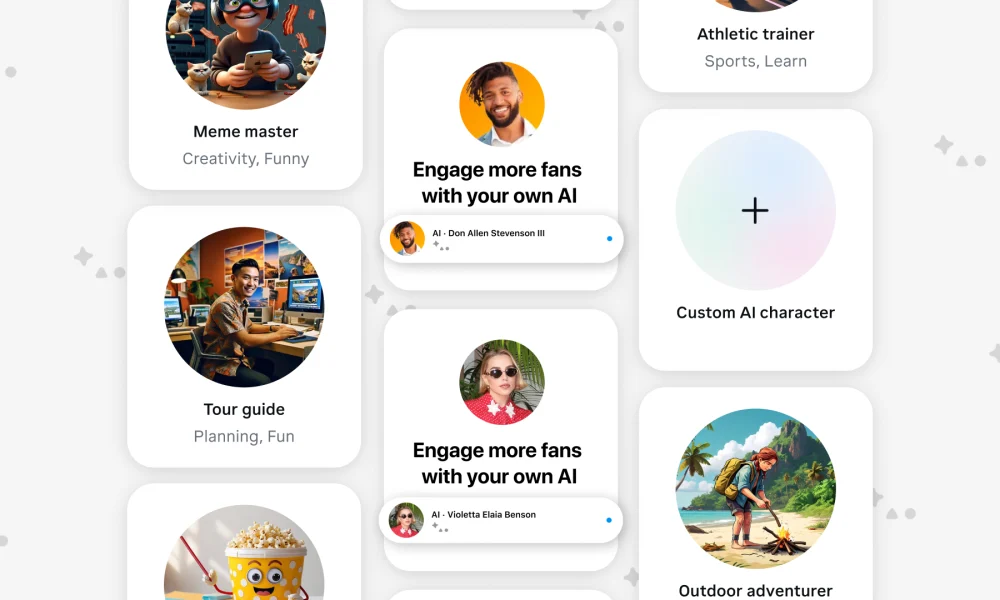

Answer: In June, Meta invested $14.3 billion in Scale AI, a data-labeling company, and brought on CEO Alexandr Wang and key executives to lead Meta Superintelligence Labs (MSL). Scale AI is also one of the vendors supplying labeled data for Meta’s AI models.

FAQ 2: What issues are arising in this partnership?

Answer: Reports point to an early executive departure, TBD Labs working with rival data vendors such as Mercor and Surge, and executives who came from Scale AI having limited involvement in the lab’s key operations. A Meta spokesperson has denied any quality concerns about Scale AI’s product.

FAQ 3: How might these issues impact Meta’s AI projects?

Answer: If these cracks continue to widen, they could lead to delays in AI project rollouts, reduced efficiency in data processing, and potentially lesser quality in AI output, which may adversely affect Meta’s competitive edge in technology.

FAQ 4: What steps are being taken to resolve the partnership issues?

Answer: Both companies are reportedly engaging in discussions to address the concerns, clarifying roles and expectations. They may implement changes in management strategies and revise contracts to better align their objectives.

FAQ 5: What does this mean for the future of AI collaboration in the tech industry?

Answer: This situation highlights the challenges of partnerships in the tech space, especially regarding data sharing and collaboration. It may encourage other companies to carefully evaluate their partnerships and consider more robust communication and contractual agreements to prevent similar issues.