<div id="mvp-content-main">

<h2>Watermarking Tools for AI Image Edits: A Double-Edged Sword</h2>

<p><em><i>New research indicates that watermarking tools designed to block AI image edits may backfire. Instead of stopping models such as Stable Diffusion from altering an image, they can help the model follow editing prompts more closely, which makes unwanted manipulations easier.</i></em></p>

<h3>The Challenge of Protecting Copyrighted Images in AI</h3>

<p>In computer vision, considerable effort goes into shielding copyrighted images from being used to train AI models or from being edited directly by them. Current protective measures mainly target <a target="_blank" href="https://www.unite.ai/understanding-diffusion-models-a-deep-dive-into-generative-ai/">Latent Diffusion Models</a> (LDMs), such as <a target="_blank" href="https://www.unite.ai/stable-diffusion-3-5-innovations-that-redefine-ai-image-generation/">Stable Diffusion</a> and <a target="_blank" href="https://www.unite.ai/flux-by-black-forest-labs-the-next-leap-in-text-to-image-models-is-it-better-than-midjourney/">Flux</a>. These systems compress an image into a latent representation, add <a target="_blank" href="https://www.unite.ai/what-is-noise-in-image-processing-a-primer/">noise</a> to it, and then iteratively remove that noise to produce a new or edited image.</p>
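
<p>To make the noise-based process described above more concrete, here is a minimal sketch of the closed-form forward "noising" step used by DDPM-style diffusion models, x_t = √ᾱ_t · x_0 + √(1 − ᾱ_t) · ε. It is an illustration under simplifying assumptions, not code from Stable Diffusion or Flux: the four-value list stands in for an image latent, the linear schedule values are illustrative, and <code>strength</code> is a hypothetical name for the fraction of the schedule applied before denoising. The denoising network itself is omitted.</p>

```python
import math
import random


def linear_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    alpha_bars = []
    running = 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def forward_noise(x0, alpha_bar_t, eps):
    """Closed-form forward step: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    if not 0.0 <= alpha_bar_t <= 1.0:
        raise ValueError("alpha_bar_t must lie in [0, 1]")
    signal_scale = math.sqrt(alpha_bar_t)
    noise_scale = math.sqrt(1.0 - alpha_bar_t)
    return [signal_scale * x + noise_scale * e for x, e in zip(x0, eps)]


# Toy example: a 4-value list stands in for an image's latent representation.
rng = random.Random(0)
latent = [0.2, -0.4, 0.9, 0.1]
eps = [rng.gauss(0.0, 1.0) for _ in latent]  # one Gaussian noise sample per value
alpha_bars = linear_alpha_bars(1000)

# Hypothetical edit "strength": how far along the schedule to noise before denoising.
strength = 0.6
t = round(strength * len(alpha_bars)) - 1
noisy = forward_noise(latent, alpha_bars[t], eps)
# A trained, prompt-conditioned denoiser (not shown) would now remove the noise
# step by step to produce the edited image.
```

<p>In image-to-image editing of this kind, a larger strength pushes the latent further toward pure noise, which gives the denoiser more room to follow the prompt and less obligation to preserve the original image.</p>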

<h3>Adversarial Noise: A Misguided Solution?</h3>

<p>By adding adversarial noise to otherwise normal-looking images, researchers have sought to mislead image detectors about an image's content, and thereby stop AI systems from exploiting copyrighted material. Interest in this approach grew after an <a target="_blank" href="https://archive.is/1f6Ua">artist backlash</a> in 2023 against the widespread use of copyrighted material by AI models.</p>
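
<p>The sketch below shows, under heavily simplified assumptions, one common way such adversarial noise is computed: projected sign-gradient descent nudges each pixel by a small step so that a model's encoding of the image drifts toward a chosen target, while keeping every pixel within a small budget <code>eps</code> of its original value so the change stays hard to see. The linear "encoder" <code>W</code>, the target latent and the function names are hypothetical. PhotoGuard, Mist and Glaze each use their own objectives against real neural encoders, and this is not their implementation.</p>

```python
def encode(W, x):
    """Hypothetical linear 'encoder' z = W x, standing in for a real image encoder."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def latent_loss(W, x, z_target):
    """Squared distance between the encoding of x and a target latent."""
    return sum((z - t) ** 2 for z, t in zip(encode(W, x), z_target))


def loss_grad(W, x, z_target):
    """Gradient of latent_loss with respect to x: 2 * W^T (W x - z_target)."""
    residual = [z - t for z, t in zip(encode(W, x), z_target)]
    return [2.0 * sum(W[r][c] * residual[r] for r in range(len(W))) for c in range(len(x))]


def sign(v):
    return (v > 0) - (v < 0)


def pgd_perturb(W, x, z_target, eps=0.03, step=0.01, iters=10):
    """Projected sign-gradient descent under an L-infinity budget eps (hypothetical helper)."""
    x_adv = list(x)
    for _ in range(iters):
        g = loss_grad(W, x_adv, z_target)
        # Step against the gradient to pull the encoding toward z_target.
        x_adv = [xa - step * sign(gi) for xa, gi in zip(x_adv, g)]
        # Project back into the eps-ball around the original pixels...
        x_adv = [min(max(xa, xi - eps), xi + eps) for xa, xi in zip(x_adv, x)]
        # ...and into the valid pixel range [0, 1].
        x_adv = [min(max(xa, 0.0), 1.0) for xa in x_adv]
    return x_adv


# Toy usage: a 3-pixel "image" and a 2-value target latent (both hypothetical).
W = [[1.0, 0.5, 0.0], [0.0, 1.0, -0.5]]
image = [0.5, 0.5, 0.5]
target = [0.0, 1.0]
protected = pgd_perturb(W, image, target)
loss_before = latent_loss(W, image, target)
loss_after = latent_loss(W, protected, target)
```

<p>The perturbation stays within the budget on every pixel, yet the encoding moves measurably toward the target. The paradox reported in the research is that, against real diffusion editors, this kind of shift does not reliably translate into protection.</p>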

<h3>Research Findings: Enhanced Exploitability of Protected Images</h3>

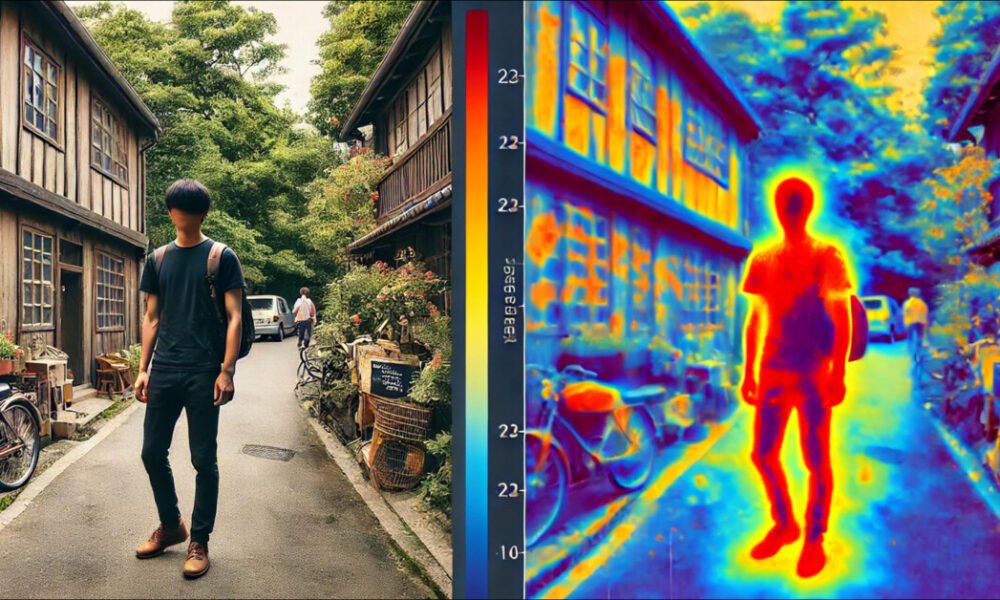

<p>Recent US research reveals a troubling paradox: rather than safeguarding images, perturbation-based protection methods may actually make them easier for AI to exploit. The authors report:</p>

<blockquote>

<p><em><i>“In various tests on both natural scenes and artwork, we found that protection methods do not fully achieve their intended goal. Conversely, in many cases, diffusion-based editing of protected images results in outputs that closely align with provided prompts.”</i></em></p>

</blockquote>

<h3>A False Sense of Security</h3>

<p>The study warns that popular protection methods may be providing a false sense of security. The authors argue that perturbation-based approaches urgently need to be re-evaluated against more robust methods.</p>

<h3>The Experimentation Process</h3>

<p>The researchers tested three prominent protection methods, <a target="_blank" href="https://arxiv.org/pdf/2302.06588">PhotoGuard</a>, <a target="_blank" href="https://arxiv.org/pdf/2305.12683">Mist</a>, and <a target="_blank" href="https://arxiv.org/pdf/2302.04222">Glaze</a>, applying each to both natural-scene images and artwork.</p>

<h3>Testing Insights: Where Protection Falls Short</h3>

<p>Across a range of AI editing scenarios, the researchers found that the added protections did not reliably hinder the editing models and, in some cases, made them more responsive to the editing prompts.</p>

<h3>Implications for Artists and Copyright Holders</h3>

<p>For artists worried about unauthorized use of their work, this research underscores the limits of current adversarial techniques: although intended as protection, these tools may unintentionally make exploitation easier.</p>

<h3>Conclusion: The Path Forward in Copyright Protection</h3>

<p>The study's central insight is that, although adversarial perturbation has been a favored tactic, it may in fact worsen the problem it is meant to solve. If existing methods prove ineffective, developing more resilient copyright protection strategies becomes a priority.</p>

<p><em><i>First published Monday, June 9, 2025</i></em></p>

</div>

Five FAQs on why protected images may be easier, not more difficult, to steal with AI:

FAQ 1: How does AI make it easier to steal protected images?

Answer: The research found that perturbation-based protections such as PhotoGuard, Mist, and Glaze do not reliably block diffusion-based editing. In many tests, editing a protected image still produced output that closely followed the editing prompt, and in some cases the added perturbations appeared to make the model more responsive to the prompt rather than less.

FAQ 2: What types of AI techniques are used to steal images?

Answer: The research discussed here focuses on latent diffusion models such as Stable Diffusion and Flux, which can edit an existing image to match a text prompt. Other generative techniques, such as generative adversarial networks (GANs), can also analyze, replicate, or create variations of existing images, often making it difficult to track or attribute ownership.

FAQ 3: What are the implications for artists and creators?

Answer: For artists, the enhanced ability of AI to replicate and manipulate images can lead to increased copyright infringement. This undermines their ability to control how their work is used or to earn income from their creations.

FAQ 4: Are there ways to protect images from AI theft?

Answer: While no method is foolproof, strategies include using digital watermarks, employing blockchain for ownership verification, and creating unique, non-reproducible elements within the artwork. However, these methods may not fully prevent AI-based theft.

FAQ 5: What should I do if I find my protected image has been stolen?

Answer: If you discover that your image has been misappropriated, gather evidence of ownership and contact the infringing party, requesting the removal of your content. You can also file a formal complaint with platforms hosting the stolen images and consider legal action if necessary.