Revolutionizing AI Computing: NVIDIA Unveils Rubin Platform and Blackwell Ultra Chip

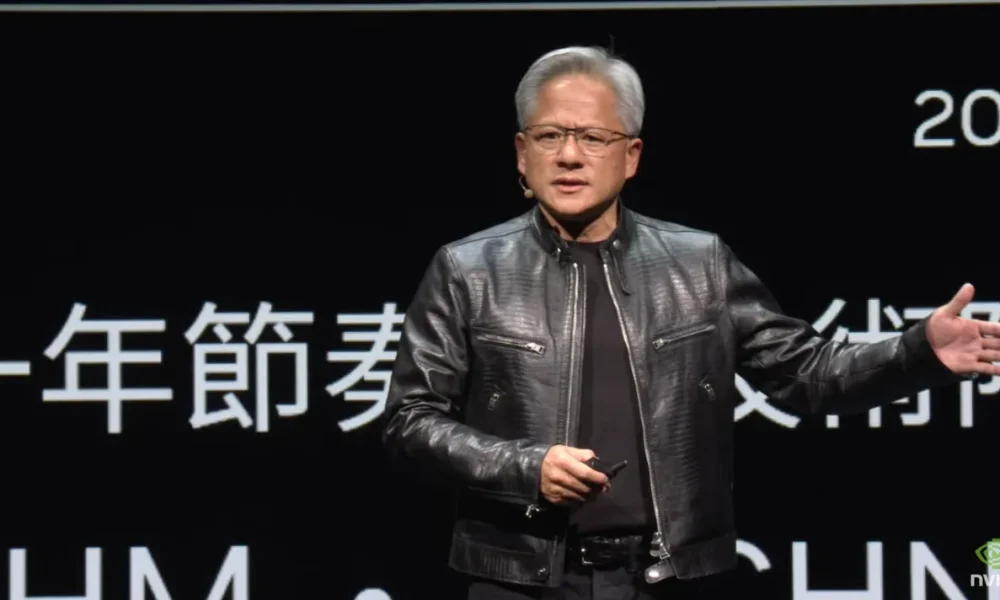

At Computex 2024 in Taipei, NVIDIA CEO Jensen Huang revealed the company’s future plans for AI computing. The spotlight was on the Rubin AI chip platform, set to debut in 2026, and the Blackwell Ultra chip, expected in 2025.

The Rubin Platform: A Leap Forward in AI Computing

As the successor to the highly anticipated Blackwell architecture, the Rubin Platform marks the next step in NVIDIA’s AI roadmap. Huang emphasized the necessity for accelerated computing to meet the growing demands of data processing, stating, “We are seeing computation inflation.” According to Huang, NVIDIA’s accelerated computing technology delivers 98% cost savings and a 97% reduction in energy consumption, figures the company uses to support its position as a frontrunner in the AI chip market.

Although specific details about the Rubin Platform were limited, Huang disclosed that it would feature new GPUs and a central processor named Vera. The platform will also integrate HBM4, the next generation of high-bandwidth memory (HBM). HBM has become a crucial bottleneck in AI accelerator production due to high demand: leading supplier SK Hynix Inc. is largely sold out of HBM through 2025, underscoring the fierce competition for this essential component.

NVIDIA and AMD Leading the Innovation Charge

NVIDIA’s shift to an annual release schedule for its AI chips underscores the escalating competition in the AI chip market. As NVIDIA strives to maintain its leadership position, other industry giants like AMD are also making significant progress. AMD Chair and CEO Lisa Su showcased the growing momentum of the AMD Instinct accelerator family at Computex 2024, unveiling a multi-year roadmap with a focus on leadership AI performance and memory capabilities.

AMD’s roadmap kicks off with the AMD Instinct MI325X accelerator, expected in Q4 2024, boasting industry-leading memory capacity and bandwidth. The company also provided a glimpse into the 5th Gen AMD EPYC processors, codenamed “Turin,” set to leverage the “Zen 5” core and scheduled for the second half of 2024. Looking ahead, AMD plans to launch the AMD Instinct MI400 series in 2026, based on the AMD CDNA “Next” architecture, promising improved performance and efficiency for AI training and inference.

Implications, Potential Impact, and Challenges

The introduction of NVIDIA’s Rubin Platform and the commitment to annual updates for AI accelerators have profound implications for the AI industry. This accelerated pace of innovation will enable more efficient and cost-effective AI solutions, driving advancements across various sectors.

While the Rubin Platform offers immense promise, memory supply may limit production and availability: demand for high-bandwidth memory is high, and SK Hynix Inc. is largely sold out through 2025. NVIDIA must balance performance, efficiency, and cost to ensure the platform remains accessible and viable for a broad range of customers. Compatibility and seamless integration with existing systems will also be crucial for adoption and user experience.

As the Rubin Platform paves the way for accelerated AI innovation, organizations must prepare to leverage these advancements, driving efficiencies and gaining a competitive edge in their industries.

1. What is the NVIDIA Rubin platform?

The NVIDIA Rubin platform is a next-generation AI chip platform from NVIDIA and the successor to the Blackwell architecture. It will feature new GPUs, a central processor named Vera, and HBM4 memory.

2. What makes the NVIDIA Rubin platform different from other AI chips?

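NVIDIA has shared few specifics so far. What is known is that Rubin pairs new GPUs with a new central processor, Vera, and moves to HBM4, the next generation of high-bandwidth memory. It is also part of NVIDIA’s shift to an annual release schedule for its AI chips.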

3. How can the NVIDIA Rubin platform benefit AI developers?

The NVIDIA Rubin platform is expected to give developers more computing power for building advanced and efficient AI applications, though NVIDIA has released few details about its capabilities so far.

4. Are there any specific industries or use cases that can benefit from the NVIDIA Rubin platform?

NVIDIA has not tied the Rubin platform to specific industries. It is likely to suit fields such as healthcare, autonomous vehicles, and robotics, where advanced AI capabilities are crucial.

5. When will the NVIDIA Rubin platform be available for purchase?

NVIDIA has not yet announced a specific release date for the Rubin platform, but Huang said it is set to debut in 2026.
