Revolutionizing Arabic Speech Recognition: CNTXT AI Launches Munsit

CNTXT AI has introduced Munsit, an Arabic speech recognition model that the company reports is the most accurate of its kind, outperforming systems from OpenAI, Meta, Microsoft, and ElevenLabs on standard Arabic benchmarks. Developed in the UAE and designed specifically for Arabic, Munsit is a significant step in what CNTXT calls “sovereign AI”: technology built locally to global standards.

Pioneering Research in Arabic Speech Technology

The methods behind this result are detailed in the team’s newly published paper, “Advancing Arabic Speech Recognition Through Large-Scale Weakly Supervised Learning.” The paper introduces a scalable and efficient training method that addresses the chronic shortage of labeled Arabic speech data. Using weakly supervised learning, the team built a system that raises the bar for transcription quality in both Modern Standard Arabic (MSA) and more than 25 regional dialects.

Tackling the Data Scarcity Challenge

Arabic, one of the most widely spoken languages worldwide and an official UN language, has long been deemed a low-resource language in speech recognition. This is due to its morphological complexity and the limited availability of extensive, labeled speech datasets. Unlike English, which benefits from abundant transcribed audio data, Arabic’s dialectal diversity and fragmented digital footprint have made it challenging to develop robust automatic speech recognition (ASR) systems.

Instead of waiting for slow manual transcription to catch up, CNTXT AI opted for a more scalable solution: weak supervision. Starting from a corpus of more than 30,000 hours of unlabeled Arabic audio from various sources, the team built a high-quality training dataset of 15,000 hours, one of the largest and most representative Arabic speech collections ever compiled.

Innovative Transcription Methodology

This approach did not require human annotation. CNTXT developed a multi-stage system to generate, evaluate, and filter transcriptions from several ASR models. Transcriptions were compared using Levenshtein distance to identify the most consistent results, which were later assessed for grammatical accuracy. Segments that did not meet predefined quality standards were discarded, ensuring that the training data remained reliable even in the absence of human validation. The team continually refined this process, enhancing label accuracy through iterative retraining and feedback loops.
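The consensus step can be illustrated with a minimal sketch. It is not CNTXT's actual pipeline: the function names, the normalization by string length, and the `max_avg_distance` threshold of 0.2 are all assumptions made for illustration. The idea is to keep, for each audio segment, the hypothesis that is closest on average to the other models' hypotheses, and to discard the segment when even the best candidate disagrees too much with the rest.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance between two strings (insertions, deletions, substitutions)."""
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(
                previous[j] + 1,         # delete ch_a
                current[j - 1] + 1,      # insert ch_b
                previous[j - 1] + cost,  # substitute (or match)
            ))
        previous = current
    return previous[-1]


def normalized_distance(a: str, b: str) -> float:
    """Edit distance scaled to [0, 1] by the longer string's length."""
    longest = max(len(a), len(b))
    return levenshtein(a, b) / longest if longest else 0.0


def pick_consensus(hypotheses, max_avg_distance=0.2):
    """Return the hypothesis closest on average to the others.

    Returns None when fewer than two hypotheses are given or when the best
    candidate's average distance exceeds the (hypothetical) threshold.
    """
    if len(hypotheses) < 2:
        return None
    best, best_score = None, float("inf")
    for i, hyp in enumerate(hypotheses):
        others = [h for j, h in enumerate(hypotheses) if j != i]
        score = sum(normalized_distance(hyp, o) for o in others) / len(others)
        if score < best_score:
            best, best_score = hyp, score
    return best if best_score <= max_avg_distance else None


if __name__ == "__main__":
    # Three hypothetical ASR outputs for the same segment.
    print(pick_consensus(["hello world", "hello world", "hello word"]))
    # Outputs that share nothing are rejected.
    print(pick_consensus(["abc", "xyz", "qrs"]))
```

In a fuller pipeline, the surviving transcription would then go through the grammatical check described above before being admitted to the training set.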

Advanced Technology Behind Munsit: The Conformer Architecture

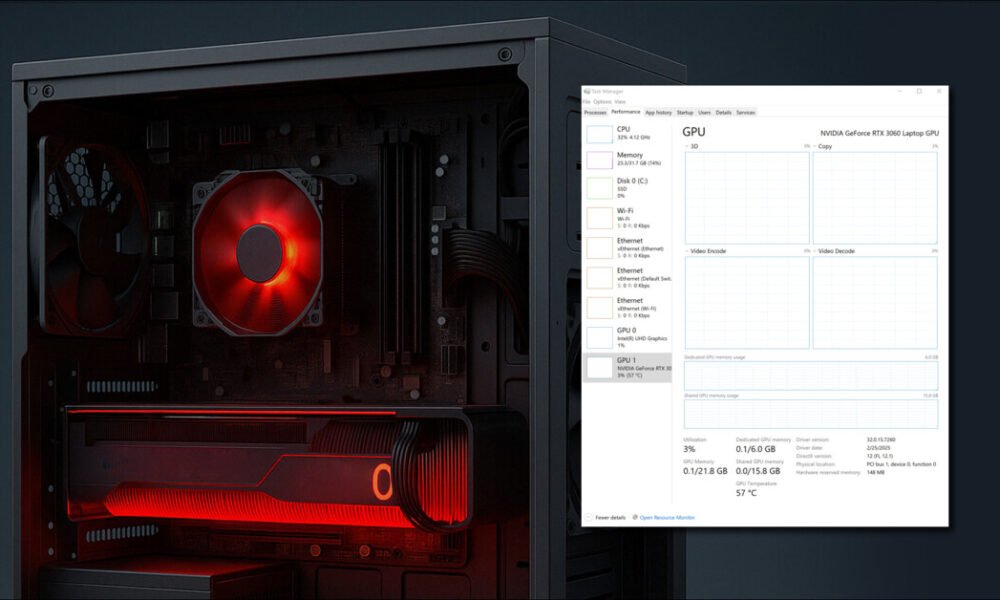

At the core of Munsit is the Conformer, a hybrid neural network architecture that combines convolutional layers, which capture local acoustic patterns, with the self-attention of transformers, which models global context. This combination lets the Conformer capture both fine phonetic detail and long-range dependencies in speech.

CNTXT AI implemented a variant of the Conformer and trained it from scratch on 80-channel mel-spectrogram inputs. The model has 18 layers and approximately 121 million parameters, and it was trained on a cluster of eight NVIDIA A100 GPUs, which allowed large batch sizes. To handle Arabic’s complex morphology, the team used a custom SentencePiece tokenizer with a vocabulary of 1,024 subword units.

Unlike conventional ASR training, which pairs each audio clip with carefully transcribed labels, CNTXT’s strategy relied on weak labels. Though less precise than human-verified labels, they were refined through a feedback loop that emphasized consensus, grammatical correctness, and lexical relevance. The model was trained with the Connectionist Temporal Classification (CTC) loss, which is well suited to speech because it does not require a frame-by-frame alignment between audio and text.
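To show how CTC copes with variable timing, here is a small sketch of greedy CTC decoding, the standard rule for turning per-frame predictions into text: take the best label per frame, collapse consecutive repeats, then drop the blank symbol. This illustrates the decoding side only, not the loss computation, and it is not confirmed to be the decoding method Munsit uses. The blank index `0` and the toy three-symbol vocabulary are assumptions.

```python
BLANK = 0  # assumed index of the CTC blank symbol


def ctc_greedy_decode(frame_scores, id_to_token, blank=BLANK):
    """Collapse per-frame scores into an output string.

    frame_scores: one list of per-label scores for each audio frame.
    id_to_token:  list mapping label index to its text token.
    """
    # 1. Pick the highest-scoring label in every frame.
    best_ids = [max(range(len(scores)), key=scores.__getitem__)
                for scores in frame_scores]

    # 2. Merge consecutive repeats, then 3. skip blanks.
    decoded = []
    previous = None
    for idx in best_ids:
        if idx != previous and idx != blank:
            decoded.append(id_to_token[idx])
        previous = idx
    return "".join(decoded)


if __name__ == "__main__":
    vocab = ["<blank>", "a", "b"]
    frames = [
        [0.10, 0.80, 0.10],  # a
        [0.10, 0.70, 0.20],  # a (repeat, merged)
        [0.90, 0.05, 0.05],  # blank (separates the two a's)
        [0.20, 0.60, 0.20],  # a
        [0.10, 0.20, 0.70],  # b
        [0.10, 0.30, 0.60],  # b (repeat, merged)
        [0.80, 0.10, 0.10],  # blank
    ]
    print(ctc_greedy_decode(frames, vocab))  # prints "aab"
```

Because repeats are merged and blanks removed, seven frames can yield three tokens. This is how a CTC-trained model handles a sound stretched over many frames without an explicit alignment.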

Benchmark Dominance of Munsit

The results are strong. Munsit was tested against leading ASR models on six Arabic test sets: SADA, Common Voice 18.0, MASC (clean and noisy subsets, counted separately), MGB-2, and Casablanca. Together they cover a wide range of dialects from across the Arab world.

Across all benchmarks, Munsit-1 achieved an average Word Error Rate (WER) of 26.68% and a Character Error Rate (CER) of 10.05%. In contrast, the best-performing version of OpenAI’s Whisper recorded an average WER of 36.86% and a CER of 17.21%. Even Meta’s SeamlessM4T fell short. Munsit outperformed all other systems in both clean and noisy environments, showing the robustness in challenging conditions that matters in settings such as call centers and public services.
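For readers unfamiliar with these metrics, the sketch below shows how WER and CER are commonly computed: the edit distance between reference and hypothesis, over words or characters, divided by the reference length and expressed as a percentage. It is a simplified illustration, not the benchmark's evaluation script. Text normalization (for example, handling of diacritics or punctuation) is omitted, and counting spaces as characters in CER is an assumption.

```python
def edit_distance(ref, hyp):
    """Minimum number of substitutions, insertions, and deletions
    needed to turn the sequence `ref` into the sequence `hyp`."""
    previous = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        current = [i]
        for j, h in enumerate(hyp, start=1):
            current.append(min(
                previous[j] + 1,                       # deletion
                current[j - 1] + 1,                    # insertion
                previous[j - 1] + (0 if r == h else 1) # substitution or match
            ))
        previous = current
    return previous[-1]


def wer(reference: str, hypothesis: str) -> float:
    """Word Error Rate in percent, relative to the reference word count."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return 100.0 * edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference: str, hypothesis: str) -> float:
    """Character Error Rate in percent (spaces counted as characters)."""
    if not reference:
        raise ValueError("reference must not be empty")
    return 100.0 * edit_distance(list(reference), list(hypothesis)) / len(reference)


if __name__ == "__main__":
    ref = "the cat sat on the mat"
    hyp = "the cat sit on mat"
    # One substitution (sat -> sit) and one deletion (the) over six words.
    print(f"WER: {wer(ref, hyp):.2f}%")
    print(f"CER: {cer(ref, hyp):.2f}%")
```

Because errors are divided by the reference length, a WER can exceed 100% when the hypothesis contains many insertions. Lower is better for both metrics.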

The gap was just as clear against proprietary systems: Munsit outperformed Microsoft Azure’s Arabic ASR models, ElevenLabs Scribe, and OpenAI’s GPT-4o transcription feature. Overall, the team reports a 23.19% improvement in WER and a 24.78% improvement in CER over the strongest open baseline, positioning Munsit as the leading system in Arabic speech recognition.

Setting the Stage for Arabic Voice AI

While Munsit-1 is already transforming transcription, subtitling, and customer support in Arabic markets, CNTXT AI views this launch as just the beginning. The company envisions a comprehensive suite of Arabic language voice technologies, including text-to-speech, voice assistants, and real-time translation—all anchored in region-specific infrastructure and AI.

“Munsit is more than just a breakthrough in speech recognition,” said Mohammad Abu Sheikh, CEO of CNTXT AI. “It’s a statement that Arabic belongs at the forefront of global AI. We’ve demonstrated that world-class AI doesn’t have to be imported—it can flourish here, in Arabic, for Arabic.”

With the emergence of region-specific models like Munsit, the AI industry is entering a new era, one that prioritizes linguistic and cultural relevance alongside technical excellence. With Munsit, CNTXT AI aims to deliver both.

Five frequently asked questions (FAQs) about CNTXT AI’s launch of Munsit:

FAQ 1: What is Munsit?

Answer: Munsit is an Arabic speech recognition system developed by CNTXT AI. It uses a Conformer-based model trained with weak supervision to transcribe spoken Arabic with high accuracy, making it a useful tool for applications such as customer service, transcription services, and accessibility solutions.

FAQ 2: How does Munsit improve Arabic speech recognition compared to existing systems?

Answer: Munsit combines a Conformer architecture with a large, diverse dataset of spoken Arabic: 15,000 hours of weakly labeled audio. This helps it handle dialects, accents, and contextual nuances, and it achieves lower error rates on standard benchmarks than the other Arabic speech recognition systems it was tested against.

FAQ 3: What are the potential applications of Munsit?

Answer: Munsit can be applied in numerous fields, including education, telecommunications, healthcare, and media. It can enhance customer support through voice-operated services, facilitate transcription for media and academic purposes, and support language learning by providing instant feedback.

FAQ 4: Is Munsit compatible with different Arabic dialects?

Answer: Yes, one of Munsit’s distinguishing features is its ability to recognize and process various Arabic dialects, ensuring accurate transcription regardless of regional variations in speech. This makes it robust for users across the Arab world.

FAQ 5: How can businesses integrate Munsit into their systems?

Answer: Businesses can integrate Munsit through CNTXT AI’s API, which provides easy access to the speech recognition capabilities. This allows companies to embed Munsit into their applications, websites, or customer service platforms seamlessly to enhance user experience and efficiency.