<div>

<h2>Silicon Valley Leaders Challenge AI Safety Advocates Amid Growing Controversy</h2>

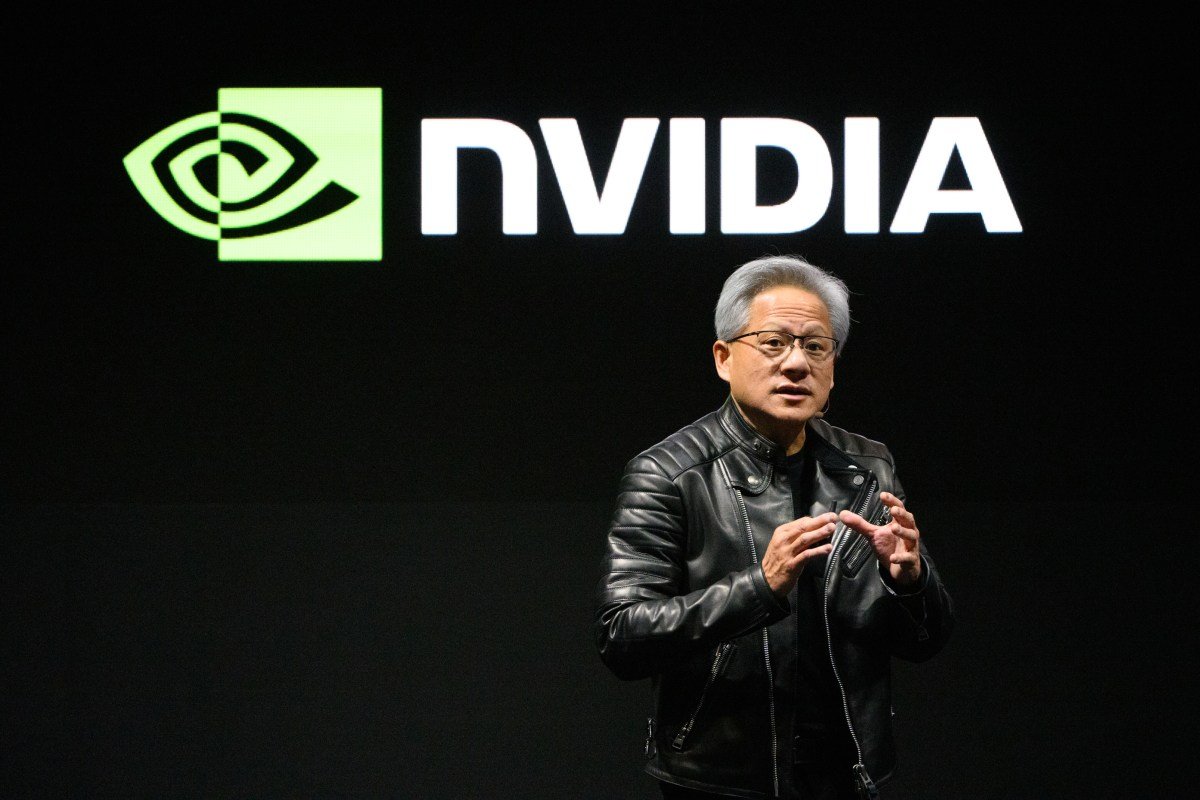

<p id="speakable-summary" class="wp-block-paragraph">This week, prominent figures from Silicon Valley, including White House AI &amp; Crypto Czar David Sacks and OpenAI Chief Strategy Officer Jason Kwon, sparked significant debate with their remarks regarding AI safety advocacy. They insinuated that some advocates are driven by self-interest rather than genuine concern for the public good.</p>

<h3>AI Safety Groups Respond to Accusations</h3>

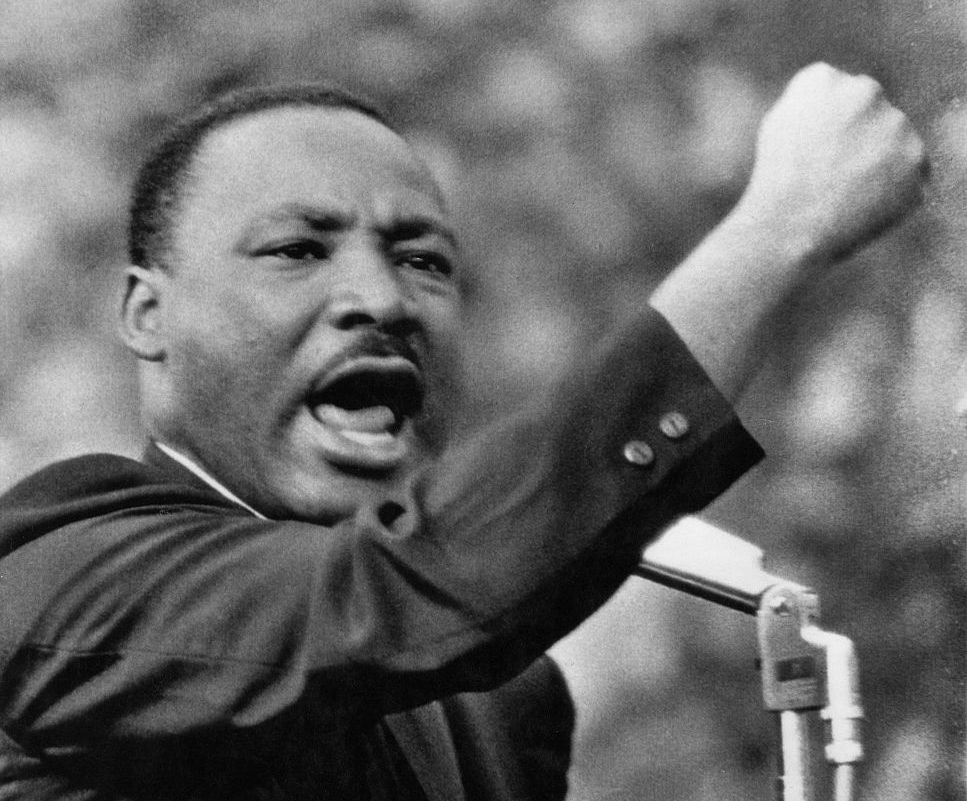

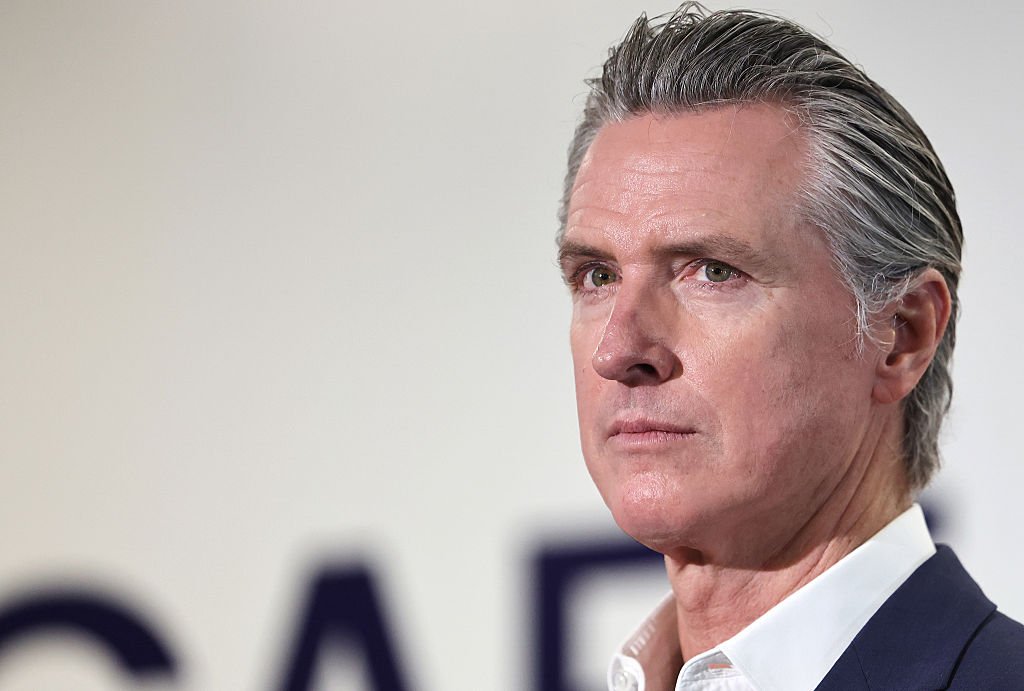

<p class="wp-block-paragraph">In conversations with TechCrunch, representatives from several AI safety organizations said the comments from Sacks and OpenAI are the latest instance of a Silicon Valley pattern of trying to intimidate critics. Last year, certain venture capitalists circulated false rumors that a California AI safety bill would lead to severe penalties for startup founders. The Brookings Institution denounced these claims as misrepresentations, but Governor Gavin Newsom ultimately vetoed the bill anyway.</p>

<h3>Intimidation Tactics Leave Nonprofits Feeling Vulnerable</h3>

<p class="wp-block-paragraph">Whether intentional or not, the statements from Sacks and OpenAI have unsettled many advocates within the AI safety community. When approached by TechCrunch, multiple nonprofit leaders asked to remain anonymous, fearing retaliation against their organizations.</p>

<h3>A Growing Divide: Responsible AI vs. Consumerism</h3>

<p class="wp-block-paragraph">This situation highlights the escalating conflict in Silicon Valley between responsible AI development and the push for mass consumer products. This week's episode of the <em>Equity</em> podcast delves deeper into these issues, including California's recent AI safety legislation and OpenAI's handling of sensitive content in ChatGPT.</p>

<p>

<iframe loading="lazy" class="tcembed-iframe tcembed--megaphone wp-block-tc23-podcast-player__embed" height="200px" width="100%" frameborder="no" scrolling="no" seamless="" src="https://playlist.megaphone.fm?e=TCML8283045754"></iframe>

</p>

<h3>Accusations of Fearmongering: The Case Against Anthropic</h3>

<p class="wp-block-paragraph">On Tuesday, Sacks took to X to accuse Anthropic of using fear tactics regarding AI risks to advance its own interests. He argued that Anthropic was leveraging societal fears around issues like unemployment and cyberattacks to push for regulations that could stifle smaller competitors. Notably, Anthropic was the only major AI company to endorse California's SB 53, which mandates safety reporting for large AI companies.</p>

<h3>Reaction to Concern: A Call for Transparency</h3>

<p class="wp-block-paragraph">Sacks’ comments came in response to a notable essay by Anthropic co-founder Jack Clark, which Clark had delivered as a speech at a recent AI safety conference. Clark expressed genuine concerns about AI's potential societal harms, but Sacks portrayed them as a calculated effort to manipulate regulation.</p>

<h3>OpenAI Targets Critics with Subpoenas</h3>

<p class="wp-block-paragraph">This week, Jason Kwon of OpenAI explained why the company has issued subpoenas to AI safety nonprofits, including Encode, which publicly criticized OpenAI’s reorganization after Elon Musk sued the company. Kwon said the subpoenas were prompted by questions about how the organizations opposing OpenAI are funded and whether they were coordinating with one another.</p>

<h3>The AI Safety Movement: A Growing Concern for Silicon Valley</h3>

<p class="wp-block-paragraph">Brendan Steinhauser, CEO of Alliance for Secure AI, suggests that OpenAI’s approach is more about silencing criticism than addressing legitimate safety concerns. His view lands at a time when Silicon Valley appears increasingly uneasy that the AI safety community is becoming more vocal and influential.</p>

<h3>Public Sentiment and AI Anxiety</h3>

<p class="wp-block-paragraph">Recent studies indicate a significant portion of the American population feels more apprehensive than excited about AI technology. Major concerns include job displacement and the risk of deepfakes, yet discussions about catastrophic risks from AI often dominate the safety dialogue.</p>

<h3>Balancing Growth with Responsibility</h3>

<p class="wp-block-paragraph">The ongoing debate suggests a crucial balancing act: addressing safety concerns while sustaining rapid growth in AI development. As the safety movement gathers momentum into 2026, Silicon Valley's defensive strategies may indicate the rising effectiveness of these advocacy efforts.</p>

</div>

Here are five FAQs regarding how Silicon Valley spooks AI safety advocates:

FAQ 1: Why are AI safety advocates concerned about developments in Silicon Valley?

Answer: AI safety advocates worry that rapid advancements in AI technology without proper oversight could lead to unintended consequences, such as biased algorithms, potential job displacement, or even existential risks if highly autonomous systems become uncontrollable.

FAQ 2: What specific actions are being taken by companies in Silicon Valley that raise red flags?

Answer: Many companies are prioritizing rapid product development and deployment of AI technologies, often opting for innovation over robustness and safety. This includes releasing AI tools that may not undergo thorough safety evaluations, which can result in high-stakes errors.

FAQ 3: How does the competitive environment in Silicon Valley impact AI safety?

Answer: The intensely competitive atmosphere encourages companies to expedite AI advancements to gain market share. This can lead to shortcuts in safety measures and ethical considerations, as firms prioritize speed and profit over thorough testing and responsible practices.

FAQ 4: What organizations are monitoring AI development in Silicon Valley?

Answer: Various nonprofits, academic institutions, and regulatory bodies are actively monitoring AI developments. Organizations like the Partnership on AI advocate for ethical standards and safer AI practices, urging tech companies to adopt responsible methodologies.

FAQ 5: How can AI safety advocates influence change in Silicon Valley?

Answer: AI safety advocates can influence change by raising public awareness, engaging in policy discussions, promoting ethical AI guidelines, and collaborating with tech companies to establish best practices. Advocacy efforts through research and public dialogue can encourage more responsible innovation in the field.