Revolutionizing Help Desk Automation: The Rise of AI-Driven Solutions

The help desk automation sector is on the verge of transformation, driven by advanced AI technologies. While established players like Zendesk, ServiceNow, and Freshworks currently lead the market, a wave of innovative startups is positioning itself to disrupt the status quo.

Introducing Risotto: A New Challenger in Help Desk Automation

Risotto is one of those startups gaining momentum. The company recently announced a $10 million seed round led by Bonfire Ventures, with participation from 645 Ventures, Y Combinator, Ritual Capital, and Surgepoint Capital.

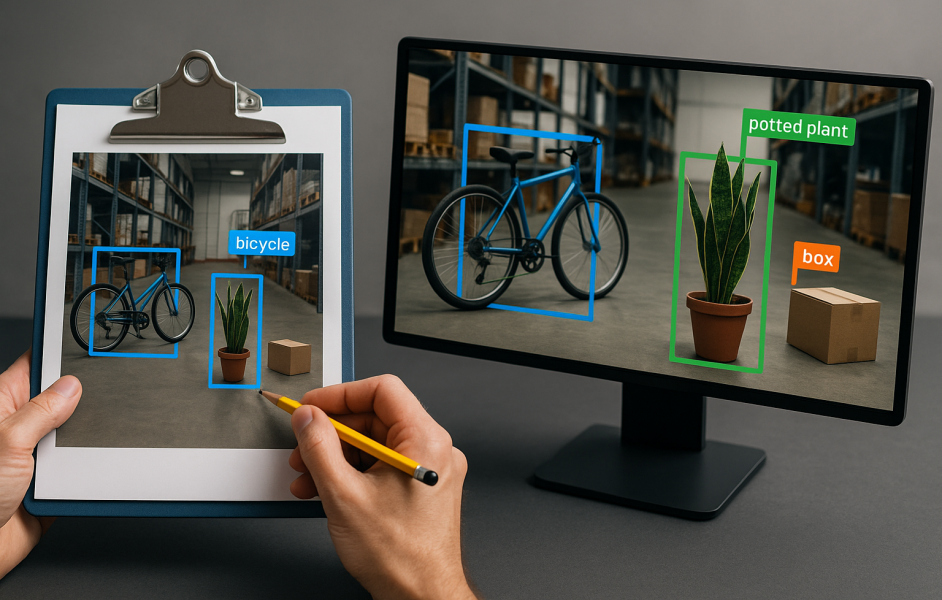

Smart Solutions for Efficient Ticket Management

Risotto’s platform is designed to automate the resolution of help desk inquiries. It sits between ticket management systems like Jira and the complex internal tools needed to actually resolve tickets. Although the platform runs on a third-party foundation model, Risotto has built its own infrastructure around that model to keep results reliable. CEO Aron Solberg emphasizes the importance of robust backend systems that mitigate the unpredictability of AI models.

Enhancing AI with Real-World Training

“Our secret weapon lies in our extensive prompt libraries, evaluation suites, and a vast array of real-world scenarios that train the AI to perform reliably,” Solberg told TechCrunch.

Proven Impact: Automating Support for Gusto

Working with payroll provider Gusto, Risotto automated 60% of the company’s support tickets. Risotto currently operates within traditional ticketing systems, but it expects the help desk itself to change more fundamentally as AI reshapes how companies operate.

A Shift Towards AI-Driven Interfaces

“Although 95% of our clients still rely on conventional methods for ticket resolution, we are witnessing a trend among newer organizations where Large Language Models (LLMs) serve as the primary interface between users and technology,” Solberg noted.

Integrating with ChatGPT: The Future of Ticket Management

This shift suggests that ticket management may increasingly happen inside tools like ChatGPT for Enterprise, alongside the many other workplace tasks those assistants already handle. Risotto’s team is actively building integrations with ChatGPT for Enterprise and Gemini through streamlined MCP (Model Context Protocol) connections.


Transforming the Landscape of Help Desk Solutions

If this trend gains traction, it could redefine industry standards. Tools like Risotto would act as specialized services behind a central AI assistant, delivering more focused and dependable results than general-purpose systems. That would mark a shift in SaaS, where reliability and effective context management matter more than a user-friendly interface.

Streamlining IT Operations: A Priority for Businesses

For now, Risotto’s primary advantage lies in simplifying the chaotic landscape of various IT systems. Solberg points out, “One of our clients has four full-time employees dedicated solely to managing Jira, and that’s without even integrating AI. Their main task is just handling the platform itself.”

Here are five FAQs about Risotto’s $10M seed round and its AI-driven help desk automation:

FAQ 1: What is Risotto and what problem does it aim to solve?

Answer: Risotto is a startup that uses AI to automate the resolution of help desk tickets. Its platform sits between ticket management systems like Jira and the internal tools needed to resolve those tickets, reducing the manual effort companies spend on support and IT requests.

FAQ 2: How much funding did Risotto raise, and what is the company building next?

Answer: Risotto raised $10 million in seed funding. Beyond its current work with traditional ticketing systems, the team is developing integrations with ChatGPT for Enterprise and Gemini through MCP connections.

FAQ 3: How does Risotto use AI to improve ticketing?

Answer: Risotto builds on a third-party foundation model and surrounds it with backend infrastructure designed to counter the unpredictability of AI models. According to CEO Aron Solberg, its extensive prompt libraries, evaluation suites, and large collection of real-world scenarios are what make the AI perform reliably.

FAQ 4: Who are the investors backing Risotto’s seed funding?

Answer: The round was led by Bonfire Ventures, with participation from 645 Ventures, Y Combinator, Ritual Capital, and Surgepoint Capital.

FAQ 5: Is Risotto’s technology already in use?

Answer: Yes. Working with payroll provider Gusto, Risotto automated 60% of Gusto’s support tickets. About 95% of its clients still resolve tickets through conventional methods, but Solberg sees newer organizations adopting large language models as the primary interface between users and technology.