<div id="mvp-content-main">

<h2>A Revolutionary Collaboration: UC Merced and Adobe's Breakthrough in Human Image Completion</h2>

<p>A collaboration between the University of California, Merced, and Adobe has produced a new method for <em><i>human image completion</i></em>. The technique focuses on ‘de-obscuring’ hidden or occluded parts of images of people, with applications in areas such as <a target="_blank" href="https://archive.is/ByS5y">virtual try-ons</a>, animation, and photo editing.</p>

<div id="attachment_216621" style="width: 1001px" class="wp-caption alignnone">

<img decoding="async" aria-describedby="caption-attachment-216621" class="wp-image-216621" src="https://www.unite.ai/wp-content/uploads/2025/04/fashion-application-completeme.jpg" alt="Example of human image completion showing novel clothing imposed into existing images." width="991" height="532" />

<p id="caption-attachment-216621" class="wp-caption-text"><em>CompleteMe can insert novel clothing into existing images using reference images. These examples are sourced from the extensive supplementary materials.</em> <a href="https://liagm.github.io/CompleteMe/pdf/supp.pdf">Source</a></p>

</div>

<h3>Introduction to CompleteMe: Reference-based Human Image Completion</h3>

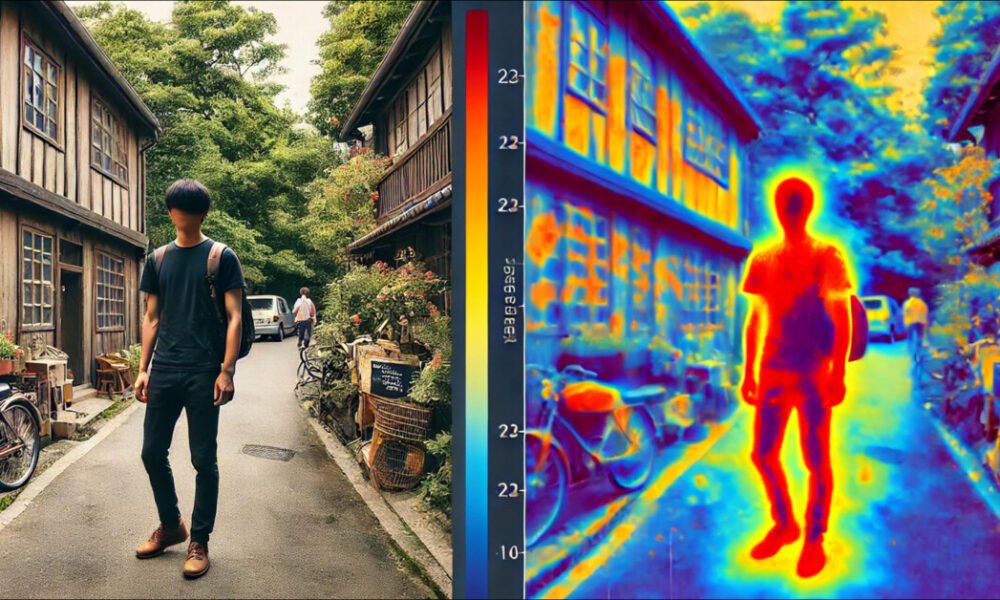

<p>The new approach, titled <em><i>CompleteMe: Reference-based Human Image Completion</i></em>, uses supplementary reference images to guide the replacement of hidden or missing parts of a person in an image, which makes it well suited to fashion-oriented applications:</p>

<div id="attachment_216622" style="width: 963px" class="wp-caption alignnone">

<img loading="lazy" decoding="async" aria-describedby="caption-attachment-216622" class="wp-image-216622" src="https://www.unite.ai/wp-content/uploads/2025/04/completeme-example.jpg" alt="The CompleteMe system integrates reference content into obscured parts of images." width="953" height="414" />

<p id="caption-attachment-216622" class="wp-caption-text"><em>CompleteMe adeptly integrates reference content into obscured parts of human images.</em></p>

</div>

<h3>Advanced Architecture and Focused Attention</h3>

<p>Built on a dual <a target="_blank" href="https://www.youtube.com/watch?v=NhdzGfB1q74">U-Net</a> architecture with a <em><i>Region-Focused Attention</i></em> (RFA) block, the CompleteMe system directs the model's attention to the relevant regions of the reference images during the restoration process.</p>

<h3>Benchmarking Performance and User Study Results</h3>

<p>The researchers have also introduced a challenging new benchmark for evaluating reference-based completion tasks.</p>

<p>In the authors' tests, CompleteMe outperformed competing methods across a range of metrics, while rival methods often struggled to make sense of the reference images:</p>

<div id="attachment_216623" style="width: 944px" class="wp-caption alignnone">

<img loading="lazy" decoding="async" aria-describedby="caption-attachment-216623" class="wp-image-216623" src="https://www.unite.ai/wp-content/uploads/2025/04/people-in-people.jpg" alt="An example depicting challenges faced by the AnyDoor method in interpreting reference images." width="934" height="581" />

<p id="caption-attachment-216623" class="wp-caption-text"><em>Challenges encountered by rival methods, like AnyDoor, in interpreting reference images.</em></p>

</div>

<p>The authors state:</p>

<blockquote>

<em><i>Extensive experiments on our benchmark demonstrate that CompleteMe outperforms state-of-the-art methods, both reference-based and non-reference-based, in terms of quantitative metrics, qualitative results, and user studies.</i></em>

</blockquote>

<blockquote>

<em><i>In challenging scenarios involving complex poses and intricate clothing patterns, our model consistently achieves superior visual fidelity and semantic coherence.</i></em>

</blockquote>

<h3>Project Availability and Future Directions</h3>

<p>The project's <a target="_blank" href="https://github.com/LIAGM/CompleteMe">GitHub repository</a> currently contains no publicly available code, and the initiative otherwise maintains only a modest <a target="_blank" href="https://liagm.github.io/CompleteMe/">project page</a>, which may indicate that the system is intended to remain proprietary.</p>

<div id="attachment_216624" style="width: 963px" class="wp-caption alignnone">

<img loading="lazy" decoding="async" aria-describedby="caption-attachment-216624" class="wp-image-216624" src="https://www.unite.ai/wp-content/uploads/2025/04/further-examples.jpg" alt="An example demonstrating the effectiveness of CompleteMe against previous methods." width="953" height="172" />

<p id="caption-attachment-216624" class="wp-caption-text"><em>Further examples from the study highlighting the new system's performance against prior methods.</em></p>

</div>

<h3>Understanding the Methodology Behind CompleteMe</h3>

<p>The CompleteMe framework pairs a Reference U-Net, which brings the additional reference material into the process, with a cohesive U-Net that handles the broader completion process:</p>

<div id="attachment_216625" style="width: 900px" class="wp-caption alignnone">

<img loading="lazy" decoding="async" aria-describedby="caption-attachment-216625" class="wp-image-216625" src="https://www.unite.ai/wp-content/uploads/2025/04/schema-completeme.jpg" alt="Conceptual schema for CompleteMe." width="890" height="481" />

<p id="caption-attachment-216625" class="wp-caption-text"><em>The conceptual schema for CompleteMe.</em></p>

</div>

<p>The system encodes the masked input image alongside multiple reference images, extracting the spatial features needed for restoration. The reference features then pass through the RFA block, so that only relevant regions are attended to during the completion phase.</p>
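
<p>As a rough illustration of what attending only to relevant reference regions can look like, the sketch below implements ordinary scaled dot-product cross-attention with a boolean mask over the reference tokens. It is not the authors' RFA block, whose internals are not described in this article. The function name <code>region_focused_attention</code>, its parameters, and the use of a hard boolean mask are all assumptions made for illustration.</p>

```python
import math


def region_focused_attention(queries, ref_keys, ref_values, ref_region_mask):
    """Masked cross-attention sketch (hypothetical, not the paper's RFA block).

    queries:         list of query vectors, one per token of the masked input
    ref_keys:        list of key vectors, one per reference token
    ref_values:      list of value vectors, one per reference token
    ref_region_mask: list of bools, True where a reference token is relevant
    """
    if not any(ref_region_mask):
        raise ValueError("at least one reference token must be marked relevant")

    scale = 1.0 / math.sqrt(len(queries[0]))
    value_dim = len(ref_values[0])
    outputs = []
    for q in queries:
        # Scaled dot-product score per reference token; irrelevant tokens
        # get -inf so that they receive zero weight after the softmax.
        scores = [
            scale * sum(qi * ki for qi, ki in zip(q, k)) if keep else float("-inf")
            for k, keep in zip(ref_keys, ref_region_mask)
        ]
        peak = max(scores)  # finite, because at least one token is kept
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Weighted sum of the reference values.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, ref_values)) for j in range(value_dim)]
        )
    return outputs


if __name__ == "__main__":
    # Toy example: the third reference token is outside the relevant region.
    result = region_focused_attention(
        queries=[[1.0, 0.0]],
        ref_keys=[[1.0, 0.0], [0.0, 1.0], [5.0, 5.0]],
        ref_values=[[1.0, 0.0], [0.0, 1.0], [100.0, 100.0]],
        ref_region_mask=[True, True, False],
    )
    print(result)
```

<p>In this toy setup the third reference token would dominate an unmasked attention step because of its large key, but the mask removes it entirely, so the output is built only from the two tokens marked as relevant.</p>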

<h3>Comparison with Previous Methods</h3>

<p>Earlier reference-based image inpainting approaches have relied mainly on semantic-level encoders. CompleteMe instead employs a specialized structure aimed at better identity preservation and detail reconstruction.</p>

<p>The approach accepts multiple reference inputs while preserving fine-grained appearance details, which helps the completed regions integrate coherently with the rest of the image.</p>

<h3>Benchmark Creation and Robust Testing</h3>

<p>Since no existing dataset suited the reference-based human completion task, the researchers curated their own benchmark of 417 tripartite image groups, sourced from Adobe’s 2023 UniHuman project.</p>
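
<p>One way to picture such a benchmark entry is as a small typed record with three parts. The sketch below is purely illustrative: the article does not spell out what each part of a group contains, so the field names (<code>source_image</code>, <code>mask</code>, <code>reference_image</code>), the class <code>CompletionGroup</code>, and the helper <code>build_groups</code> are all assumptions, not the authors' data format.</p>

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class CompletionGroup:
    """One hypothetical benchmark entry made of three related files."""

    source_image: str     # assumed: path to the photo of the person to complete
    mask: str             # assumed: path to the mask marking the occluded region
    reference_image: str  # assumed: path to a reference photo for guidance


def build_groups(rows: Iterable[Tuple[str, ...]]) -> List[CompletionGroup]:
    """Turn (source, mask, reference) path tuples into typed groups."""
    groups = []
    for index, row in enumerate(rows):
        # Reject any entry that does not have exactly three parts.
        if len(row) != 3:
            raise ValueError(f"group {index} has {len(row)} parts, expected 3")
        groups.append(CompletionGroup(*row))
    return groups
```

<p>Validating the three-part shape up front means a malformed entry fails when the benchmark is loaded rather than partway through an evaluation run.</p>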

<div id="attachment_216628" style="width: 949px" class="wp-caption alignnone">

<img loading="lazy" decoding="async" aria-describedby="caption-attachment-216628" class="wp-image-216628" src="https://www.unite.ai/wp-content/uploads/2025/04/wpose-unihuman.jpg" alt="Samples of poses from the Adobe Research UniHuman project." width="939" height="289" />

<p id="caption-attachment-216628" class="wp-caption-text"><em>Pose examples from the Adobe Research 2023 UniHuman project.</em></p>

</div>

<p>The authors utilized advanced image encoding techniques coupled with unique training strategies to ensure robust performance across diverse applications.</p>

<h3>Training and Evaluation Metrics</h3>

<p>Training for the CompleteMe model included various techniques to avoid overfitting and improve performance. The model was then evaluated using multiple perceptual metrics.</p>

<p>CompleteMe delivered consistently strong quantitative results, and the qualitative comparisons and user study likewise highlighted its superior visual fidelity and identity preservation compared to its peers.</p>

<h3>Conclusion: A New Era in Image Processing</h3>

<p>With its ability to adapt reference material to occluded regions, CompleteMe is a notable advance in the niche but rapidly evolving field of neural image editing. The study's results, and especially its extensive supplementary materials, merit close examination.</p>

<div id="attachment_216636" style="width: 978px" class="wp-caption alignnone">

<img loading="lazy" decoding="async" aria-describedby="caption-attachment-216636" class="wp-image-216636" src="https://www.unite.ai/wp-content/uploads/2025/04/zoom-in.jpg" alt="A key reminder to carefully assess the extensive results in the supplementary material." width="968" height="199" />

<p id="caption-attachment-216636" class="wp-caption-text"><em>A reminder to closely examine the extensive results provided in the supplementary materials.</em></p>

</div>

</div>
