Unlocking the Power of Large Language Models: A Deep Dive into Advanced Reasoning Engines

Large language models (LLMs) have rapidly evolved from simple text prediction systems to advanced reasoning engines capable of tackling complex challenges. Initially designed to predict the next word in a sentence, these models can now solve mathematical equations, write functional code, and make data-driven decisions. The key driver behind this transformation is the development of reasoning techniques that enable AI models to process information in a structured and logical manner. This article delves into the reasoning techniques behind leading models like OpenAI’s o3, Grok 3, DeepSeek R1, Google’s Gemini 2.0, and Claude 3.7 Sonnet, highlighting their strengths and comparing their performance, cost, and scalability.

Exploring Reasoning Techniques in Large Language Models

To understand how LLMs reason differently, we need to examine the various reasoning techniques they employ. This section introduces four key reasoning techniques.

- Inference-Time Compute Scaling

This technique enhances a model’s reasoning by allocating extra computational resources during the response generation phase, without changing the model’s core structure or requiring retraining. It allows the model to generate multiple potential answers, evaluate them, and refine its output through additional steps. For example, when solving a complex math problem, the model may break it down into smaller parts and work through each sequentially. This approach is beneficial for tasks that demand deep, deliberate thought, such as logical puzzles or coding challenges. While it improves response accuracy, it also leads to higher runtime costs and slower response times, making it suitable for applications where precision is prioritized over speed.

- Pure Reinforcement Learning (RL)

In this technique, the model is trained to reason through trial and error, rewarding correct answers and penalizing mistakes. The model interacts with an environment—such as a set of problems or tasks—and learns by adjusting its strategies based on feedback. For instance, when tasked with writing code, the model might test various solutions and receive a reward if the code executes successfully. This approach mimics how a person learns a game through practice, enabling the model to adapt to new challenges over time. However, pure RL can be computationally demanding and occasionally unstable, as the model may discover shortcuts that do not reflect true understanding.

- Pure Supervised Fine-Tuning (SFT)

This method enhances reasoning by training the model solely on high-quality labeled datasets, often created by humans or stronger models. The model learns to replicate correct reasoning patterns from these examples, making it efficient and stable. For example, to enhance its ability to solve equations, the model might study a collection of solved problems and learn to follow the same steps. This approach is straightforward and cost-effective but relies heavily on the quality of the data. If the examples are weak or limited, the model’s performance may suffer, and it could struggle with tasks outside its training scope. Pure SFT is best suited for well-defined problems where clear, reliable examples are available.

- Reinforcement Learning with Supervised Fine-Tuning (RL+SFT)

This approach combines the stability of supervised fine-tuning with the adaptability of reinforcement learning. Models undergo supervised training on labeled datasets, establishing a solid foundation of knowledge. Subsequently, reinforcement learning helps to refine the model’s problem-solving skills. This hybrid method balances stability and adaptability, offering effective solutions for complex tasks while mitigating the risk of erratic behavior. However, it requires more resources than pure supervised fine-tuning.
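
The first technique above, Inference-Time Compute Scaling, can be illustrated with majority voting over several sampled answers. This method is often called self-consistency and is one common way to spend extra compute at response time. The sketch is illustrative only and is not how any model in this article is actually implemented. The function `sample_answer` is a hypothetical stand-in for a single model call, simulated here as a noisy adder that is right 60% of the time. The names `answer_with_budget` and `accuracy` are likewise made up for the example.

```python
import random
from collections import Counter


def sample_answer(a: int, b: int, rng: random.Random, p_correct: float = 0.6) -> int:
    """Hypothetical stand-in for ONE sampled model response to "what is a + b?".
    Correct with probability p_correct; otherwise off by a small random amount."""
    if rng.random() < p_correct:
        return a + b
    return a + b + rng.choice([-3, -2, -1, 1, 2, 3])


def answer_with_budget(a: int, b: int, n_samples: int, rng: random.Random) -> int:
    """Spend more compute at inference time: draw n_samples independent answers
    and return the most frequent one (majority vote / self-consistency)."""
    votes = Counter(sample_answer(a, b, rng) for _ in range(n_samples))
    return votes.most_common(1)[0][0]


def accuracy(n_samples: int, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of random questions answered correctly at a given sampling budget."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(trials):
        a, b = rng.randint(0, 99), rng.randint(0, 99)
        if answer_with_budget(a, b, n_samples, rng) == a + b:
            correct += 1
    return correct / trials


if __name__ == "__main__":
    for n in (1, 5, 15):
        # Each extra sample is one more model call, so cost grows linearly with n.
        print(f"samples per question = {n:2d}   accuracy = {accuracy(n):.3f}")
```

Running the script prints one accuracy figure per sampling budget. It reproduces the trade-off described above: answers become more reliable, and in exchange every question costs more model calls and takes longer.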

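The three training-time techniques (Pure RL, Pure SFT, and RL+SFT) can be compared on a deliberately tiny toy problem. This sketch is illustrative only and is not any lab’s actual training recipe. Here the "model" is a softmax policy choosing among four hypothetical reasoning strategies, each with a made-up success rate (`SUCCESS_PROB`). The three techniques map onto it as follows:

- SFT imitates labeled demonstrations.
- RL uses the REINFORCE policy-gradient update, with a simulated verifier providing the reward.
- RL+SFT runs SFT first and then RL.

```python
import math
import random

# Hypothetical toy task: four candidate "reasoning strategies" for one kind of problem.
# SUCCESS_PROB[k] is the made-up chance that strategy k yields a correct answer.
SUCCESS_PROB = [0.2, 0.5, 0.9, 0.1]


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def expected_reward(logits: list[float]) -> float:
    """Probability that a strategy sampled from the policy gives a correct answer."""
    return sum(p * s for p, s in zip(softmax(logits), SUCCESS_PROB))


def sft(logits: list[float], demos: list[int], lr: float = 0.5) -> list[float]:
    """Pure SFT: one gradient-ascent step on log p(label) per labeled demonstration.
    For a softmax policy, d log p(y) / d logit_k = onehot(y)_k - p_k."""
    logits = list(logits)
    for y in demos:
        probs = softmax(logits)
        for k in range(len(logits)):
            logits[k] += lr * ((1.0 if k == y else 0.0) - probs[k])
    return logits


def rl(logits: list[float], steps: int, rng: random.Random, lr: float = 0.1) -> list[float]:
    """Pure RL via REINFORCE: sample a strategy, get reward 1 if the simulated verifier
    accepts the answer (else 0), and move logits by (reward - baseline) * grad log p(a)."""
    logits = list(logits)
    baseline = 0.0  # running average of past rewards; reduces gradient variance
    for _ in range(steps):
        probs = softmax(logits)
        a = rng.choices(range(len(probs)), weights=probs)[0]
        reward = 1.0 if rng.random() < SUCCESS_PROB[a] else 0.0
        advantage = reward - baseline
        baseline += 0.05 * (reward - baseline)
        for k in range(len(logits)):
            logits[k] += lr * advantage * ((1.0 if k == a else 0.0) - probs[k])
    return logits


if __name__ == "__main__":
    untrained = [0.0] * 4          # uniform over the four strategies
    demos = [1] * 5                # labeled examples that only ever use strategy 1
    pure_sft = sft(untrained, demos)
    pure_rl = rl(untrained, 3000, random.Random(0))
    sft_then_rl = rl(pure_sft, 3000, random.Random(0))  # RL+SFT: SFT first, then RL
    for name, lg in [("untrained", untrained), ("pure SFT", pure_sft),
                     ("pure RL", pure_rl), ("RL+SFT", sft_then_rl)]:
        print(f"{name:9s} expected reward = {expected_reward(lg):.3f}")
```

The demonstrations only ever show strategy 1, which succeeds half the time. Pure SFT therefore improves on the untrained policy (expected reward 0.425) but can never exceed 0.5, because it is capped by the quality of its examples. RL has no such cap, since it learns directly from the reward signal. Starting RL from the SFT policy gives it a better-than-random starting point, mirroring the hybrid approach described above.
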
Examining Reasoning Approaches in Leading LLMs

Now, let’s analyze how these reasoning techniques are utilized in the top LLMs, including OpenAI’s o3, Grok 3, DeepSeek R1, Google’s Gemini 2.0, and Claude 3.7 Sonnet.

- OpenAI’s o3

OpenAI’s o3 primarily leverages Inference-Time Compute Scaling to enhance its reasoning abilities. By dedicating extra computational resources during response generation, o3 delivers highly accurate results on complex tasks such as advanced mathematics and coding. This approach allows o3 to excel on benchmarks like the ARC-AGI test. However, it also brings higher inference costs and slower response times, making o3 best suited for precision-critical applications like research or technical problem-solving.

- xAI’s Grok 3

Grok 3, developed by xAI, combines Inference-Time Compute Scaling with specialized hardware, such as co-processors for tasks like symbolic mathematical manipulation. This unique architecture enables Grok 3 to process large volumes of data quickly and accurately, making it highly effective for real-time applications like financial analysis and live data processing. While Grok 3 offers rapid performance, its high computational demands can drive up costs. It excels in environments where speed and accuracy are paramount.

- DeepSeek R1

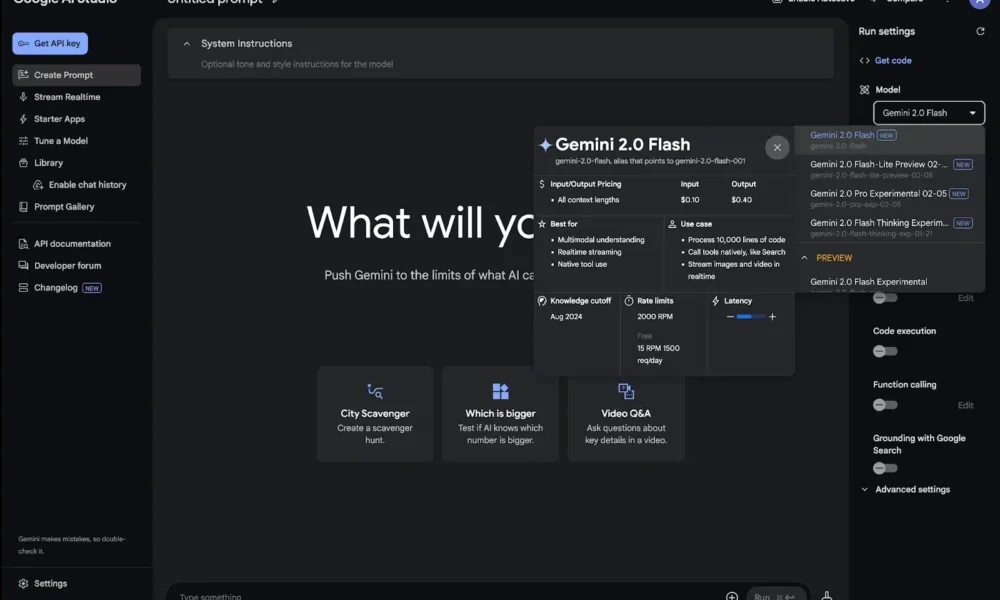

DeepSeek R1 builds on DeepSeek R1-Zero, which was trained with Pure Reinforcement Learning alone and developed independent problem-solving strategies through trial and error. This makes the approach adaptable and capable of handling unfamiliar tasks, such as complex math or coding challenges. However, Pure RL can result in unpredictable outputs, so DeepSeek R1 adds Supervised Fine-Tuning stages to its training pipeline to improve consistency and coherence. This hybrid approach makes DeepSeek R1 a cost-effective choice for applications that prioritize flexibility over polished responses.

- Google’s Gemini 2.0

Google’s Gemini 2.0 employs a hybrid approach, likely combining Inference-Time Compute Scaling with Reinforcement Learning, to enhance its reasoning capabilities. This model is designed to handle multimodal inputs, such as text, images, and audio, while excelling in real-time reasoning tasks. Processing information before responding improves its accuracy, particularly on complex queries. However, like other models using inference-time scaling, Gemini 2.0 can be costly to operate. It is ideal for applications that require both reasoning and multimodal understanding, such as interactive assistants or data analysis tools.

- Anthropic’s Claude 3.7 Sonnet

Claude 3.7 Sonnet from Anthropic integrates Inference-Time Compute Scaling with a focus on safety and alignment. This enables the model to perform well in tasks that require both accuracy and explainability, such as financial analysis or legal document review. Its “extended thinking” mode lets users choose between quick answers and longer, step-by-step reasoning, and developers can cap how many tokens the model spends thinking. This makes it versatile for both quick and in-depth problem-solving. The flexibility comes with a trade-off users must manage: deeper reasoning means longer response times. Claude 3.7 Sonnet is especially suited for regulated industries where transparency and reliability are crucial.

The Future of Advanced AI Reasoning

The evolution from basic language models to sophisticated reasoning systems marks a significant advance in AI technology. By using techniques like Inference-Time Compute Scaling, Pure Reinforcement Learning, RL+SFT, and Pure SFT, models such as OpenAI’s o3, Grok 3, DeepSeek R1, Google’s Gemini 2.0, and Claude 3.7 Sonnet have become better at solving complex problems. Each model’s reasoning approach defines its strengths, from deliberate problem-solving to cost-effective flexibility. As these models continue to progress, they will open up new possibilities, making AI an even more powerful tool for addressing real-world challenges.

- How does OpenAI’s o3 differ from Grok 3 in their reasoning approaches?

Both rely on Inference-Time Compute Scaling. OpenAI’s o3 dedicates extra computation at response time to maximize accuracy on complex tasks such as advanced mathematics and coding, at the price of higher inference costs and slower responses. Grok 3 pairs inference-time scaling with specialized hardware to deliver fast results for real-time applications such as financial analysis and live data processing.

- What sets DeepSeek R1 apart from Gemini 2.0 in terms of reasoning approaches?

DeepSeek R1 builds its reasoning skills during training, combining Pure Reinforcement Learning with Supervised Fine-Tuning, which makes it a cost-effective and flexible choice. Gemini 2.0 likely combines Inference-Time Compute Scaling with Reinforcement Learning and is designed for multimodal inputs such as text, images, and audio; this improves accuracy on complex queries but makes it more costly to operate.

- How does Claude 3.7 Sonnet differ from OpenAI’s o3 in their reasoning approaches?

Both use Inference-Time Compute Scaling. Claude 3.7 Sonnet lets users adjust how much reasoning it performs through its “extended thinking” mode and emphasizes safety and alignment. OpenAI’s o3 is geared toward maximizing accuracy on precision-critical tasks such as advanced mathematics and coding.

- What distinguishes Grok 3 from DeepSeek R1 in their reasoning approaches?

Grok 3 scales its reasoning at inference time with support from specialized hardware, prioritizing speed and accuracy at a high computational cost. DeepSeek R1 develops its reasoning during training through reinforcement learning refined by supervised fine-tuning, making it a more cost-effective and adaptable option.

- How does Gemini 2.0 differ from Claude 3.7 Sonnet in their reasoning approaches?

Gemini 2.0 likely pairs Inference-Time Compute Scaling with Reinforcement Learning and focuses on multimodal understanding. Claude 3.7 Sonnet combines Inference-Time Compute Scaling with adjustable reasoning depth and a focus on safety and alignment, which suits it to regulated industries that need transparent, reliable output.