Data-Centric AI: The Backbone of Innovation

Artificial Intelligence (AI) has revolutionized industries, streamlining processes and increasing efficiency. The cornerstone of AI success is the quality of the training data. Accurate data labeling is crucial for AI models, and it has traditionally been done by hand.

However, manual labeling is slow, error-prone, and costly. As AI systems handle more complex data types like text, images, videos, and audio, the demand for precise and scalable data labeling solutions grows. ProVision emerges as a cutting-edge platform that automates data synthesis, revolutionizing the way data is prepared for AI training.

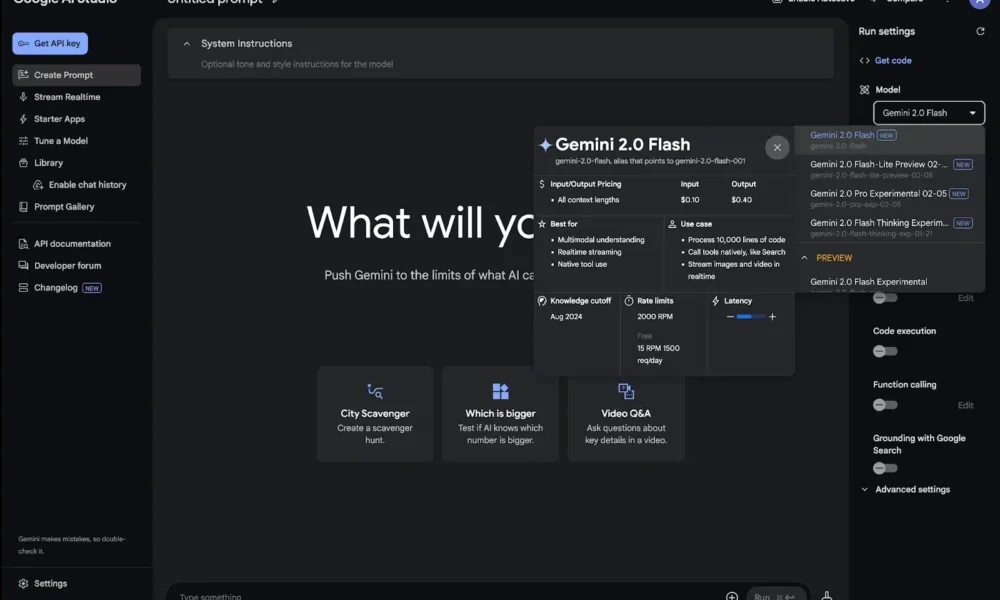

The Rise of Multimodal AI: Unleashing New Capabilities

Multimodal AI systems analyze diverse data forms to provide comprehensive insights and predictions. These systems, mimicking human perception, combine inputs like text, images, sound, and video to understand complex contexts. In healthcare, AI analyzes medical images and patient histories for accurate diagnoses, while virtual assistants interpret text and voice commands for seamless interactions.

The demand for multimodal AI is surging as industries harness diverse data. Integrating and synchronizing data from several modalities is challenging because it requires large volumes of annotated data. Manual labeling is too slow and too costly to keep up, which creates bottlenecks when scaling AI initiatives.

ProVision offers a solution with its advanced automation capabilities, catering to industries like healthcare, retail, and autonomous driving by providing high-quality labeled datasets.

Revolutionizing Data Synthesis with ProVision

ProVision is a scalable framework that automates the labeling and synthesis of datasets for AI systems, overcoming the limitations of manual labeling. It represents each image as a scene graph and runs human-written programs over that graph to generate high-quality instruction data. With a suite of data generators, ProVision has produced more than 10 million annotated data points, which make up the ProVision-10M dataset.
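To make the idea of generating instruction data from scene graphs concrete, here is a minimal sketch in Python. It is not ProVision's actual code: the `SceneGraph` class, the two generator functions, and the question templates are hypothetical names chosen for illustration, and the example graph is hand-written.

```python
from dataclasses import dataclass


@dataclass
class SceneGraph:
    """Hypothetical scene graph: objects with attributes, plus relations."""
    objects: dict    # object id -> {"name": str, "attributes": [str, ...]}
    relations: list  # (subject id, predicate, object id) triples


def attribute_questions(graph):
    """One yes/no question per (object, attribute) pair in the graph."""
    qa = []
    for obj in graph.objects.values():
        for attr in obj["attributes"]:
            qa.append({"question": f"Is the {obj['name']} {attr}?",
                       "answer": "Yes"})
    return qa


def relation_questions(graph):
    """One question per relation triple, answered by the object's name."""
    qa = []
    for subj_id, predicate, obj_id in graph.relations:
        subject = graph.objects[subj_id]["name"]
        target = graph.objects[obj_id]["name"]
        qa.append({"question": f"What is the {subject} {predicate}?",
                   "answer": target})
    return qa


def generate_instruction_data(graph):
    """Run every generator over the same scene graph and pool the results."""
    return attribute_questions(graph) + relation_questions(graph)


# Hand-written example graph: a red cup on a wooden table.
example_graph = SceneGraph(
    objects={
        0: {"name": "cup", "attributes": ["red"]},
        1: {"name": "table", "attributes": ["wooden"]},
    },
    relations=[(0, "on", 1)],
)

for pair in generate_instruction_data(example_graph):
    print(pair["question"], "->", pair["answer"])
```

Because each generator is an ordinary function over the graph, adding a new question type means writing one more function, and the same graph can be reused across all of them.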

One of ProVision’s standout features is its scene graph generation pipeline, which automatically builds scene graphs for images that have no prior annotations. This means ProVision is not limited to datasets that already ship with scene graphs, which makes it well-suited to a range of industries and use cases.
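As a rough illustration of how a scene graph might be assembled for an unannotated image, the sketch below turns detector output into objects and simple spatial relations. Everything here is an assumption for illustration: the detection format (a label plus an `(x_min, y_min, x_max, y_max)` box), the `build_scene_graph` helper, and the single "left of" rule are hypothetical, and a real pipeline would rely on trained models for detection, attributes, and relations.

```python
def center_x(box):
    """Horizontal center of an (x_min, y_min, x_max, y_max) box."""
    x_min, _, x_max, _ = box
    return (x_min + x_max) / 2


def build_scene_graph(detections):
    """Assemble a toy scene graph from hypothetical detector output.

    Each detection is a dict with a "label" and a "box". The only relation
    derived here is "left of", based on the boxes' horizontal centers.
    """
    objects = {
        i: {"name": det["label"], "box": det["box"]}
        for i, det in enumerate(detections)
    }
    relations = []
    for i, a in objects.items():
        for j, b in objects.items():
            if i != j and center_x(a["box"]) < center_x(b["box"]):
                relations.append((i, "left of", j))
    return {"objects": objects, "relations": relations}


# Hand-written detections standing in for the output of an object detector.
detections = [
    {"label": "dog", "box": (10, 40, 60, 90)},
    {"label": "ball", "box": (120, 70, 140, 90)},
]

graph = build_scene_graph(detections)
print(graph["relations"])
```

The resulting graph has the same shape of information (objects plus relation triples) that question-generating programs consume, which is why a generated graph can stand in for a manually annotated one.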

ProVision’s strength lies in its ability to handle diverse data modalities with exceptional accuracy and speed, ensuring seamless integration for coherent analysis. Its scalability benefits industries with substantial data requirements, offering efficient and customizable data synthesis processes.

Benefits of Automated Data Synthesis

Automated data synthesis significantly accelerates AI training by cutting the time spent on data preparation, which in turn shortens the path to model deployment. Cost efficiency is another advantage: ProVision reduces the resource-intensive work of manual labeling, making high-quality data annotation accessible to organizations of all sizes.

The quality of data produced by ProVision surpasses manual labeling standards, ensuring accuracy and reliability while scaling to meet increasing demand for labeled data. ProVision’s applications across diverse domains showcase its ability to enhance AI-driven solutions effectively.

ProVision in Action: Transforming Real-World Scenarios

Visual Instruction Data Generation

Enhancing Multimodal AI Performance

Understanding Image Semantics

Automating Question-Answer Data Creation

Facilitating Domain-Specific AI Training

Improving Model Benchmark Performance

Empowering Innovation with ProVision

ProVision revolutionizes AI by automating the creation of multimodal datasets, enabling faster and more accurate outcomes. Through reliability, precision, and adaptability, ProVision drives innovation in AI technology, ensuring a deeper understanding of our complex world.

What is ProVision and how does it enhance multimodal AI?

ProVision is a software platform that enhances multimodal AI by automatically synthesizing data from various sources, such as images, videos, and text. This allows AI models to learn from a more diverse and comprehensive dataset, leading to improved performance.

How does ProVision automate data synthesis?

ProVision uses advanced algorithms to automatically combine and augment data from different sources, creating a more robust dataset for AI training. This automation saves time and ensures that the AI model is exposed to a wide range of inputs.

Can ProVision be integrated with existing AI systems?

Yes, ProVision is designed to work seamlessly with existing AI systems. It can be easily integrated into your workflow, allowing you to enhance the performance of your AI models without having to start from scratch.

What are the benefits of using ProVision for data synthesis?

By using ProVision for data synthesis, you can improve the accuracy and robustness of your AI models. The platform allows you to easily scale your dataset and diversify the types of data your AI system is trained on, leading to more reliable results.

How does ProVision compare to manual labeling techniques?

Manual labeling techniques require a significant amount of time and effort to create labeled datasets for AI training. ProVision automates this process, saving you time and resources while also producing more comprehensive and diverse datasets for improved AI performance.