Revolutionizing Creativity: Luma Unveils Luma Agents for Comprehensive AI-Driven Content Creation

AI video-generation startup Luma has launched Luma Agents, which are designed to handle end-to-end creative work across text, images, video, and audio. The agents are powered by Luma’s Unified Intelligence model family, which is built on a single multimodal reasoning system.

Empowering Agencies and Enterprises with Luma Agents

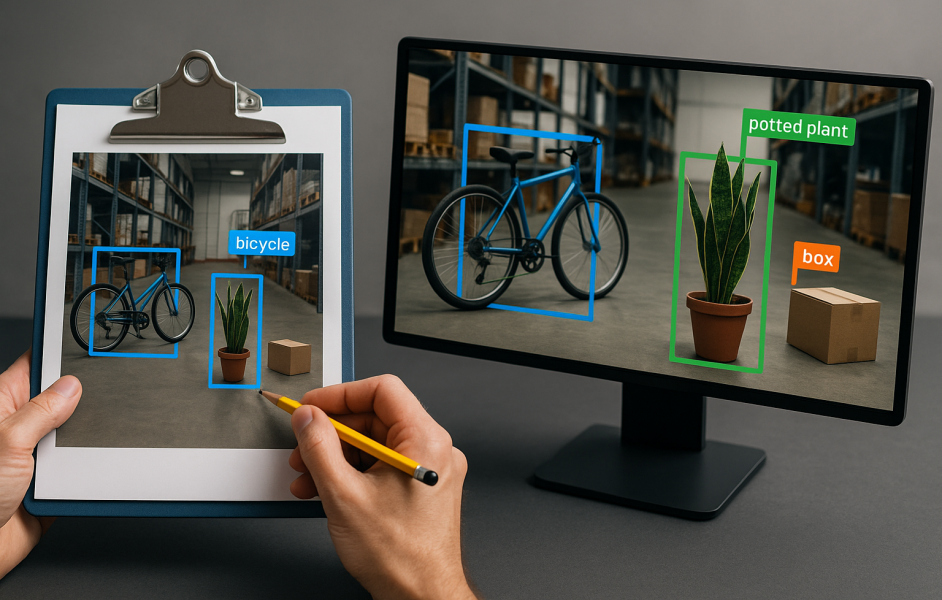

Luma is pitching the agents to advertising agencies, marketing teams, design studios, and businesses. The agents can plan and generate content across media formats while coordinating with other AI models, including Luma’s Ray 3.14 and Google’s Veo 3, among others.

Uni-1 Model: The Brain Behind Luma Agents

At the core of Luma Agents is the Uni-1 model, the first member of Luma’s Unified Intelligence family. The model has been trained on audio, video, imagery, language, and spatial reasoning, according to CEO and co-founder Amit Jain.

Jain explained to TechCrunch that Uni-1 is capable of “thinking in language and visualizing in images,” referring to it as “intelligence in pixels.” Future model releases will introduce additional capabilities in audio and video production.

Transforming Business Practices

“Our customers aren’t just acquiring a tool; they’re reinventing their business processes,” Jain stated, emphasizing the paradigm shift Luma Agents represent.

Seamless Collaboration and Iteration

Luma Agents stand out for their ability to maintain consistent context across various assets and collaborators, allowing for continuous improvement of outputs through iterative self-critique. Jain noted that this capability mirrors the successful methodologies employed by coding agents, which enable constant evaluation and refinement.
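
The generate, critique, and refine cycle described above can be sketched in a few lines of Python. This is a generic illustration of the pattern, not Luma’s implementation: the `refine` function, the toy `generate`, `critique`, and `revise` stand-ins, and the `REQUIRED` keyword list are all hypothetical names invented for this example, and a real system would call models where the stand-ins use simple string checks.

```python
from typing import Callable, List, Tuple

# Hypothetical checklist the toy critic scores a draft against.
REQUIRED = ("lipstick", "red", "Paris")


def refine(
    brief: str,
    generate: Callable[[str], str],
    critique: Callable[[str, str], Tuple[float, List[str]]],
    revise: Callable[[str, List[str]], str],
    max_rounds: int = 3,
    target: float = 0.9,
) -> Tuple[str, List[Tuple[str, float]]]:
    """Generate a draft, then alternate critique and revision.

    Stops when the critic's score reaches `target` or after `max_rounds`
    critiques. Returns the final draft and the (draft, score) history.
    """
    draft = generate(brief)
    history: List[Tuple[str, float]] = []
    for _ in range(max_rounds):
        score, notes = critique(brief, draft)
        history.append((draft, score))
        if score >= target:
            break
        draft = revise(draft, notes)
    return draft, history


# Toy stand-ins (assumptions for illustration only).
def toy_generate(brief: str) -> str:
    return "Ad concept: lipstick"


def toy_critique(brief: str, draft: str) -> Tuple[float, List[str]]:
    # Score is the fraction of required keywords already in the draft;
    # the notes list the keywords that are still missing.
    missing = [word for word in REQUIRED if word not in draft]
    return 1 - len(missing) / len(REQUIRED), missing


def toy_revise(draft: str, notes: List[str]) -> str:
    # Address one piece of feedback per round.
    return draft + ", " + notes[0]


if __name__ == "__main__":
    final, history = refine(
        "Lipstick campaign brief", toy_generate, toy_critique, toy_revise
    )
    print(final)
    for draft, score in history:
        print(f"{score:.2f}  {draft}")
```

The loop keeps the brief available at every critique step, which is one simple way to hold context constant while the output changes between rounds.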

Current AI workflows in creative sectors often fall short of the speed and efficiency users expect. Jain described the experience as “sifting through 100 models and learning how to prompt them” rather than interacting with a single system.

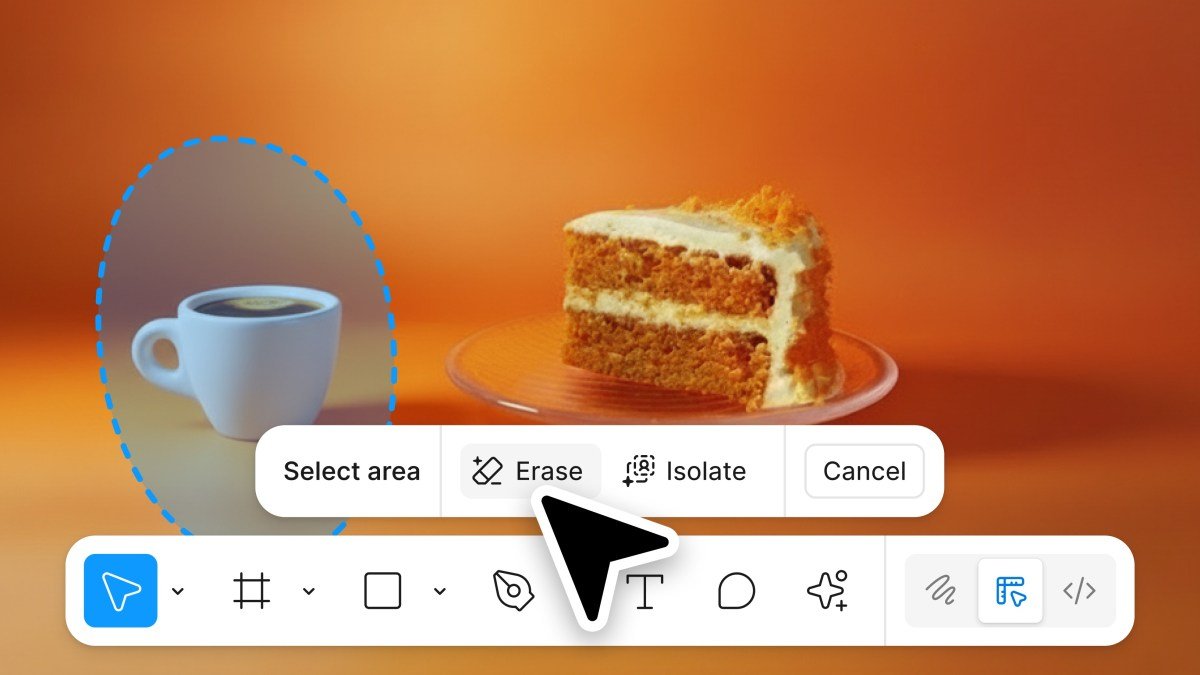

Innovative User Experience

What differentiates Luma Agents is their ability to generate extensive variations without requiring users to prompt back and forth. Users can steer the creative process through dialogue rather than repetitive inputs.

Unified Intelligence: A New Creative Paradigm

Jain likened the functionality of Luma’s system to an architect’s mental representation of a building, asserting that Unified Intelligence allows for holistic end-to-end creative work.

Efficiency in Action

In a demonstration, a 200-word brief along with a product image (like a tube of lipstick) enabled the system to swiftly generate a multitude of concepts for an ad campaign, including locations, models, and color schemes.

In another example, Luma Agents turned a $15 million, year-long advertising campaign into localized ads for various countries within 40 hours and for under $20,000, all while meeting internal quality controls.

Gradual Rollout for Optimal User Experience

While Luma Agents are now accessible via API, Jain mentioned that access will be gradually rolled out to ensure consistent user availability and to prevent workflow interruptions.

FAQs

1. What are Luma’s new creative AI agents?

Luma’s creative AI agents are advanced tools designed to assist users in various creative tasks. Powered by the new ‘Unified Intelligence’ models, they can generate content, provide suggestions, and facilitate brainstorming sessions across diverse fields like writing, design, and marketing.

2. How does the ‘Unified Intelligence’ model enhance these AI agents?

Unified Intelligence is built on a single multimodal reasoning system rather than a collection of separate models. According to Luma, this lets the agents keep context consistent across assets and collaborators and improve their outputs through iterative self-critique.

3. What types of tasks can Luma’s creative AI agents help with?

These AI agents can assist with a wide range of tasks, including content creation (like writing articles or creating graphics), generating marketing strategies, aiding in product design, and even providing feedback on creative projects, making them versatile tools for professionals and enthusiasts alike.

4. Are Luma’s AI agents customizable for individual needs?

Yes, Luma’s AI agents can be tailored to fit individual user preferences. Users can input specific guidelines, styles, and objectives, allowing the AI to adjust its outputs accordingly and meet unique creative requirements.

5. How can I access Luma’s creative AI agents?

Luma Agents are accessible via API. According to Jain, access is being rolled out gradually to keep availability consistent and to prevent workflow interruptions.