Controversial NVIDIA Driver Update Sparks Concerns in AI and Gaming Communities

NVIDIA Releases Critical Hotfix to Address Temperature Reporting Issue

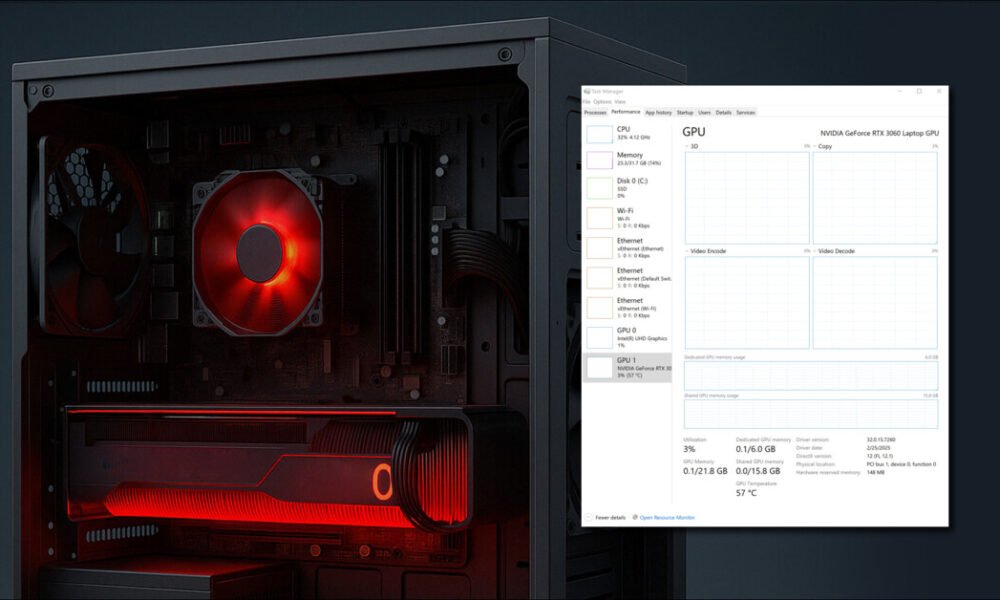

NVIDIA recently released a critical hotfix for a driver update that left systems reporting safe GPU temperatures while actual temperatures quietly climbed toward potentially critical levels. According to NVIDIA’s official post, the issue was that GPU monitoring utilities could stop reporting accurate temperatures after a PC woke from sleep.

Timeline of Emergent Problems Following Driver Update

After the affected Game Ready driver 576.02 rolled out, reports surfaced on forums and Reddit threads describing disrupted fan curve behavior and core thermal regulation. Users reported GPUs idling at high temperatures and overheating under normal loads, which prompted a wave of concerns and complaints.

The Impact of the Faulty Update

The faulty 576.02 driver update drew user reports of GPU crashes caused by heat buildup, inconsistent temperature readings, and potential damage to system components. Although the update initially offered performance improvements, it ultimately did more harm than good, especially for users running AI workflows that rely on high-performance hardware.

Risk Assessment and Damage Control

While NVIDIA has provided a hotfix to address the issue, concerns remain regarding the long-term effects of sustained high temperatures on GPU performance and system stability. Users are advised to monitor their GPU temperatures carefully and consider rolling back to previous driver versions if necessary to prevent potential damage.
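For readers who want to check temperatures and the installed driver version from a script, the following is a minimal sketch that shells out to `nvidia-smi`, the command-line tool that ships with the NVIDIA driver. The function names (`parse_gpu_rows`, `query_gpus`, `report`) and the 85 °C warning threshold are assumptions made for illustration, not NVIDIA guidance; check the rated limits for your own card.

```python
import subprocess

# Fields requested from nvidia-smi, in this order.
QUERY_FIELDS = "index,name,driver_version,temperature.gpu"


def parse_gpu_rows(csv_text):
    """Parse `nvidia-smi --format=csv,noheader,nounits` output into dicts."""
    rows = []
    for line in csv_text.strip().splitlines():
        parts = [p.strip() for p in line.split(",")]
        if len(parts) != 4:
            continue  # skip lines that do not match the four queried fields
        index, name, driver, temp = parts
        rows.append({
            "index": int(index),
            "name": name,
            "driver": driver,
            # A non-numeric value (for example "[N/A]") means no reading.
            "temp_c": int(temp) if temp.isdigit() else None,
        })
    return rows


def query_gpus():
    """Run nvidia-smi once and return parsed rows (needs an NVIDIA driver)."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_rows(result.stdout)


def report(rows, warn_at_c=85):
    """Return one status line per GPU; warn_at_c is an assumed threshold."""
    lines = []
    for row in rows:
        temp = row["temp_c"]
        if temp is None:
            shown, status = "n/a", "NO READING"
        elif temp >= warn_at_c:
            shown, status = f"{temp} C", "HOT"
        else:
            shown, status = f"{temp} C", "ok"
        lines.append(
            f"GPU {row['index']} ({row['name']}, driver {row['driver']}): "
            f"{shown} [{status}]"
        )
    return lines

# Example use on a machine with an NVIDIA GPU:
#     for line in report(query_gpus()):
#         print(line)
```

Printing the driver version next to the temperature makes it easy to confirm whether a machine is still on 576.02 before deciding on a rollback.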

Protecting AI Workflows from Heat Damage

AI practitioners face a higher risk of heat damage due to the intensive and consistent workload placed on GPUs during machine learning processes. Proper thermal management and monitoring are crucial to prevent overheating and maintain optimal performance in AI applications.
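One way to apply this to a long training job is a small thermal guard called between steps. The sketch below is illustrative only: `wait_until_cool` is a hypothetical helper, the reader callable is assumed to be supplied by the caller (for example, a wrapper around `nvidia-smi`), and the 83 °C and 75 °C thresholds are placeholder values rather than vendor limits.

```python
import time


def wait_until_cool(read_temp_c, max_temp_c=83, resume_temp_c=75,
                    poll_seconds=5.0, max_polls=120, sleep=time.sleep):
    """Block while the GPU is too hot; return the number of polls spent waiting.

    read_temp_c is a caller-supplied callable (hypothetical here) returning the
    current temperature in Celsius, or None when no reading is available.
    """
    def read():
        temp = read_temp_c()
        if temp is None:
            # A missing reading is treated as unsafe rather than as "cool".
            raise RuntimeError("no temperature reading; refusing to run blind")
        return temp

    temp = read()
    if temp < max_temp_c:
        return 0  # cool enough, carry on immediately

    polls = 0
    # Hysteresis: once paused, wait for the lower resume threshold so the job
    # does not flap between running and pausing around a single limit.
    while temp > resume_temp_c:
        if polls >= max_polls:
            raise RuntimeError("GPU did not cool down in time")
        sleep(poll_seconds)
        polls += 1
        temp = read()
    return polls
```

Calling `wait_until_cool(reader)` before each batch or epoch pauses the job when the card runs hot. Raising on a missing reading is a deliberate choice here, since a silent sensor is exactly the failure described in this article.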

This article was first published on Tuesday, April 22, 2025.

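Q: What is the NVIDIA hotfix for the GPU driver’s overheating issue?

A: The hotfix is a driver update from NVIDIA. According to the company’s post, it addresses GPU monitoring utilities failing to report accurate temperatures after a PC wakes from sleep, the problem associated with the overheating reports that followed driver 576.02.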

Q: How do I know if my GPU is affected by the overheating issue?

A: If your GPU runs hotter than usual, shows performance problems, or reports temperatures that stop updating after the PC wakes from sleep, it may be affected by the issue.
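As a rough illustration of that check, the sketch below flags a window of samples in which the temperature is missing or never changes while GPU load swings widely. It is a heuristic, not a diagnostic from NVIDIA: `looks_stale` and the 30-point utilization swing are assumptions, and the samples are assumed to be `(temperature, utilization)` pairs collected by the reader, for instance from the `temperature.gpu` and `utilization.gpu` fields of `nvidia-smi`.

```python
def looks_stale(samples, min_util_swing=30):
    """Heuristic check on a window of (temp_c, util_percent) samples.

    Returns a short reason string when the window looks suspicious,
    otherwise None. temp_c may be None when no reading was available.
    """
    if not samples:
        return "no samples"
    temps = [temp for temp, _ in samples]
    utils = [util for _, util in samples]
    if any(temp is None for temp in temps):
        return "missing temperature reading"
    # A temperature that never moves while load swings widely is unlikely
    # on real hardware, so treat it as a possibly frozen reading.
    if len(set(temps)) == 1 and max(utils) - min(utils) >= min_util_swing:
        return "temperature flat while utilization changed"
    return None
```

A flat reading at steady idle is normal, which is why the check only fires when utilization has moved. A flagged window is a prompt to cross-check with a second monitoring tool, not proof that the driver is at fault.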

Q: How do I download and install the NVIDIA hotfix for the overheating issue?

A: You can download the hotfix directly from the NVIDIA website or through the GeForce Experience application. Simply follow the instructions provided to install the update on your system.

Q: Will installing the hotfix affect my current settings or data?

A: Installing the hotfix should not affect your current settings or data. However, it is always recommended to back up important data before applying any software update.

Q: Are there any additional steps I should take to prevent my GPU from overheating in the future?

A: In addition to installing the hotfix, you can also ensure proper ventilation and cooling for your GPU, clean out any dust or debris from your system regularly, and monitor your GPU temperatures using software utilities.
