Revolutionizing Data Annotation: Voxel51’s Game-Changing Auto-Labeling System

A study by the computer vision startup Voxel51 suggests that the conventional data annotation model is on the brink of significant change. The recently published research indicates that the company's new auto-labeling technology reaches up to 95% of human-level accuracy while running up to 5,000 times faster and costing up to 100,000 times less than manual labeling.

The study evaluated leading foundation models such as YOLO-World and Grounding DINO across prominent datasets including COCO, LVIS, BDD100K, and VOC. Remarkably, in practical applications, models trained solely on AI-generated labels often equaled or even surpassed those utilizing human labels. This breakthrough has immense implications for businesses developing computer vision systems, potentially allowing for millions of dollars in annotation savings and shrinking model development timelines from weeks to mere hours.

Shifting Paradigms: From Manual Annotation to Model-Driven Automation

Data annotation has long been a cumbersome obstacle in AI development. From ImageNet to autonomous vehicle datasets, extensive teams have historically been tasked with meticulous bounding box drawing and object segmentation—a process that is both time-consuming and costly.

Conventional wisdom has been straightforward: more human-labeled data yields better AI outcomes. Voxel51’s findings challenge that assumption.

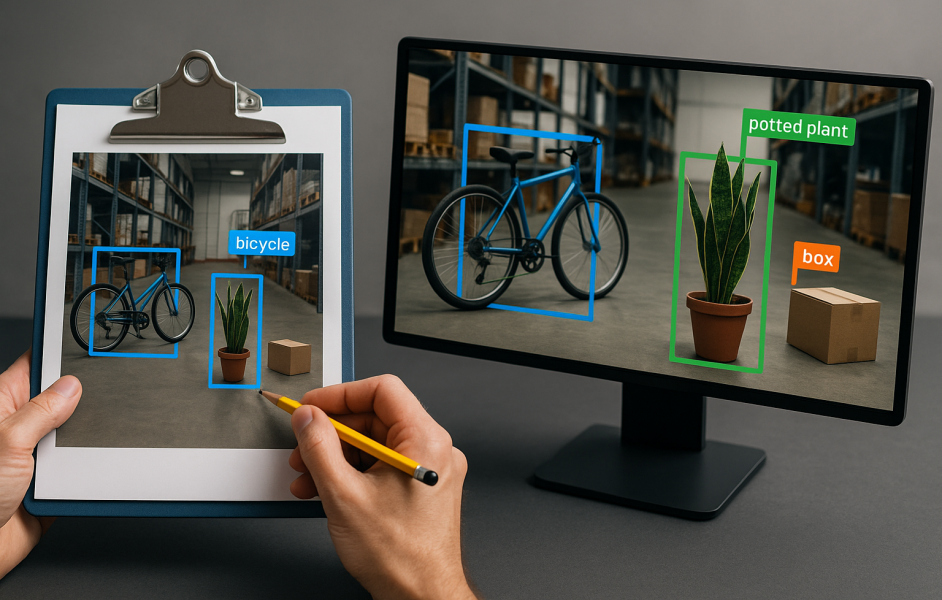

By utilizing pre-trained foundation models, some equipped with zero-shot capabilities, Voxel51 has developed a system that automates standard labeling. The process incorporates active learning to identify complex cases that require human oversight, drastically reducing time and expense.
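The idea of sending only the hard cases to humans can be illustrated with a minimal sketch. It assumes each model prediction carries a confidence score and routes it with two thresholds; the `Detection` class, the `route_detections` function, and the threshold values are hypothetical and are not taken from Voxel51's system, which the study describes only at a high level.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One model-predicted object; all fields are illustrative."""
    image_id: str
    label: str
    confidence: float  # model score in [0, 1]


def route_detections(detections, accept_threshold=0.8, discard_threshold=0.2):
    """Split predictions into auto-accepted labels and a human review queue.

    Both thresholds are hypothetical and would need tuning per dataset.
    """
    accepted, review = [], []
    for det in detections:
        if det.confidence >= accept_threshold:
            accepted.append(det)  # confident enough to keep without review
        elif det.confidence >= discard_threshold:
            review.append(det)  # uncertain: send to a human annotator
        # anything below discard_threshold is dropped as likely noise
    # Put the least confident predictions at the front of the review queue
    review.sort(key=lambda d: d.confidence)
    return accepted, review


predictions = [
    Detection("img_001", "car", 0.95),
    Detection("img_001", "bicycle", 0.41),
    Detection("img_002", "person", 0.88),
    Detection("img_003", "traffic light", 0.12),
    Detection("img_003", "bus", 0.35),
]
accepted, review = route_detections(predictions)
print("auto-accepted:", [d.label for d in accepted])
print("needs review:", [d.label for d in review])
```

In this toy run, two of the five predictions are accepted automatically and two are queued for review, so human effort is spent only where the model is unsure.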

In a case study run on an NVIDIA L40S GPU, labeling 3.4 million objects took slightly over an hour and cost just $1.18. By contrast, a manual approach via AWS SageMaker would demand nearly 7,000 hours and over $124,000. Notably, models trained on auto-generated labels occasionally outperformed those trained on human labels in particularly challenging scenarios, such as detecting rare categories in the COCO and LVIS datasets. The likely reason is the consistent labeling behavior of foundation models trained on a vast array of internet data.
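The case-study figures can be checked with a few lines of arithmetic. The sketch below uses only the numbers quoted above; because the article gives them as approximations ("slightly over an hour", "nearly 7,000 hours", "over $124,000"), the rounded inputs are assumptions and the resulting ratios are rough estimates.

```python
# Figures quoted in the case study (rounded; see the caveats in the text)
objects_labeled = 3_400_000
auto_cost_usd = 1.18
manual_cost_usd = 124_000   # "over $124,000"
manual_hours = 7_000        # "nearly 7,000 hours"
auto_hours = 1.0            # "slightly over an hour", rounded down (assumption)

cost_ratio = manual_cost_usd / auto_cost_usd
time_ratio = manual_hours / auto_hours
manual_cost_per_object = manual_cost_usd / objects_labeled
auto_cost_per_object = auto_cost_usd / objects_labeled

print(f"cost ratio:  ~{cost_ratio:,.0f}x")
print(f"time ratio:  ~{time_ratio:,.0f}x (upper estimate, since auto_hours is rounded down)")
print(f"manual cost per object: ${manual_cost_per_object:.4f}")
print(f"auto cost per object:   ${auto_cost_per_object:.2e}")
```

The cost ratio comes out at roughly 105,000, which is consistent with the headline claim of "up to 100,000 times" more cost-effective, while manual labeling works out to a few cents per object.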

Understanding Voxel51: Pioneers in Visual AI Workflows

Founded in 2016 by Professor Jason Corso and Brian Moore at the University of Michigan, Voxel51 initially focused on video analytics consultancy. Corso, a leader in computer vision, has authored over 150 academic papers and contributes substantial open-source tools to the AI ecosystem. Moore, his former Ph.D. student, currently serves as CEO.

The team shifted focus upon realizing that many AI bottlenecks lay not within model design but within data preparation. This epiphany led to the creation of FiftyOne, a platform aimed at enabling engineers to explore, refine, and optimize visual datasets more effectively.

With over $45M raised—including a $12.5M Series A and a $30M Series B led by Bessemer Venture Partners—the company has seen widespread enterprise adoption, with major players like LG Electronics, Bosch, and Berkshire Grey integrating Voxel51’s solutions into their production AI workflows.

FiftyOne: Evolving from Tool to Comprehensive AI Platform

Originally a simple visualization tool, FiftyOne has developed into a versatile, data-centric AI platform. It accommodates a myriad of formats and labeling schemas, including COCO, Pascal VOC, LVIS, BDD100K, and Open Images, while also seamlessly integrating with frameworks like TensorFlow and PyTorch.

Beyond its visualization capabilities, FiftyOne empowers users to conduct complex tasks such as identifying duplicate images, flagging mislabeled samples, and analyzing model failure modes. Its flexible plugin architecture allows for custom modules dedicated to optical character recognition, video Q&A, and advanced analytical techniques.
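To make the duplicate-detection task concrete, here is a minimal sketch that finds byte-for-byte identical files by hashing their contents. It uses only the Python standard library and is not FiftyOne's API; the `find_exact_duplicates` function and the demo file names are hypothetical. Near-duplicates (resized or re-encoded copies) would need a different technique, such as comparing image embeddings.

```python
import hashlib
import tempfile
from collections import defaultdict
from pathlib import Path


def find_exact_duplicates(image_dir):
    """Group files in image_dir whose bytes are identical.

    Returns a list of groups (lists of file names), one per set of duplicates.
    """
    groups = defaultdict(list)
    for path in sorted(Path(image_dir).iterdir()):
        if path.is_file():
            # Identical content always produces the same SHA-256 digest
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            groups[digest].append(path.name)
    return [names for names in groups.values() if len(names) > 1]


# Demo with stand-in byte strings instead of real image files
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "a.jpg").write_bytes(b"same pixels")
    Path(tmp, "b.jpg").write_bytes(b"same pixels")
    Path(tmp, "c.jpg").write_bytes(b"different pixels")
    duplicates = find_exact_duplicates(tmp)

print(duplicates)  # [['a.jpg', 'b.jpg']]
```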

The enterprise edition of FiftyOne, known as FiftyOne Teams, caters to collaborative workflows with features like version control, access permissions, and integration with cloud storage solutions (e.g., S3) alongside annotation tools like Labelbox and CVAT. Voxel51 has also partnered with V7 Labs to facilitate smoother transitions between dataset curation and manual annotation.

Rethinking the Annotation Landscape

Voxel51’s auto-labeling insights challenge the foundational concepts of a nearly $1B annotation industry. In traditional processes, human input is mandatory for each image, incurring excessive costs and redundancies. Voxel51 proposes that much of this labor can now be automated.

With their innovative system, most images are labeled by AI, reserving human oversight for edge cases. This hybrid methodology not only minimizes expenses but also enhances overall data quality, ensuring that human expertise is dedicated to the most complex or critical annotations.

This approach aligns with the growing trend toward data-centric AI: a focus on optimizing training data rather than continuously tweaking model architectures.

Competitive Landscape and Industry Impact

Investors such as Bessemer view Voxel51 as the “data orchestration layer” for AI, comparable to the role DevOps tools came to play in software development. The company's open-source offerings have amassed millions of downloads, and a diverse community of developers and machine learning teams uses the platform worldwide.

While other startups like Snorkel AI, Roboflow, and Activeloop also focus on data workflows, Voxel51 distinguishes itself through its expansive capabilities, open-source philosophy, and robust enterprise-level infrastructure. Rather than competing with annotation providers, Voxel51’s solutions enhance existing services, improving efficiency through targeted curation.

Future Considerations: The Path Ahead

The long-term consequences of Voxel51’s approach could be profound. If widely adopted, it could significantly lower the barriers to entry in computer vision, opening opportunities for startups and researchers who lack extensive labeling budgets.

This strategy not only reduces costs but also paves the way for continuous learning systems, in which model performance is monitored, failures are flagged for human review, and models are retrained, all within a single streamlined workflow.

Ultimately, Voxel51 envisions a future where AI evolves not just with smarter models, but with smarter workflows. In this landscape, annotation is not obsolete but is instead a strategic, automated process guided by intelligent oversight.

Here are five FAQs regarding Voxel51’s new auto-labeling technology:

FAQ 1: What is Voxel51’s new auto-labeling technology?

Answer: Voxel51’s auto-labeling technology uses pre-trained foundation models, such as YOLO-World and Grounding DINO, to annotate visual data automatically. This reduces the time and resources needed for manual labeling, making annotation significantly more cost-effective.

FAQ 2: How much can annotation costs be reduced with this technology?

Answer: Voxel51 reports that auto-labeling can be up to 100,000 times more cost-effective than manual labeling. In the company's case study, labeling 3.4 million objects cost $1.18, compared with over $124,000 for a manual approach. A reduction of this size lets organizations allocate resources more efficiently and focus on critical aspects of their projects.

FAQ 3: What types of data can Voxel51’s auto-labeling technology handle?

Answer: The auto-labeling technology is versatile and can handle various types of data, including images, videos, and other multimedia formats. This makes it suitable for a broad range of applications in industries such as healthcare, automotive, and robotics.

FAQ 4: How does the auto-labeling process work?

Answer: The process applies pre-trained foundation models, some with zero-shot capabilities, to label new data without task-specific training. Active learning then identifies the complex cases that require human review, so new data is labeled quickly, with high accuracy and minimal human intervention.

FAQ 5: Is there any need for human oversight in the auto-labeling process?

Answer: While the technology significantly automates the labeling process, some level of human oversight may still be necessary to ensure quality and accuracy, especially for complex datasets. Organizations can use the technology to reduce manual effort while maintaining control over the final output.