Revolutionizing Robotic Vision: University of Maryland’s Breakthrough Camera System

A team of computer scientists at the University of Maryland has unveiled a groundbreaking camera system that could transform how robots perceive and interact with their surroundings. Inspired by the involuntary movements of the human eye, this technology aims to enhance the clarity and stability of robotic vision.

The Limitations of Current Event Cameras

Event cameras, a relatively new sensor technology in robotics, track moving objects well but struggle to produce sharp, blur-free images when a scene involves a lot of motion. That is a significant obstacle for robots, self-driving cars, and other systems that rely on precise visual information for navigation and decision-making.

Learning from Nature: The Human Eye

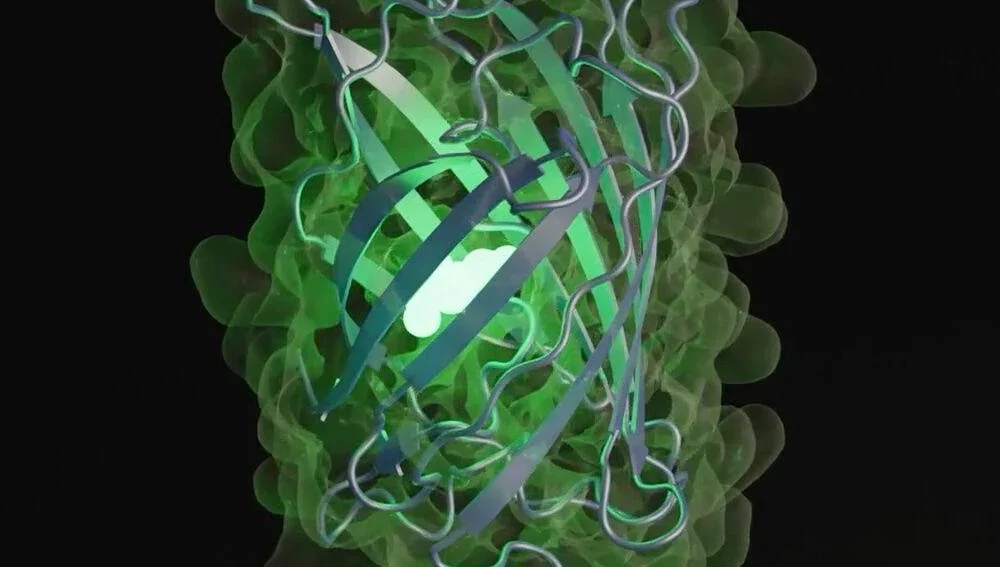

Seeking a solution, the research team turned to the human eye, focusing on microsaccades: tiny involuntary eye movements that help maintain focus and perception. By replicating this biological process, they developed the Artificial Microsaccade-Enhanced Event Camera (AMI-EV), which is intended to give robotic vision stability and clarity closer to that of human sight.

AMI-EV: Innovating Image Capture

At the heart of the AMI-EV is a mechanical replica of microsaccades. A rotating prism inside the camera redirects the incoming light, simulating the eye’s small movements and stabilizing object textures. Specialized software then compensates for the prism’s motion, so the AMI-EV can capture clear, precise images even in highly dynamic scenes, which addresses a key weakness of current event cameras.
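One way to picture how software could work together with a rotating prism is sketched below. This is an illustration under stated assumptions, not the AMI-EV's actual algorithm: it assumes the prism shifts the image on the sensor along a circle of known radius at a known rotation rate, so the shift can be subtracted from each event's pixel position. The function names, the `(t, x, y, polarity)` event layout, and the parameters `radius_px` and `rot_hz` are all hypothetical.

```python
import math


def prism_offset(t, radius_px, rot_hz, phase=0.0):
    """Assumed image shift (dx, dy) in pixels caused by the prism at time t.

    Hypothetical model: the image moves on a circle of radius `radius_px`,
    completing `rot_hz` revolutions per second.
    """
    angle = 2.0 * math.pi * rot_hz * t + phase
    return radius_px * math.cos(angle), radius_px * math.sin(angle)


def simulate_static_point(x0, y0, radius_px, rot_hz, n=8, duration=0.01):
    """Events a motionless point would produce while the prism rotates.

    Each event is a tuple (t, x, y, polarity); polarity is fixed to 1 here.
    """
    events = []
    for i in range(n):
        t = i * duration / n
        dx, dy = prism_offset(t, radius_px, rot_hz)
        events.append((t, x0 + dx, y0 + dy, 1))
    return events


def compensate(events, radius_px, rot_hz, phase=0.0):
    """Subtract the known prism-induced shift from every event position."""
    corrected = []
    for t, x, y, polarity in events:
        dx, dy = prism_offset(t, radius_px, rot_hz, phase)
        corrected.append((t, x - dx, y - dy, polarity))
    return corrected


if __name__ == "__main__":
    raw = simulate_static_point(50.0, 20.0, radius_px=3.0, rot_hz=100.0)
    fixed = compensate(raw, radius_px=3.0, rot_hz=100.0)
    for (t, rx, ry, _), (_, fx, fy, _) in zip(raw, fixed):
        print(f"t={t:.5f}  raw=({rx:.2f}, {ry:.2f})  corrected=({fx:.2f}, {fy:.2f})")
```

In this toy setup the raw events of a motionless point trace a small circle, while the corrected positions collapse back onto a single location. That is the sense in which a deliberate, known motion can keep a scene visible to the sensor without smearing the final result.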

Potential Applications Across Industries

From robotics and autonomous vehicles to virtual reality and security systems, the AMI-EV’s image capture opens the door to a wide range of applications. Its high frame rates and strong performance across lighting conditions make it well suited to tasks that depend on fast, reliable perception.

Future Implications and Advantages

Because it captures rapid motion at higher frame rates than traditional cameras, the AMI-EV can depict fast movement smoothly and realistically. Its performance in challenging lighting also makes it a candidate for use in healthcare, manufacturing, astronomy, and other fields. As the technology matures, integration with machine learning and miniaturization could further extend its capabilities.

Q: How does the camera system mimic the human eye for enhanced robotic vision?

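A: It mechanically reproduces microsaccades, the tiny involuntary movements of the human eye. A rotating prism inside the camera simulates those movements and stabilizes object textures, and specialized software turns the result into clear images.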

Q: Can the camera system adapt to different lighting conditions?

A: Yes. The AMI-EV is described as performing well across a range of lighting conditions, including challenging ones.

Q: How does the camera system improve object recognition for robots?

A: By stabilizing object textures through artificial microsaccades, the camera captures clearer, more precise images in dynamic scenes, giving robots better visual input for identifying and interacting with their surroundings.

Q: Is the camera system able to track moving objects in real-time?

A: Tracking moving objects is already a strength of event cameras. The AMI-EV builds on it by capturing clear images at high frame rates in highly dynamic scenes, which suits applications such as surveillance and navigation.

Q: Can the camera system be integrated into existing robotic systems?

A: Yes, the camera system is designed to be easily integrated into a variety of robotic platforms, providing enhanced vision capabilities without requiring significant modifications.
