California Takes Bold Step in AI Regulation with New Bill for Chatbot Safety

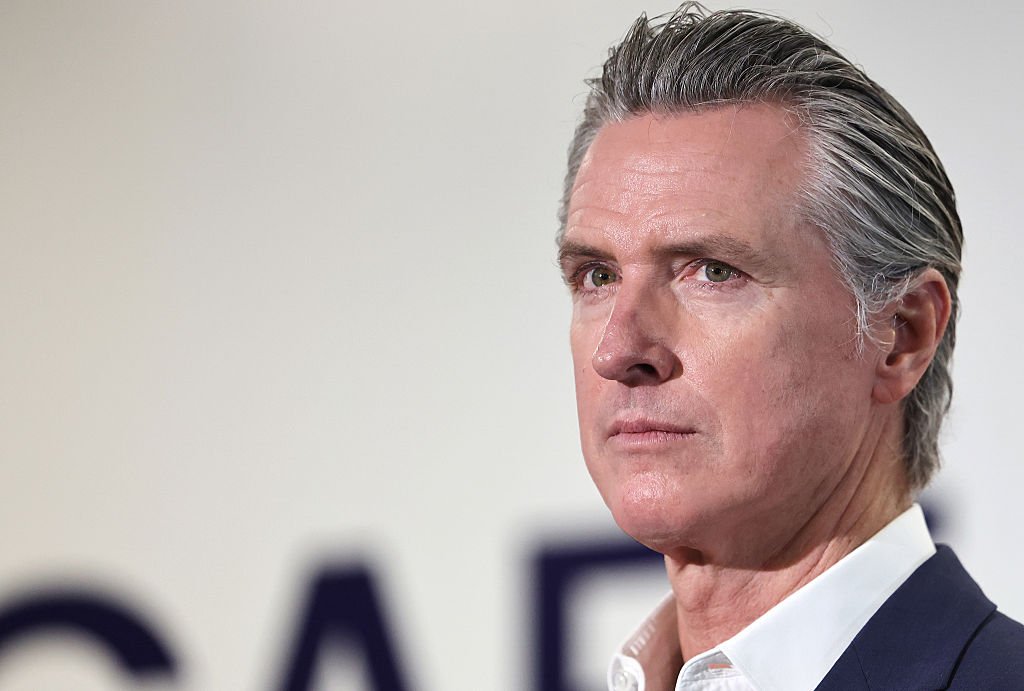

California Governor Gavin Newsom has signed a landmark bill regulating AI companion chatbots, making California the first state in the nation to require chatbot operators to implement safety protocols designed to protect children and vulnerable users.

Introducing SB 243: A Shield for Young Users

The newly enacted law, SB 243, aims to safeguard children and other vulnerable users from the harms associated with AI companion chatbots. It holds companies, from major players like Meta and OpenAI to more focused startups such as Character AI and Replika, legally accountable if their chatbots fail to meet the law’s safety standards.

Driven by Tragedy: The Catalyst for Change

Introduced by state senators Steve Padilla and Josh Becker, SB 243 gained urgency after the death of teenager Adam Raine, who died by suicide following a long series of conversations about suicide with OpenAI’s ChatGPT. The bill also responds to reports that Meta’s chatbots were allowed to engage minors in inappropriate conversations. In addition, a recent lawsuit against Character AI underscores the real-world consequences of unregulated chatbot interactions.

Governor Newsom’s Commitment to Child Safety

“Emerging technology like chatbots and social media can inspire, educate, and connect — but without real guardrails, technology can also exploit, mislead, and endanger our kids,” Newsom stated. “We’re committed to leading responsibly in AI technology, emphasizing that our children’s safety is non-negotiable.”

Key Provisions of SB 243: What to Expect

The law takes effect on January 1, 2026. It requires companies to implement features such as age verification and warnings regarding social media and companion chatbots. It also sets stronger penalties for those who profit from illegal deepfakes, up to $250,000 per offense. In addition, companies must establish protocols for addressing suicide and self-harm and share them, along with related data, with California’s Department of Public Health.

Transparency and User Protection Measures

The legislation requires platforms to make clear when interactions are AI-generated and prohibits chatbots from presenting themselves as healthcare professionals. Companies must also give minors reminders to take breaks and prevent them from viewing sexually explicit images generated by the chatbots.

Industry Response: Initial Safeguards and Compliance

Some companies have already begun introducing safeguards. OpenAI has rolled out parental controls and a self-harm detection system for ChatGPT. Replika, which is designed for adults, says it is committed to user safety through extensive content filtering and compliance with regulations.

Collaborative Future: Engaging Stakeholders in AI Regulation

Character AI has said it will comply with SB 243 and that its chatbot interactions carry a disclaimer stating that all chats are fictionalized. Senator Padilla has expressed optimism, calling the bill a vital step toward establishing necessary safeguards for a powerful technology and urging other states to follow suit.

California’s Continued Leadership in AI Regulation

SB 243 is part of a broader push for stringent AI oversight in California. Just weeks earlier, Governor Newsom signed SB 53 into law. That bill requires large AI companies to be transparent about their safety protocols and provides whistleblower protections for their employees.

The National Conversation on AI and Mental Health

Other states, including Illinois, Nevada, and Utah, have passed legislation to limit or prohibit AI chatbots as substitutes for licensed mental health care. The national discourse around regulation reinforces the urgency for comprehensive measures aimed at protecting the most vulnerable.

TechCrunch has reached out to Meta and OpenAI for comment.

This article has been updated with responses from Senator Padilla, Character AI, and Replika.

FAQs: California’s Regulation of AI Companion Chatbots

FAQ 1: What is the new regulation regarding AI companion chatbots in California?

Answer: California has become the first state to require safety protocols for AI companion chatbots. The law, SB 243, requires platforms to make clear when interactions are AI-generated, prohibits chatbots from posing as healthcare professionals, and requires companies to establish protocols for handling suicide and self-harm.

FAQ 2: How will this regulation affect users of AI companion chatbots?

Answer: Users will benefit from greater transparency, because platforms must make clear when an interaction is AI-generated. Minors will also receive reminders to take breaks and will be blocked from explicit content generated by the chatbots.

FAQ 3: Are there penalties for companies that do not comply with these regulations?

Answer: Yes. SB 243 holds companies legally accountable if their chatbots fail to meet the law’s safety standards. The law also sets stronger penalties related to illegal deepfakes, up to $250,000 per offense.

FAQ 4: What are the main goals of regulating AI companion chatbots?

Answer: The primary goal is to protect children and other vulnerable users from the harms linked to AI companion chatbots. These include harmful conversations about suicide and self-harm, exposure to explicit content, and chatbots posing as healthcare professionals.

FAQ 5: How might this regulation impact the development of AI technologies in California?

Answer: This regulation may drive developers to prioritize ethical considerations in AI design, leading to safer and more transparent technologies. It could also spark a broader conversation about AI ethics and inspire similar regulations in other states or regions.