Revolutionizing Manufacturing: The Rise of Dark Factories in China

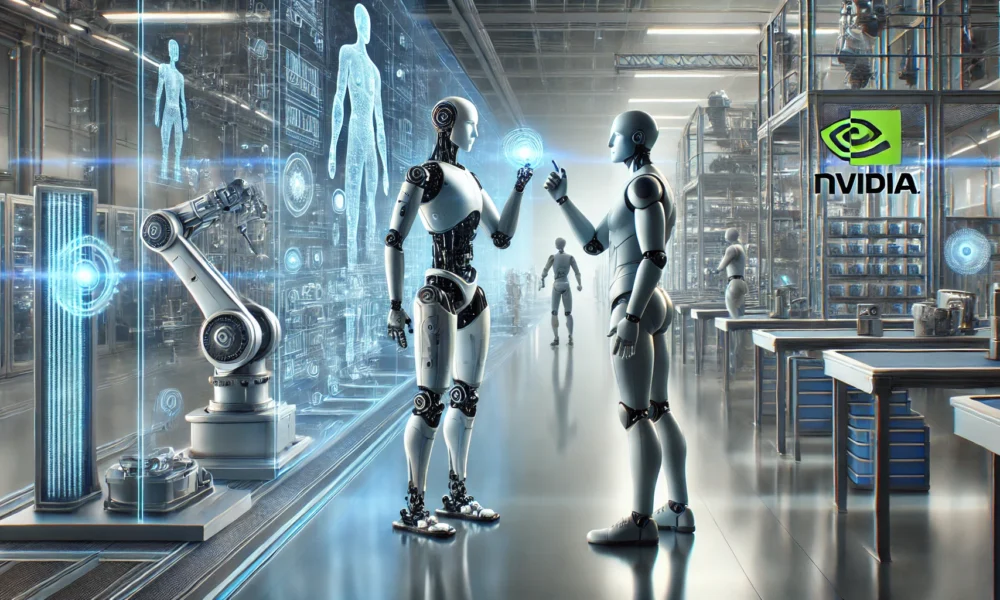

In today’s fast-changing industrial world, AI-driven automation is no longer just a part of the future; it is happening right now. One of the most notable examples of this transformation is the rise of dark factories in China.

Companies like Xiaomi are at the forefront, pushing manufacturing efficiency and precision to new levels. However, as the technology spreads, it raises crucial questions about the future of work, the potential for job displacement, and how societies will adapt to this new approach to production.

Understanding Dark Factories: The Future of Automated Production

A dark factory is a fully automated production facility without human workers. The term comes from the fact that these facilities need no conventional lighting, since no humans work on the factory floor. Instead, advanced machines, AI systems, and robotics manage every aspect of production, including assembly, inspection, and logistics.

Xiaomi’s smart factory in Changping exemplifies this new manufacturing paradigm in China. The factory produces one smartphone per second using AI and robotics to achieve exceptional efficiency and precision.
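To put the one-smartphone-per-second figure in perspective, the short Python sketch below converts a per-second rate into daily and yearly totals. The `uptime` parameter and the assumption of round-the-clock operation every day of the year are illustrative, not figures reported for the Changping factory, so the results are best read as an upper bound.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds in a day


def units_per_day(units_per_second, uptime=1.0):
    """Daily output for a line running at a given rate.

    `uptime` is a hypothetical fraction of the day the line is
    actually producing (1.0 means no downtime at all).
    """
    return int(round(units_per_second * SECONDS_PER_DAY * uptime))


daily = units_per_day(1)   # one smartphone per second, no downtime
yearly = daily * 365       # assumes production on every day of the year

print(daily)   # 86400
print(yearly)  # 31536000
```

Lowering `uptime` (for example, `units_per_day(1, uptime=0.5)`) shows how quickly maintenance windows or changeovers would reduce that ceiling.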

The Impact of AI-Driven Automation on China’s Industrial Landscape

China has become a global leader in industrial automation, driven by its efforts to adopt advanced technologies like AI, robotics, and smart manufacturing. The government invests heavily in these areas to boost the country’s manufacturing power and stay competitive in a fast-changing global market.

In 2023 alone, China spent $1.4 billion on robotics research and development, accelerating its move toward automation.

Navigating the Future of Work in an AI-Driven Economy

Dark factories, in which machines and AI systems entirely replace human workers, are quickly becoming one of the most visible signs of AI-driven automation. These fully automated facilities operate 24/7 without lighting or human intervention and are transforming industries globally.

While automation is eliminating some jobs, it is also creating new opportunities. Roles in AI programming, robotics maintenance, and data analysis are expected to grow.

Embracing Change: Balancing Technology and Human Potential

AI-driven automation is transforming the manufacturing industry, especially in China’s dark factories. While these advancements offer significant gains in efficiency and cost reduction, they raise important concerns about job displacement, skills gaps, and social inequality.

The future of work will require a balance between technological progress and human potential. By focusing on reskilling workers, promoting AI ethics, and encouraging collaboration between humans and machines, we can ensure that automation enhances human labor rather than replaces it.


What is AI-driven automation in manufacturing?

AI-driven automation in manufacturing refers to the use of artificial intelligence technologies to automate various processes within factories, such as production, quality control, and maintenance. This can include using AI algorithms to optimize production schedules, identify defects in products, and predict when machines will need maintenance.
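As a rough illustration of the maintenance-prediction idea, the Python sketch below flags sensor readings that deviate sharply from the recent past, a simple rolling z-score check. The function name, the `window` and `threshold` values, and the vibration readings are all made up for this example; production systems typically rely on far richer models and data.

```python
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings far outside the preceding window.

    A reading is flagged when it lies more than `threshold` standard
    deviations from the mean of the `window` readings before it.
    """
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            continue  # flat history: no meaningful z-score
        if abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical vibration readings (mm/s) from one machine, with one spike.
vibration_mm_s = [2.0, 2.1, 1.9, 2.0, 2.1, 2.0, 1.9, 2.1, 4.5, 2.0]
print(flag_anomalies(vibration_mm_s))  # [8]
```

A flagged index could then prompt an inspection before the machine fails, which is the basic promise of predictive maintenance.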

How is AI-driven automation reshaping the future of work in manufacturing?

AI-driven automation is transforming the manufacturing industry by enabling companies to achieve higher levels of efficiency, productivity, and quality. This often means that fewer human workers are needed to perform repetitive or dangerous tasks, while more skilled workers are required to oversee and maintain the AI systems. Overall, the future of work in manufacturing is becoming more focused on collaboration between humans and AI technology.

What are some benefits of AI-driven automation in manufacturing?

Some benefits of AI-driven automation in manufacturing include increased productivity, improved product quality, reduced human error, and lower operational costs. By using AI technologies to automate tasks that are time-consuming or prone to human error, companies can achieve higher levels of efficiency and reliability in their manufacturing processes.

What are some potential challenges of implementing AI-driven automation in manufacturing?

Some potential challenges of implementing AI-driven automation in manufacturing include the initial cost of investing in AI technologies, the need for skilled workers to maintain and oversee the AI systems, and the potential for job displacement among workers who are no longer needed for manual tasks. Companies must also consider the ethical implications of using AI technologies in their manufacturing processes.

How can manufacturers prepare for the future of work with AI-driven automation?

Manufacturers can prepare for the future of work with AI-driven automation by investing in training programs for their employees to learn how to work alongside AI technologies, developing clear communication strategies to keep workers informed about changes in their roles, and continuously monitoring and optimizing their AI systems to ensure they are achieving the desired results. It is also important for manufacturers to consider the long-term impact of AI-driven automation on their workforce and to plan for potential changes in job roles and responsibilities.