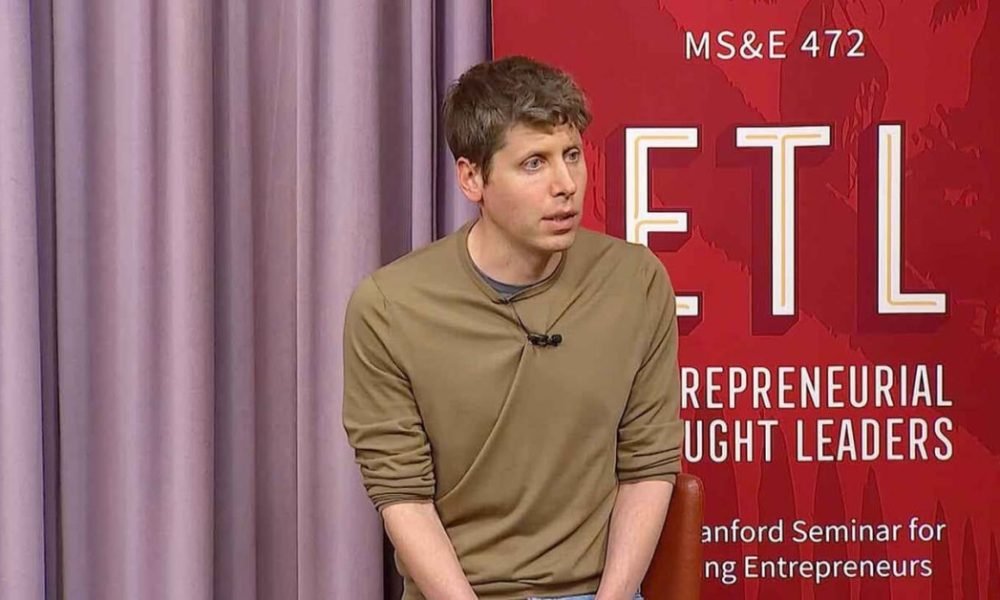

OpenAI’s Sam Altman Plans Major Visit to India Amid AI Summit Buzz

OpenAI CEO Sam Altman is preparing to visit India in February, his first trip to the country in nearly a year. The visit coincides with a high-profile AI summit in New Delhi that will draw tech leaders from companies including Meta, Google, and Anthropic.

India’s Groundbreaking AI Impact Summit 2026

India will host its first major global AI event, the India AI Impact Summit 2026, from February 16 to 20. According to the summit's website, attendees will include Nvidia CEO Jensen Huang, Google CEO Sundar Pichai, and Anthropic CEO Dario Amodei, along with prominent Indian business leaders such as Reliance Industries chairman Mukesh Ambani. Altman's attendance has not been officially confirmed, but he is expected to be there.

OpenAI’s Strategic Meetings During the Summit

According to sources, OpenAI plans to hold private meetings during the summit in New Delhi, which Altman is expected to attend. The company has also scheduled an event on February 19 for venture capitalists and industry executives.

Plans Subject to Change Amid Growing Tech Events

Altman's visit has not been publicly announced, and plans could still change. Several other U.S. companies are also organizing events around the summit: Anthropic will host a developers' day in Bengaluru on February 16, and Nvidia is planning an evening event in New Delhi during the summit week, a sign of how eager foreign firms are to engage with India's tech ecosystem.

Significance of Altman’s Visit to India

Altman last visited India in February 2025. He had said he planned to return later that year, after OpenAI announced a new office in New Delhi, but that trip never happened.

India: A Key Growth Market for AI

India is fast becoming a critical growth market for American AI companies. Anthropic recently opened an office in Bengaluru and appointed former Microsoft India managing director Irina Ghose to lead its local operations. Meanwhile, partnerships between Google and Reliance Jio, and between Perplexity and Bharti Airtel, are bringing AI services to millions of telecom subscribers.

OpenAI Expands Its Footprint in India

OpenAI has been expanding its operations in India, hiring for roles in enterprise sales, technical deployment, and AI regulation in New Delhi, Mumbai, and Bengaluru. India is ChatGPT's largest market by downloads and its second-largest by users.

Future Goals and Challenges in AI Infrastructure

Altman is expected to meet tech executives, startup founders, and government officials as OpenAI works to grow ChatGPT's enterprise adoption while keeping its mass-market appeal. The company is also eyeing India as a potential hub for infrastructure growth, especially after recent multi-billion-dollar investments by Google and Microsoft to expand their AI and cloud operations in the country.

Indian Government’s Aspirations for AI Investments

Meanwhile, the Indian government hopes the summit will cement India's position as a prime destination for large AI investments. The country's IT minister said the event could bring in as much as $100 billion in investment, and the government is also encouraging local startups to build AI solutions for domestic needs.

At the time of writing, OpenAI, India's IT ministry, and the summit organizers had not responded to requests for comment.

Here are five FAQs based on the topic of Sam Altman’s planned visit to India as AI leaders gather in New Delhi:

FAQ 1: Why is Sam Altman visiting India?

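Answer: Altman is expected to be in New Delhi for the India AI Impact Summit 2026 (February 16 to 20), where OpenAI plans private meetings and Altman is expected to meet tech executives, startup founders, and government officials. OpenAI is using the trip to push ChatGPT's enterprise adoption and explore India as an infrastructure hub. His visit has not been officially confirmed.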

FAQ 2: What events will take place during this visit?

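Answer: OpenAI plans private discussions during the summit and an event on February 19 for venture capitalists and industry executives. Other U.S. companies are holding their own events: Anthropic has a developers' day in Bengaluru on February 16, and Nvidia plans an evening event in New Delhi during the summit week.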

FAQ 3: Who are the other AI leaders expected to attend the events in New Delhi?

Answer: According to the summit's website, attendees include Nvidia CEO Jensen Huang, Google CEO Sundar Pichai, Anthropic CEO Dario Amodei, and Reliance Industries chairman Mukesh Ambani, along with other prominent Indian business leaders.

FAQ 4: How does this visit impact India’s AI landscape?

Answer: The visit could deepen OpenAI's presence in what is already ChatGPT's largest market by downloads, through enterprise deals, hiring, and possible infrastructure investment. It also fits the government's hope that the summit will attract up to $100 billion in AI investment.

FAQ 5: What topics are likely to be discussed during these gatherings?

Answer: Likely topics include enterprise adoption of AI, investment in AI and cloud infrastructure, and AI solutions built for domestic Indian needs, alongside broader questions of regulation, international collaboration, and AI's role in economic development.