Intel’s Q3 Earnings Report: A Surprising Turnaround for the Semiconductor Giant

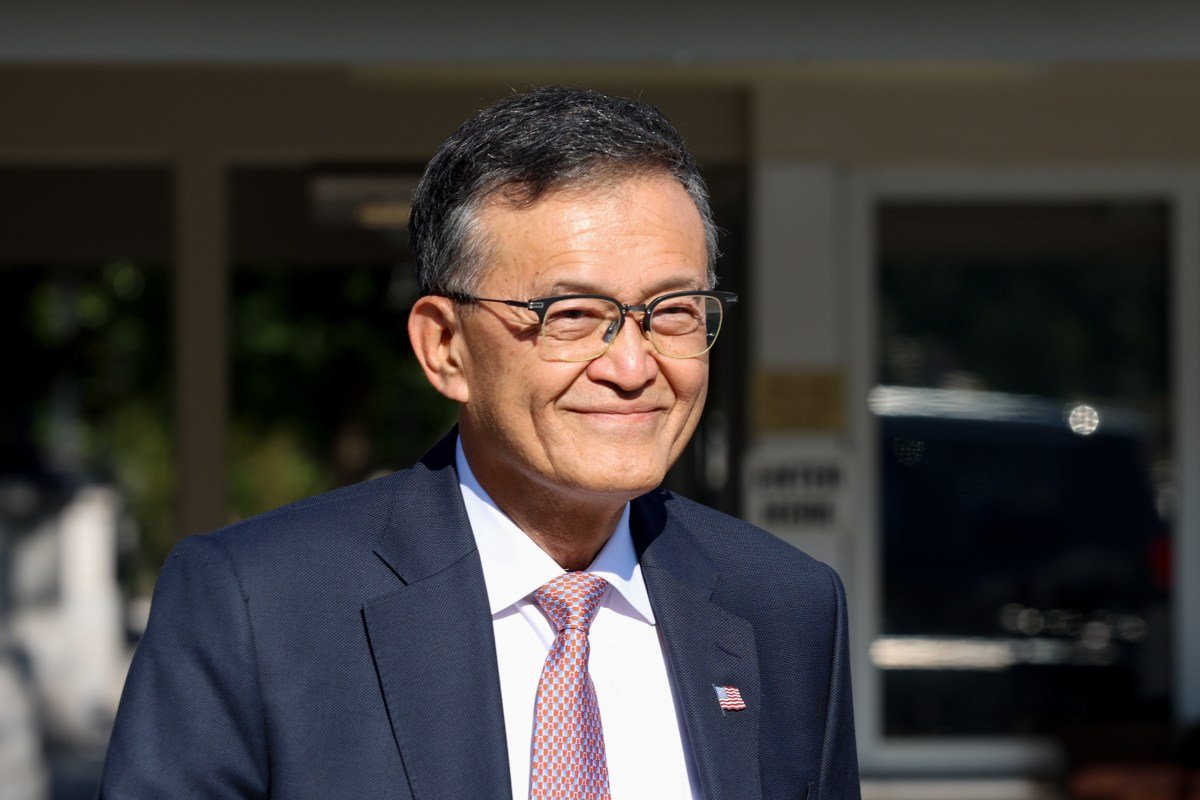

Intel’s third-quarter earnings beat Wall Street forecasts on higher revenue and significant cost cuts. CEO Lip-Bu Tan is trying to revitalize the struggling semiconductor giant, helped by substantial investments in recent months.

$4.1 Billion in Net Income Signals Recovery

Intel’s latest revenue figures, together with $4.1 billion in net income, paint a brighter picture than its recent string of quarterly losses. The recovery story, however, also rests on extensive cost cutting, including layoffs, and on high-profile investments from SoftBank, Nvidia, and the U.S. government.

Strong Investments Boost Confidence

During the third quarter, Intel added $20 billion to its balance sheet, a move that sent its stock soaring. This growth was largely fueled by three major investments over the past three months.

Notable Investments Strengthening Intel

In August, SoftBank invested $2 billion, followed closely by the U.S. government’s unprecedented 10% equity stake in Intel. So far, the company has received $5.7 billion of the planned $8.9 billion from the government. Additionally, Nvidia purchased a $5 billion stake in Intel as part of a collaborative chip development agreement.

Strategic Moves Bolster Future Prospects

“The actions we implemented have strengthened our balance sheet, offering us greater operational flexibility and enabling us to execute our strategy with confidence,” Tan remarked during the earnings call. “I am particularly grateful for the trust shown by President Trump and Secretary [Howard] Lutnick, as it underscores Intel’s strategic significance as the only U.S.-based semiconductor company excelling in cutting-edge logic and R&D.”

Significant Transactions Enhance Financial Position

Intel also received $5.2 billion from the sale of its ownership stake in Altera, a company it had owned since 2015; the sale was finalized on September 12. The company has also divested its stake in Mobileye, an autonomous driving technology firm.

Quarterly Revenue on the Rise

Intel reported an $800 million increase in quarterly revenue, to $13.7 billion from $12.9 billion in the previous quarter. Net income of $4.1 billion marks a significant turnaround from the $16.6 billion loss reported in the same quarter last year.

Challenges Ahead: The Foundry Business

Despite a robust quarter, detailed plans for Intel’s foundry business, which manufactures custom chips for clients, remain scarce. Under Tan’s leadership, this segment has faced challenges and has been the subject of significant layoffs.

Government Investment Tied to Foundry Strategy

The importance of the foundry business has not gone unnoticed by the Trump administration, which has made clear that a key condition of its investment bars Intel from exiting its foundry operations for the next five years.

Wall Street Watching for Long-Term Growth Indicators

Wall Street analysts are closely monitoring Intel’s foundry division for signs of sustainable growth. In discussions with TechCrunch, analysts emphasized that what Intel needs for its recovery is not cash but a robust strategy for its foundry segment.

Future of Foundry Business Looks Promising

Tan expressed confidence in the foundry business’s potential to meet rising chip demand, although specifics were limited. He noted, “Building a world-class foundry is a long-term endeavor built on trust.” He emphasized the need to delight customers and meet their diverse requirements for performance, yield, cost, and schedules.

Frequently asked questions about Intel’s recovery and its foundry business:

FAQ 1: What is meant by Intel’s recovery?

Answer: Intel’s recovery refers to the company’s efforts to overcome the operational and competitive challenges it has faced in recent years. These efforts include investing in technology, enhancing manufacturing capabilities, and improving its product lineup to regain market leadership in semiconductor manufacturing.

FAQ 2: Why is Intel focusing on its foundry business?

Answer: Intel is focusing on its foundry business to diversify its revenue streams and leverage its manufacturing capabilities to support both internal and external clients. This strategy aims to position Intel as a key player in the foundry space, addressing the growing demand for semiconductor manufacturing.

FAQ 3: What role does the foundry business play in Intel’s strategy?

Answer: The foundry business is central to Intel’s strategy as it allows the company to capitalize on lucrative contracts with other chipmakers. By providing manufacturing services, Intel aims to improve its financial stability and become a major player in the competitive foundry market, particularly as demand for semiconductors continues to rise.

FAQ 4: How is Intel improving its foundry operations?

Answer: Intel is investing heavily in expanding its manufacturing facilities, enhancing production processes, and adopting advanced technologies. These improvements include plans for new fabs (fabrication plants) and partnerships that can increase capacity and bring cutting-edge processes to its foundry offerings.

FAQ 5: What impact could Intel’s foundry focus have on the semiconductor industry?

Answer: If successful, Intel’s focus on its foundry business could reshape the semiconductor landscape by providing more competitive options for companies looking to outsource chip manufacturing. This could lead to increased innovation, improved product availability, and greater cost efficiency within the industry.