Hinge Launches AI-Powered “Convo Starters” to Spark Meaningful Conversations

Many Hinge daters are frustrated by matches who like their profiles but never start a conversation. The result is often an awkward silence that leaves one person to break the ice, and many fall back on clichéd lines or small talk such as “How are you?”

Revolutionizing Connections with AI

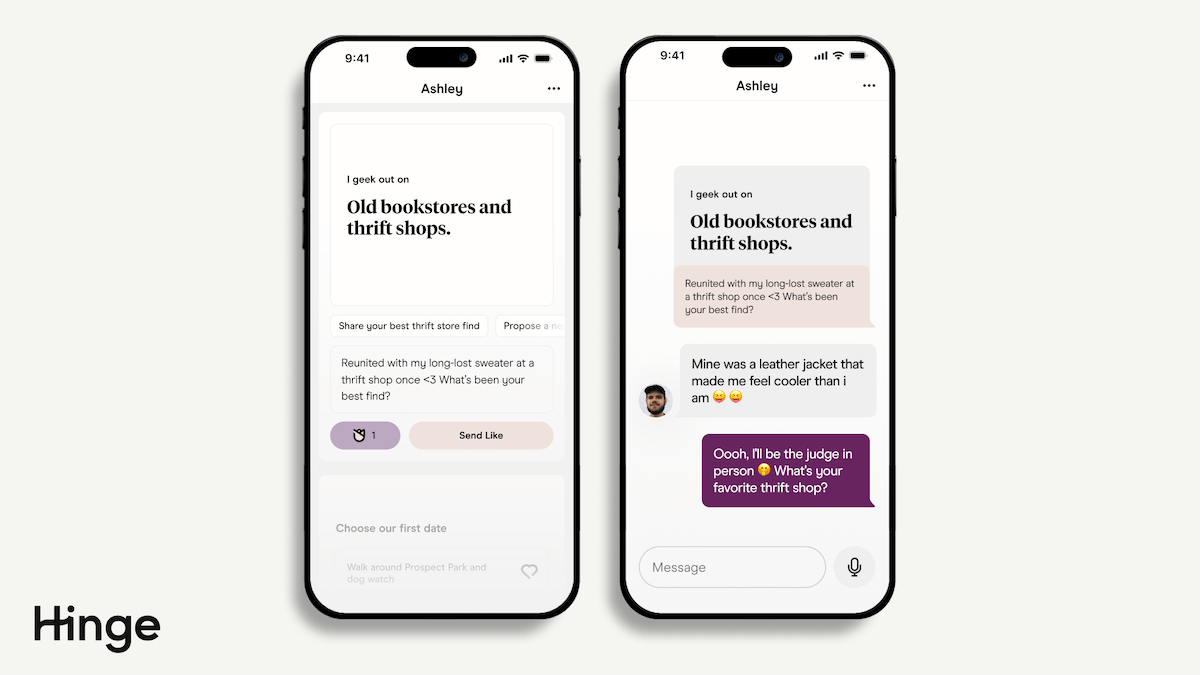

To tackle this issue, Hinge has introduced “Convo Starters,” an AI-powered feature that suggests personalized opening lines.

Empowering Daters with Tailored Suggestions

The feature aims to inspire users and boost their confidence when sending a first message. When users like a profile, they’ll now see three customized tips beneath each photo and prompt. The AI evaluates the profile being liked and generates recommendations based on its individual images or prompts. For instance, if a potential match is shown playing chess, Hinge might suggest opening with a question about board games.

Backed by User Insights

The launch of Convo Starters is a response to user feedback. Hinge’s research found that 72% of its users are more likely to engage with someone when a like is paired with a message. The data also shows that users who comment alongside their likes are twice as likely to secure dates.

Continuing the AI Evolution

This feature builds on the introduction of Hinge’s AI-driven Prompt Feedback, which assesses user prompts and provides tailored advice to enhance them, encouraging users to share more engaging details about their lives.

Addressing User Concerns

Despite the benefits of AI features, many users, particularly Gen Z, express discomfort with AI in online dating. A Bloomberg Intelligence survey indicates that Gen Z is more hesitant than older generations about using AI for tasks such as crafting profile prompts and responding to messages.

Investing in the Future of AI Dating

Hinge’s parent company, Match Group, is committing approximately $20 million to $30 million towards advancing its AI initiatives.


FAQs About Hinge’s New AI Feature

1. What is Hinge’s new AI feature?

Answer: Convo Starters is an AI feature that suggests personalized opening messages when a user likes a profile, helping daters move beyond generic small talk such as “How are you?”

2. How does the AI suggest conversation topics?

Answer: The AI analyzes the photos and prompts on a profile and suggests a topic or question tied to each one. For example, a photo of someone playing chess might lead to a suggested question about board games.

3. Can I customize the AI suggestions?

Answer: Yes! Users have the option to refine AI-generated prompts based on their preferences. You can specify the type of conversations you enjoy or indicate topics you’d like to avoid, allowing for a more tailored dating experience.

4. Is using the AI feature free?

Answer: The AI feature is integrated into Hinge’s app and is available to both free and premium users. While some advanced functionalities may require a subscription, the core features designed to assist in engaging conversation are accessible to all users.

5. Will the AI take over my conversations?

Answer: No, the AI is designed to assist, not replace. It offers suggestions and prompts to enhance your interactions, but users maintain full control over their conversations. You can choose to use the AI’s suggestions or continue chatting in your own style.
