OpenAI Launches Free ChatGPT Go Plan in India for One Year

OpenAI is offering a complimentary one-year ChatGPT Go plan to users in India who register during a limited promotional period starting November 4, aiming to strengthen its foothold in a key market.

Limited-Time Offer for New and Existing Users

OpenAI announced the promotion on Tuesday but has not said how long the sign-up window will stay open. Existing ChatGPT Go subscribers in India also qualify for the free 12-month plan.

Affordable Subscription Options

ChatGPT Go launched in India in August at under $5 per month, making it OpenAI’s most affordable paid subscription. The plan has since expanded to Indonesia and, earlier this month, to 16 more countries in Asia.

India: A Prime Market for OpenAI

With more than 700 million smartphone users and over a billion internet subscribers, India is a crucial market for OpenAI. The company opened a New Delhi office in August and is building a local team to expand its presence there.

Monetization Challenges

Although India has been described as OpenAI’s second-largest market after the U.S., turning users into paying subscribers has proved difficult. The ChatGPT app was downloaded more than 29 million times in the 90 days leading up to August but generated just $3.6 million in in-app purchases, according to data reviewed by TechCrunch.

Enhanced Features with ChatGPT Go

ChatGPT Go offers ten times the free tier’s usage limits for generating responses, creating images, and uploading files. OpenAI says it also provides improved memory for more personalized responses.

Strong User Adoption

“Since our initial launch of ChatGPT Go in India, the creativity and adoption from our users have been remarkable,” stated Nick Turley, VP and Head of ChatGPT. “We are eager to witness the amazing creations and learning that will emerge from our user community.”

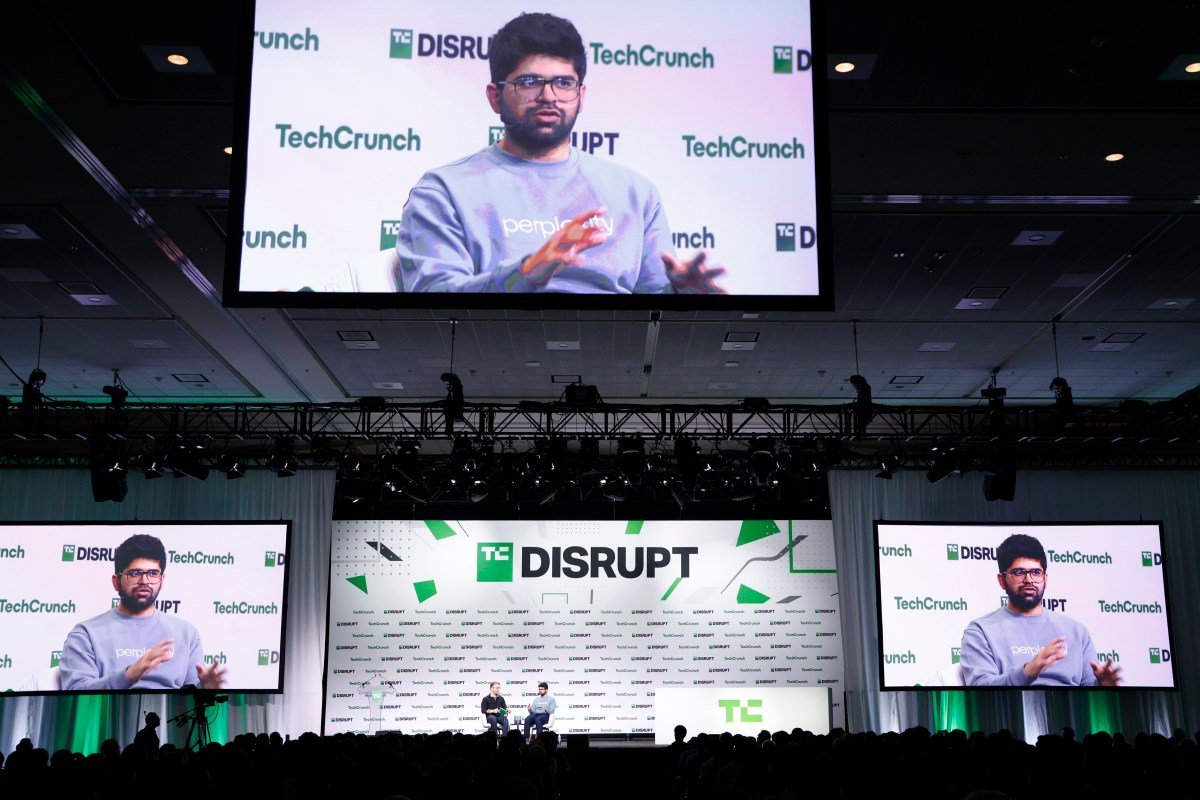

Competition in the Indian Market

OpenAI faces competition in India from Perplexity and Google, both of which are courting the country’s young user base. Perplexity recently partnered with Airtel to offer free Perplexity Pro subscriptions to Airtel’s 360 million subscribers, while Google has launched a free one-year AI Pro plan for students in India.

Upcoming Announcements and Events

OpenAI is set to host its DevDay Exchange developer conference in Bengaluru on November 4, where it is expected to announce India-specific updates aimed at local developers and enterprises. With millions of daily users, India has quickly become one of the fastest-growing markets for ChatGPT.

Here are five FAQs about OpenAI’s offer of a free year of ChatGPT Go for users in India:

FAQ 1: What is ChatGPT Go?

Q: What is ChatGPT Go?

A: ChatGPT Go is OpenAI’s lowest-priced paid ChatGPT subscription, introduced in India in August for under $5 per month. It offers ten times the free tier’s usage limits for generating responses, creating images, and uploading files, along with improved memory for more personalized responses.

FAQ 2: How can I access free ChatGPT Go?

Q: How can I access the free ChatGPT Go offer?

A: Users in India who sign up for ChatGPT during the limited promotional period beginning November 4 receive ChatGPT Go free for one year. OpenAI has not said how long the promotional window will last.

FAQ 3: Are there any limitations to the free offer?

Q: Are there any limitations to the free one-year access?

A: The free year covers the standard ChatGPT Go plan, which offers ten times the free tier’s usage limits but is not unlimited. Check OpenAI’s website for the specific terms and any restrictions that apply.

FAQ 4: What happens after the one year is over?

Q: What happens after my free year of ChatGPT Go expires?

A: OpenAI has not said what happens when the free year ends. Users will likely need to move to a paid plan to keep ChatGPT Go’s features; the plan normally costs under $5 per month in India. Check OpenAI’s terms for renewal and pricing details.

FAQ 5: Is this offer available only to new users?

Q: Is this offer available only to new users?

A: No. The offer is open to users in India who sign up during the promotional period, and existing ChatGPT Go subscribers in India also qualify for the free 12-month plan.